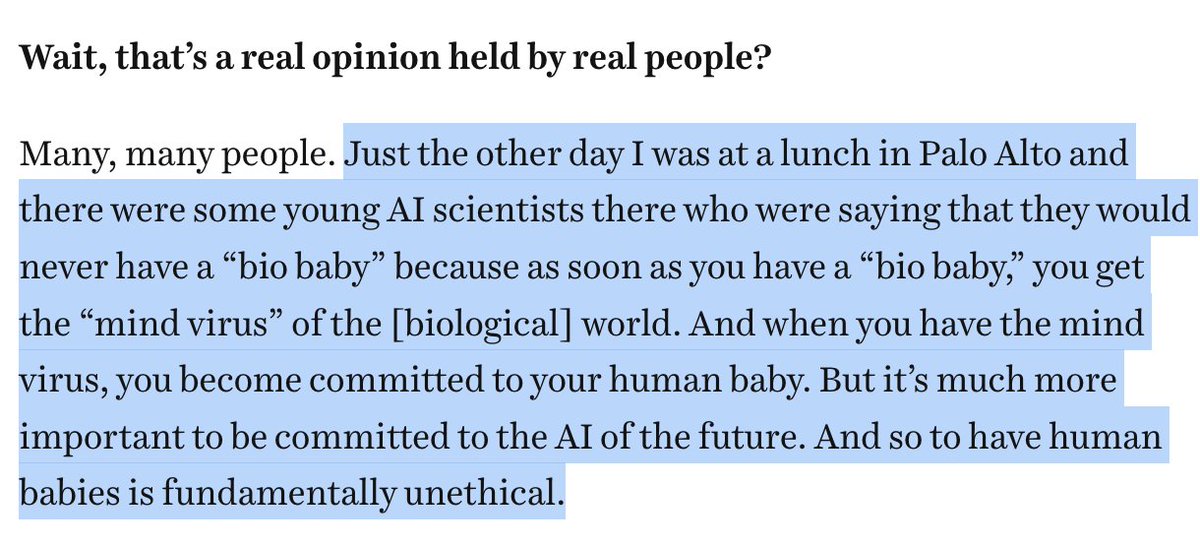

Here's Robin Hanson, a colleague of the longtermist William MacAskill at the Future of Humanity Institute, imagining what a world full of simulated people (or "ems" for "brain emulations") would be like. The phrase "elite ethnicities" is striking:

Citing Nick Bostrom, the Father of Longtermism, Hanson adds that biotech may enable us to create super-smart designer babies, who would be good candidates to have their brains scanned and uploaded to computers (to live in a virtual reality world full of ems).

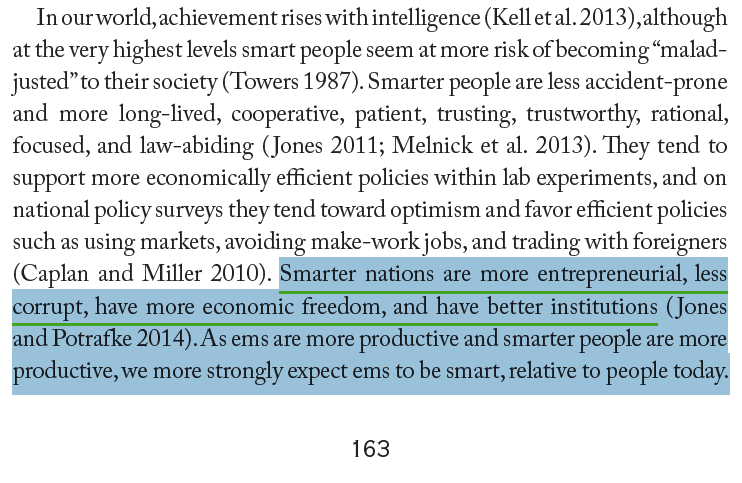

Indeed, Hanson claims that ems would be highly intelligent, reflecting the entrepreneurial spirit, freedom, etc. of what he describes as the "smarter nations" (you know which ones he's talking about).

Many "female" ems might be lesbians, Hanson argues, but "disproportionally few male ems may be gay." You really can't make this stuff up:

I could go on, but that's plenty enough for today. These excerpts are from Robin Hanson's "The Age of Em: Work, Love and Life when Robots Rule the Earth," published by Oxford University Press (@OUPAcademic). The point of the book is to try to picture what a world full of uploaded minds would look like. It explores, in other words, the "world of digital people" possibility at the top of this image from Holden Karnofsky.

Note that MacAskill says in his book "What We Owe the Future" that Karnofsky's "influence on me is so thoroughgoing that it permeates every chapter." These people are serious about such a future, and indeed many are eager to bring it about. #longtermism
