Author, Realtime Techpocalypse Newsletter. Co-host of Dystopia Now w/ comedian Kate Willett. Philosopher focused on all things related to human extinction.

In an undated letter of recommendation, Chomsky described Epstein as “a highly valued friend and regular source of intellectual exchange and stimulation,” adding that they met “half a dozen years ago” and have “been in regular contact since.”
https://x.com/SilvermanJacob/status/1989336932308877484

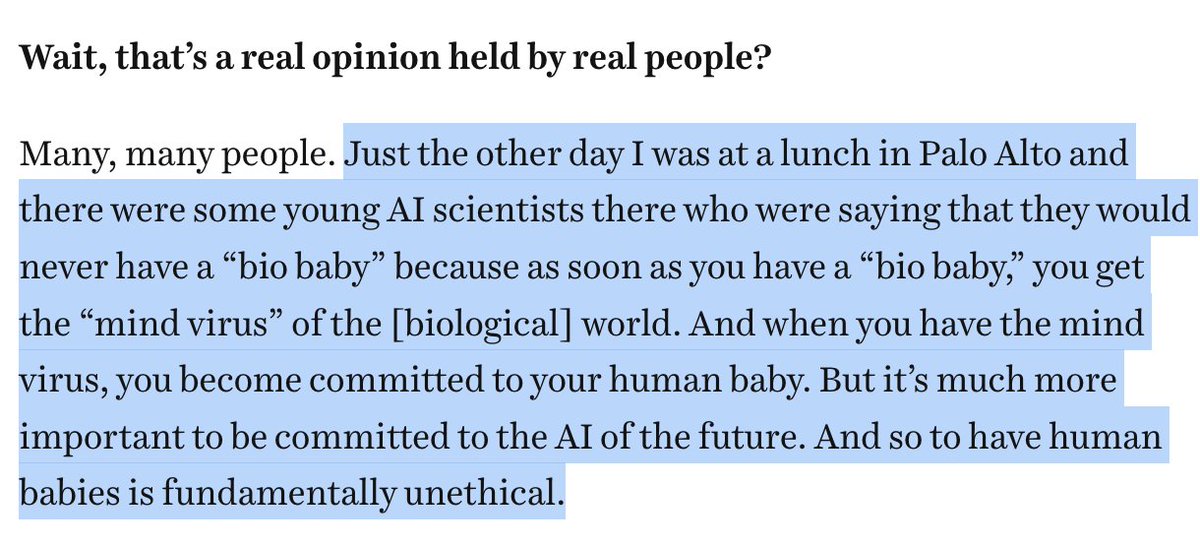

Jaron Lanier, the VR pioneer, reports in a recent Vox interview that many AI researchers in Palo Alto think that "it would be good to wipe out people" with AI. They also think it's "fundamentally unethical" to have "bio-babies," because the AI future is more important.

Jaron Lanier, the VR pioneer, reports in a recent Vox interview that many AI researchers in Palo Alto think that "it would be good to wipe out people" with AI. They also think it's "fundamentally unethical" to have "bio-babies," because the AI future is more important.

Here are all the articles I've written about this so far. Pro-extinctionism is not a fringe ideology, but the mainstream within certain tech circles. But don't take my word for it -- listen to what these people themselves have said: techpolicy.press/digital-eugeni…

Here are all the articles I've written about this so far. Pro-extinctionism is not a fringe ideology, but the mainstream within certain tech circles. But don't take my word for it -- listen to what these people themselves have said: techpolicy.press/digital-eugeni…

https://twitter.com/jasonwblakely/status/1938639600907612610

truthdig.com/articles/a-tal…

First, here's a link to the article: truthdig.com/articles/a-tal…

First, here's a link to the article: truthdig.com/articles/a-tal…

https://twitter.com/StopAI_Info/status/1927825757478473780

two distinct extinction scenarios. The first, terminal extinction, would happen if our species were to disappear entirely and forever. The second, final extinction, adds an additional condition. It would happen if our species were to disappear entirely and forever *without* us

I note that there are two factions within the MAGA movement: traditionalists like Steve Bannon and transhumanists like Elon Musk. The first are Christians, and the second embrace a new religion called "transhumanism" (the "T" in "TESCREAL").

I note that there are two factions within the MAGA movement: traditionalists like Steve Bannon and transhumanists like Elon Musk. The first are Christians, and the second embrace a new religion called "transhumanism" (the "T" in "TESCREAL").

As I write in my newest article (linked below), some scholars refer to evangelical Christian Zionists as the "Armageddon Lobby." But there's a new Armageddon Lobby that's taken hold in Silicon Valley. These people embrace what Rushkoff calls The Mindset.

As I write in my newest article (linked below), some scholars refer to evangelical Christian Zionists as the "Armageddon Lobby." But there's a new Armageddon Lobby that's taken hold in Silicon Valley. These people embrace what Rushkoff calls The Mindset.

https://twitter.com/DKokotajlo/status/1907868636288851992

an influential AI safety researcher once described to me as having the highest bullshit-to-word ratio of any book he's ever read. In many ways, this report (discussed in the podcast) is worse than theology, although it manages to give one the prima facie impression of rigor.

https://twitter.com/davetroy/status/1887437731686477914

destination? The answer comes from the TESCREAL worldview, which Dave Troy has written about before (I recommend his article!). The end-goal is a techno-utopian civilization of posthumans spread throughout our entire lightcone. No, I am not kidding: I know this because I used to

https://twitter.com/Helenreflects/status/1849485366568403442

this time it got reviewed: one reviewer liked it but opted to reject (??), while the other reviewer said, basically, that the paper is complete trash. Since then, I've sent it out to five other journals; all desk rejects. I'm about ready to post it on SSRN so that this Oxford prof

This is absolutely crucial for journalists, policymakers, academics, and the general public to understand. Many people in the tech world, especially those working on "AGI," are motivated by a futurological vision in which our species, humanity, has no place. We will either be

https://twitter.com/DanBuk4/status/1743323765902057862

worldview, tbh. I wasn't a philosophical pessimist or nihilist when I entered Germany, but, ironically, I left Germany as one. It's hard to express how much ghosting has impacted me. Studies, though, suggest that ghosting can harm ghosters, too. More here: truthdig.com/articles/what-…

After saying he doesn't advocate for violence, Yudkowsky has endorsed nanobots causing property damage to AI labs. The reference to "instant death" is that a rogue AGI could create self-replicating atmospheric diamondoid bacteria (what??) that blot out the sun, or something. 🤖🦠

After saying he doesn't advocate for violence, Yudkowsky has endorsed nanobots causing property damage to AI labs. The reference to "instant death" is that a rogue AGI could create self-replicating atmospheric diamondoid bacteria (what??) that blot out the sun, or something. 🤖🦠