@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam 1/ Mr. Kelly, you are operating on assumptions. You claim that "AI has not harmed actual people", which is entirely speculation, not fact. So let's talk facts, with the evidence you ask for. Let's begin, and please be patient, it's going to be a bit long 🧵👇:

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam 2/ A quick intro to who I am. I am a professional artist with over 15 years of experience in the film industry (Marvel, HBO, Universal etc), and I have been an advocate for my community, raising alarms about the unethical practices of AI companies and how they harm our community.

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam 3/ I've also been in constant communication with AI engineers, ethics advocates and researchers, for example @atg_abhishek of the Montreal AI Ethics Institute, to better understand these AI/ML models and really see why this has been so harmful. montrealethics.ai

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 4/ The technology:

As you know, AI/ML text-to-image models generate a wide array of imagery. What an AI/ML model is able to generate depends completely on the data it has been trained on. Let's first take a look at the data set in question: LAION.

laion.ai

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 5/ LAION: Stability AI funded the creation of the biggest and most utilized database, called LAION 5B. The LAION 5B database contains 5.85 billion image-text pairs, including copyrighted data and private data. This also includes trademarked works (cont.)

rom1504.github.io/clip-retrieval…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 6/ This IP and private data was all gathered without the knowledge or permission of the artists, individuals and businesses involved. Again, this was funded by Stability AI.

Here's an article showcasing how Stability AI funded LAION: techcrunch.com/2022/08/24/dee…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 7/ Data Laundering, From Research to Commercial:

Stability AI, Midjourney and even Google have utilized various datasets from LAION in their commercial models. This is surprising since LAION is supposed to be a non-commercial research data set (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 8/ This is Data Laundering. By using non-commercial research data commercially, companies can profit from data they normally wouldn't be able to use. All done without anyone's knowledge, consent, permission or even ability to opt out (more on that later) waxy.org/2022/09/ai-dat…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 9/ Our full names used, potential for Privacy violations: To generate media through AI/ML models, users have to input prompts telling the software what to generate. Artists' full names are commonly used—in fact, encouraged to be used—as part of those prompts (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek 10/ Full names used cont.:

An example of this is artist @GrzegorzRutko14, whose name and work have, as of today, been used 289,995 times between SD and MJ libraire.ai/search?q=Greg+…

This egregious use of our full names alongside our work can have many chilling effects (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 11/ Greg has spoken in depth about his concerns at seeing his work now having to compete against AI imagery in search results and social media. What effects can this have on his ability to advertise? What about the potential for fraud and identity theft? hyperallergic.com/766241/hes-big…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 12/ It's not just artists, it's the general public:

Within these data sets sensitive personal data has been found such as:

Medical Records: arstechnica.com/information-te…

Non-Consensual porn, executions and worse:

vice.com/en/article/93a…

Cont.

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 13/ Not just this, but early research indicates that LAION has scraped data from business websites such as Shopify (36,469,372), Pinterest (30,908,667), DeviantArt (1,435,184), heck even HomeAway (472,132).

https://twitter.com/ummjackson/status/1565136733250809857?s=20&t=l3cZcQY96ZFnOjlWEd2f8Q

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 14/ Lack of Consent, No Real Opt-Out and Bad Precedents:

All of this was done without anyone's knowledge or consent. None of my peers, and no one else in those databases, has been asked if they wanted to be a part of this. And again, these companies are profiting from it.

In fact (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 15/ Carolyn Henderson, wife of fine artist Steve Henderson, has been asking non-stop for him to be removed from the data sets, but the request has been ignored: technologyreview.com/2022/09/16/105…

This request is ignored because AI companies cannot comply. (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 16/ Once an AI model has been trained on data, it cannot unlearn. This is why promises of Opt-Out are suspect, because it simply doesn't address the issue that these models have already been trained on our data. Your own publication discussed this: wired.com/story/the-next…

Cont.

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 17/ But this is also an EGREGIOUS practice and precedent set by AI/ML companies: that they can now just grab all of your data (IP or private) and profit from it. They are taking the choice (perhaps even the right) to opt in away from affected and unsuspecting individuals. (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 18/ This practice sets a bad precedent for any company to arbitrarily take and use anyone’s intellectual property and personal data without their knowledge or permission, and without fair compensation! This has the potential for a LOT of harm.

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 19/ It can very HEAVILY Plagiarize:

This is an issue that even Stability AI recognizes (see their announcement on why they don't utilize copyrighted material in their Dance Diffusion audio models here:

https://twitter.com/kortizart/status/1583716918040530946?s=20&t=mb7fZCxtBdRnV5FSBsf2VQ)

(Cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 20/ Here's another thread to help you visualize how egregious plagiarism can be:

Attached is the generated image via Midjourney V4 with prompt

https://twitter.com/kortizart/status/1588915427018559490?s=20&t=mb7fZCxtBdRnV5FSBsf2VQ

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 21/ Intentional Harm:

Some AI users intentionally take the work of artists, against the artists' wishes. Talk to @samdoesarts, who has had AI users take his work and abuse him for asking that it not be used. Another story by @waxpancake: waxy.org/2022/11/invasi…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 22/ Massive Profits:

So let's not forget, Stability AI, Midjourney and other AI companies have made HUGE profits from all our data. These aren't insignificant, harmless sums: techcrunch.com/2022/10/17/sta…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 23/ Labor is being replaced. This is anecdotal, but I'm a solid source on this as a pro artist and someone who is a part of the art community. I've heard reports of artists losing work over this, and of entry-level artists' projects or opportunities getting cut. All done utilizing our own work.

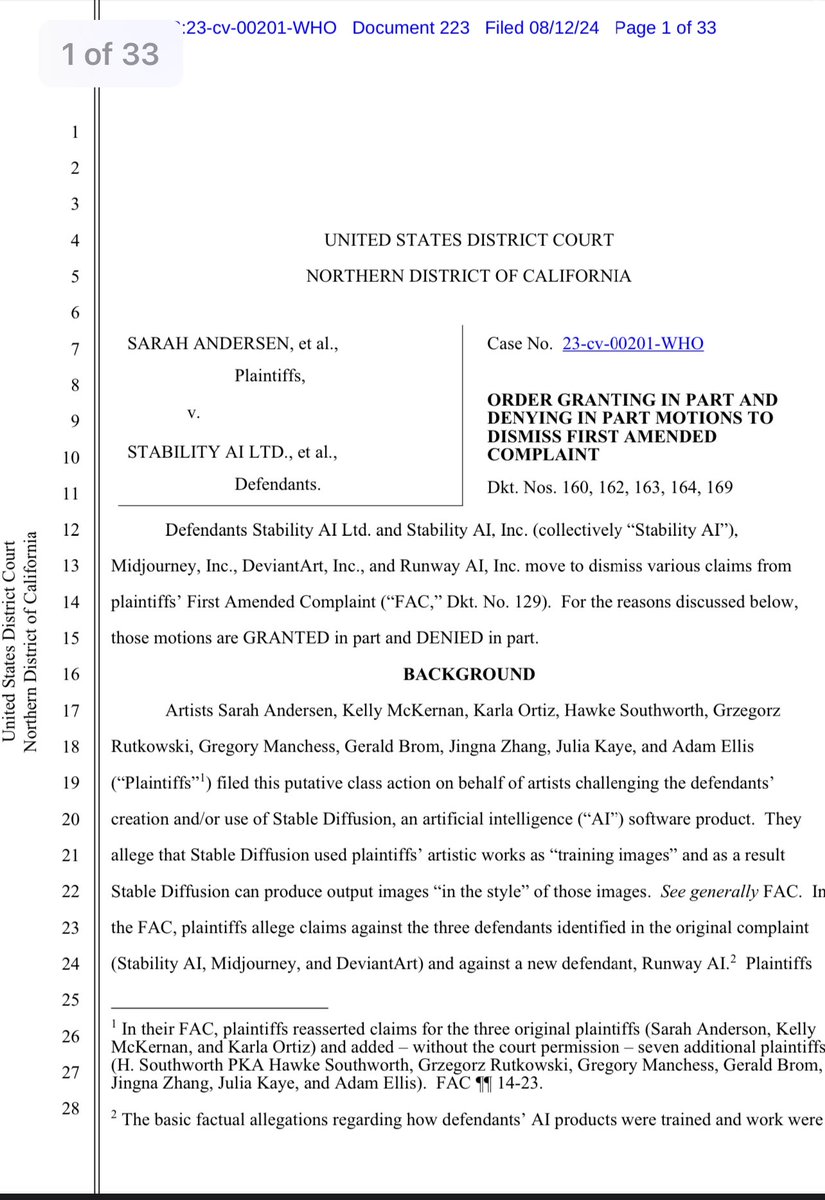

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 24/ Similar cases (though not exact) in the courts:

These practices are all so egregious that, in a case similar to ours, OpenAI/Microsoft's Copilot is being sued. Read about it here: githubcopilotinvestigation.com

and here: githubcopilotlitigation.com

complaint here: githubcopilotlitigation.com/pdf/1-0-github…

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 25/ Detrimental narratives

So with all this said, I hope you understand how detrimental and harmful your narratives can be. There is PLENTY of harm being done, and these are valid concerns that have nothing to do with silly philosophical debates. (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 26/ There is tangible harm being perpetrated by these companies against our communities and the general public. To dismiss this as hysteria is not only irresponsible, but harmful. Please, I urge you and your publication to do better. Oh and lastly (cont.)

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 27/ @glichfield you spoke about the Copyright Office. Just want to let you and @kevin2kelly know we've held a town hall with the US Copyright Office on this, including AI experts like @atg_abhishek and @GrzegorzRutko14. It is a good, thorough listen:

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake 28/ I thank you both for your attention and time. There is a LOT more to this story than what the article presents. So please do listen to those who really are affected by this (artists and the public). You'll find out, it's much more than hysteria ;)

https://twitter.com/kortizart/status/1593366847595827201?s=20&t=Vm_UyhhG4jWqVoflFtlX5Q

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake P.S. I hope it's OK, but I will also be sharing this on my wall. It took a long time to gather all this information, and I'd like my community to get a refresher on this. I am available to you both if you have any further questions.

You can email me via:

karlaortizart.com

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake P.S. #2: a quick correction to this tweet. I linked the wrong article showcasing how Stability AI funded LAION 5B.

Here’s the correct article with a screenshot. My apologies:

techcrunch.com/2022/08/12/a-s…

https://twitter.com/kortizart/status/1593725985991852034

@kevin2kelly @glichfield @FlyingTrilobite @neilturkewitz @WIRED @sciam @atg_abhishek @GrzegorzRutko14 @samdoesarts @waxpancake Update: We keep finding more ways in which AI production is harmful.

Imagine that you, as writers and photographers, found a site selling your name without your OK.

@WIRED, @kevin2kelly and @glichfield, with all the info above, would you be willing to reconsider this issue?

https://twitter.com/kortizart/status/1594859822674944001
