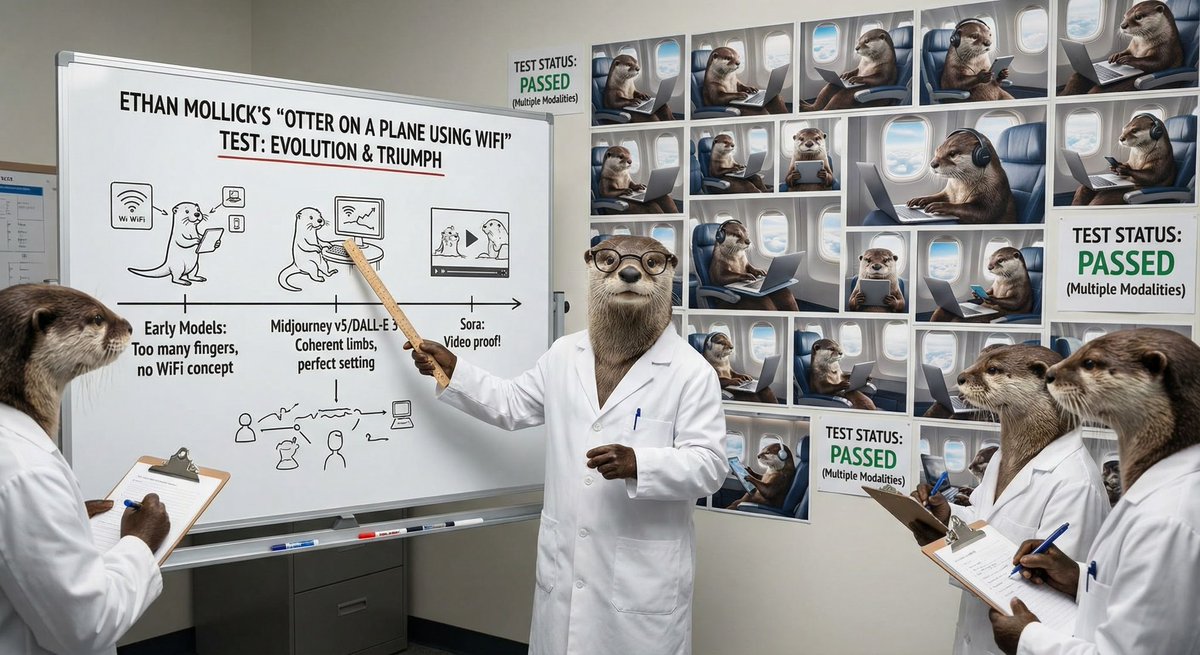

If you last checked in on AI image makers a month ago & thought “that is a fun toy, but is far from useful…” Well, in just the last week or so two of the major AI systems updated.

You can now generate a solid image in one try. For example, “otter on a plane using wifi” 1st try:

This is a classic case of disruptive technology, in the original Clay Christensen sense 👇

A less capable technology is developing faster than a stable dominant technology (human illustration), and is starting to handle more use cases. Except here it is happening very quickly.

https://twitter.com/emollick/status/1372973748614262794

Seriously, everyone whose job touches on writing, images, video, or music should realize that the pace of improvement here is very fast & also, unlike other areas of AI, like robotics, there are not any obvious barriers to improvement.

We should be thinking about what that means

https://twitter.com/emollick/status/1584743837637160960

Also worth looking at the details in the admittedly goofy otter pictures: the lighting looks correct (even streaming through the windows), everything is placed correctly, including the drink, the composition is varied, etc.

And this is without any attempts to refine the prompts.

Some more, again all first attempts with no effort to revise:

🦦 Otters fighting a medieval duel

🦦 Otter physicist lamenting the invention of the atomic bomb

🦦 Otter inventing the airplane in 1905

🦦 Otters playing chess in the fall

(These AIs came out just a few months ago)

AI image generation can now beat the Lovelace Test, a kind of Turing Test for creativity. It challenges AI to equal humans under constrained creativity.

Illustrating “an otter making pizza in Ancient Rome” in a novel, interesting way & as well as an average human is a clear pass!

And I picked otters randomly for fun

But since some comments are pointing out that nonhuman scenes may be easier, here are some results for the prompt “doctor on a plane using wifi”. We are good at picking out flaws in illustrations of people, but these are impressive & improving fast.

People keep asking what system I was using: it is MidJourney (I mentioned this in the thread)

If you want to try it, you get 25 uses for free & a guide is below. Be sure to add --v 4 at the end of your prompt to use the latest version, which is the one I use throughout the thread.

https://twitter.com/emollick/status/1554264506683072513

Here 👇 is a thread with more comparisons between MidJourney a month or so ago and MidJourney now. The pace is fast!

If you are trying MidJourney, the way to use the new version is to add --v 4 to the end of your prompt (I have no association with it or any AI company)

https://twitter.com/emollick/status/1589449800340029440

And I generated every one of these images from my phone in seconds & most were done over plane wifi (appropriately).

As to what this all means? There are many different ways human work will be impacted by AI, including boosting our capabilities 👇

But change is coming quickly!

https://twitter.com/emollick/status/1508651631151362050

If you want more connections between what is happening in research and how it affects the real world, I have a free Substack you can read.

For example, here is a post on boosting creativity… oneusefulthing.substack.com/p/how-to-be-mo…

Reminder: if you want to use the new MidJourney version 4 rather than the old version (from a month ago!), add “ --v 4” to the end of the prompt. The spaces are vital.
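If you build prompts in a script before pasting them in, the spacing rule can be sketched like this. The helper name `with_version` is hypothetical and not part of MidJourney or any tool; it only builds the prompt text, assuming the suffix format described in this thread.

```python
def with_version(prompt: str, version: int = 4) -> str:
    """Return the prompt ending in ' --v N' (hypothetical helper, not a MidJourney API)."""
    base = prompt.rstrip()          # drop trailing whitespace before appending
    suffix = f"--v {version}"       # note the space between --v and the number
    if base.endswith(suffix):       # already tagged: leave it alone
        return base
    return f"{base} {suffix}"       # a space separates the prompt from the flag


print(with_version("otter on a plane using wifi"))
```

Running the helper on an already tagged prompt leaves it unchanged, so it is safe to apply twice.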

Interestingly, version 4 “just works”, making it easier for everyone but power users who learned to craft prompts.
