You couldn't make it to #NeurIPS2022 this year?

No need to worry - I curated a summary for you below focussing on key papers, presentations and workshops in the buzzing space of ML in Biology and Healthcare 👇

Starting off with Keynote presentations:

Back prop has become the workhorse in ML-

@geoffreyhinton challenges the community to rethink learning, introducing the Forward-Forward Algorithm, in which each layer is trained to have high goodness on positive samples and low goodness on negative samples.
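
To make "goodness" concrete: in Hinton's paper, a layer's goodness is the sum of its squared activations, and the layer is trained to push that value above a threshold for positive samples and below it for negative ones. Below is a minimal sketch, assuming a single ReLU layer. The function names, the toy weights and the threshold value are illustrative assumptions, not code from the talk.

```python
import math

def relu_layer(x, weights):
    """Activations of one ReLU layer; `weights` holds one row per hidden unit."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

def goodness(activations):
    """Goodness of a layer: the sum of its squared activations."""
    return sum(a * a for a in activations)

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def ff_layer_loss(x_pos, x_neg, weights, theta):
    """Logistic loss with p(positive) = sigmoid(goodness - theta).

    The loss is low when the positive sample has goodness above theta
    and the negative sample has goodness below theta.
    """
    g_pos = goodness(relu_layer(x_pos, weights))
    g_neg = goodness(relu_layer(x_neg, weights))
    return softplus(theta - g_pos) + softplus(g_neg - theta)

# Toy example (all values are made up for illustration).
weights = [[1.0, 0.0], [0.0, 1.0]]
loss = ff_layer_loss(x_pos=[2.0, 1.0], x_neg=[0.5, -1.0], weights=weights, theta=2.0)
```

In a full implementation each layer would minimise this loss with its own local gradient step, so no error signal has to be propagated backwards through the network.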

Giving models an understanding of what they do not know is, for many decision-making applications, as important as providing accurate predictions

E Candès @Stanford gave a broad introduction to conformal prediction with quantile regression to filter out low confidence predictions
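
For readers new to the idea, here is a minimal sketch of the calibration step in split conformal prediction with quantile regression (conformalized quantile regression). It assumes lower and upper quantile predictions already exist from some fitted model. The function names and the toy numbers are hypothetical and not taken from the talk.

```python
import math

def cqr_correction(q_lo, q_hi, y, alpha):
    """Conformal correction computed on a held-out calibration set.

    q_lo, q_hi: predicted lower/upper quantiles for each calibration point
    y:          observed targets for the same points
    alpha:      target miscoverage rate (0.1 aims for 90% coverage)
    """
    n = len(y)
    # Score is positive when y falls outside [q_lo, q_hi], negative when inside.
    scores = sorted(max(lo - yi, yi - hi) for lo, hi, yi in zip(q_lo, q_hi, y))
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: only an infinite interval is valid.
        return math.inf
    return scores[k - 1]

def cqr_interval(lo, hi, correction):
    """Widen (or shrink) a predicted quantile band by the conformal correction."""
    return lo - correction, hi + correction

# Toy calibration data (made up): the model always predicts the band [0, 1].
cal_lo = [0.0] * 9
cal_hi = [1.0] * 9
cal_y = [0.5, 1.2, -0.1, 0.9, 1.5, 0.3, 0.7, 1.1, 0.2]

correction = cqr_correction(cal_lo, cal_hi, cal_y, alpha=0.1)
interval = cqr_interval(2.0, 3.0, correction)
```

One way to act on the result is to flag or drop predictions whose calibrated interval is wider than a tolerance chosen for the application.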

Causality is at the core of key problems in healthcare and is receiving more attn at NeurIPS:

In the causalML for real world impact workshop, causality pioneer Peter Spirtes @CarnegieMellon (of PC algo fame) outlines challenges, limitations and paths forward for causal inference

@stefanAbauer @CIFAR_News @KTHuniversity presented a wealth of recent research on real-world uses of causal inference including single-cell gene network inference, learning neural causal models from perturbational data, and optimal experimental design for causal discovery.

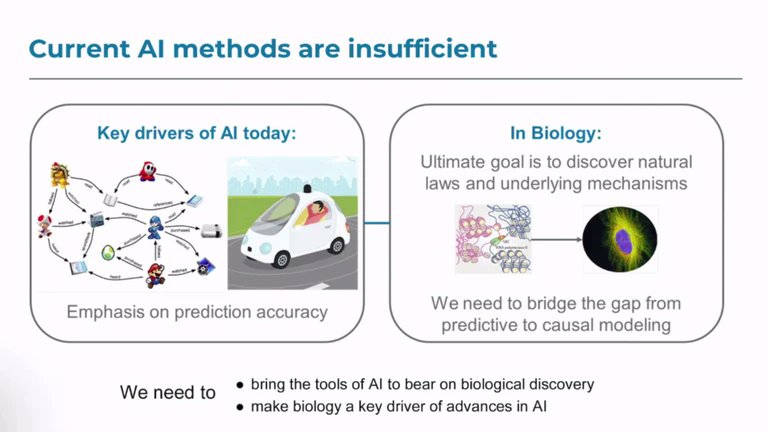

Deep learning for causality leader @rosemary_ke @DeepMind introduces us to Neural Causal Structure Learning - a synthesis of deep learning and causal discovery that promises flexibility and scalability to capture cause-effect relations with deep learning.

In a following session, @weatheralljim75 @AstraZeneca makes a strong argument for causal ML in medicines development to enable a deeper understanding of mechanisms of therapies, and outlines some of the challenges faced by practitioners.

Causality in biology leader @CarolineUhler @MIT makes the case for the new methods needed to truly move towards causality in ML and introduces us to an impactful application to cancer immunotherapy for which a USD 50,000 challenge has been opened: broadinstitute.org/news/eric-and-…

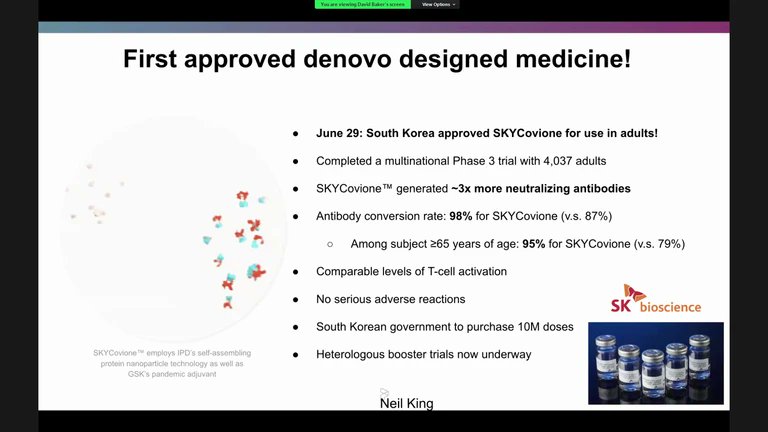

In the AI for Science workshop, David Baker @UWproteindesign gives us a whirlwind tour of the exciting progress in de novo protein design with ML incl the first approved de-novo designed medicine, amyloid binding, protein/NA complexes and RFdiffusion for unconditional generation

In AI4Mat workshop, @cwcoley @MIT presents recent advances in automated material synthesis that promise to lead to algorithmic "self-driving labs" that plan and execute synthesis experiments built on data-driven ML approaches.

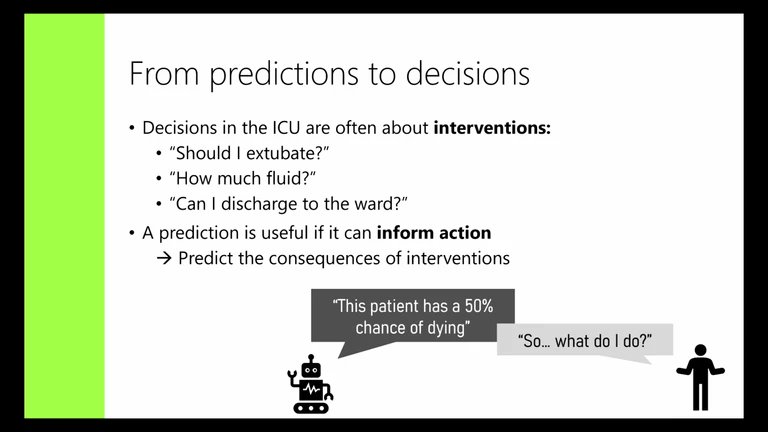

@_hylandSL @MSFTResearch tackles the challenging setting of using ML in intensive and perioperative care in her amazing time series for health workshop talk - providing an excellent outline of how to approach, specify, represent and act on interdisciplinary health/ML problems.

In the same session, @DaniCMBelg @DeepMind provides an excellent overview of how to ask the right questions, refine metrics, optimise data sampling, and better understand the clinical context and big picture to truly advance ML for healthcare.

Onto the papers:

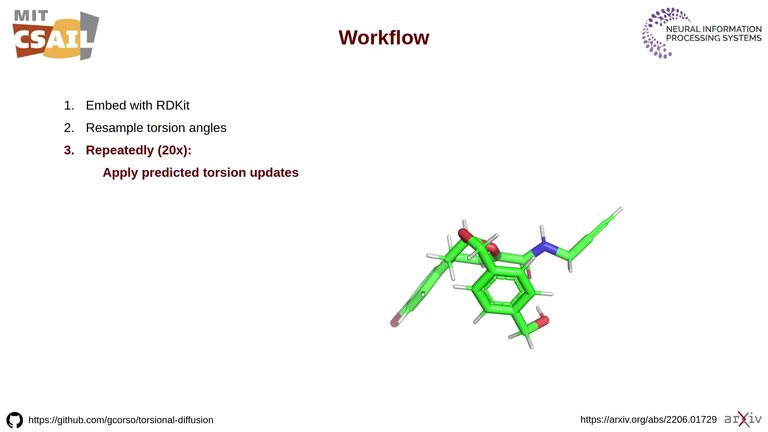

Bowen Jing @GabriCorso @MIT et al presented their Torsional Diffusion framework for conformer generation that achieved state-of-the-art performance on the GEOM dataset using fewer denoising steps than Euclidean diffusion methods.

Paper: openreview.net/forum?id=w6fj2…

Hazal Koptagel @KTHuniversity et al introduce a new variational inference based approach (VaiPhy) for phylogenetics based on two novel samplers: SLANTIS for tree topologies and JC sampler for sampling branch lengths from the Jukes-Cantor model.

Paper: openreview.net/forum?id=TIXwB…
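
As background, the Jukes-Cantor model treats all four nucleotides symmetrically, so its substitution probabilities depend only on the branch length. The sketch below illustrates that standard closed form only. It is not the VaiPhy samplers, and the function name is hypothetical.

```python
import math

def jc69_transition_prob(same, t):
    """Jukes-Cantor substitution probability along a branch of length t.

    t is measured in expected substitutions per site.
    same=True:  probability that the nucleotide is unchanged at the end of the branch.
    same=False: probability of ending in one specific different nucleotide.
    """
    decay = math.exp(-4.0 * t / 3.0)
    if same:
        return 0.25 + 0.75 * decay
    return 0.25 - 0.25 * decay

# Example: a branch of length 0.1 substitutions per site.
p_same = jc69_transition_prob(True, 0.1)
p_diff = jc69_transition_prob(False, 0.1)
```

At length zero nothing changes, and for very long branches every nucleotide becomes equally likely (probability 1/4).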

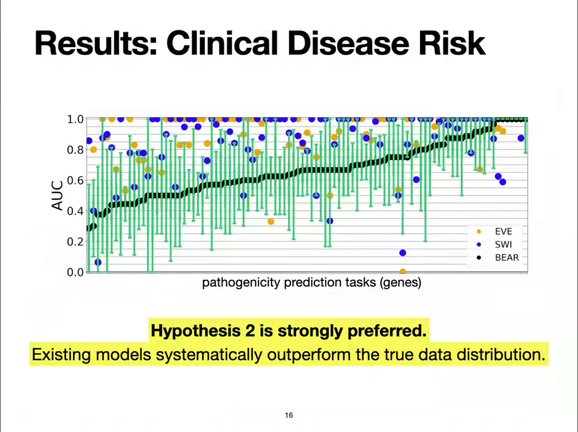

@EliWeinstein6 @Columbia et al study misspecification in generative models for estimating molecular fitness from evolutionary sequence data and show that fitness is not identifiable from observational data alone.

Paper: openreview.net/forum?id=CwG-o…

Slides: nips.cc/media/neurips-…

With graph structured data being abundant in health applications, @davidbuterez @Cambridge_Uni et al investigated neural readouts for graph neural networks and demonstrated strong empirical results on over 40 datasets incl. high throughput screening data

Paper: openreview.net/forum?id=yts7f…

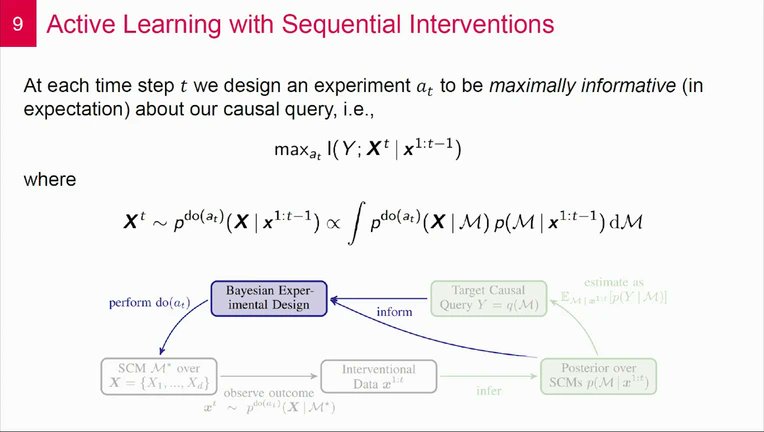

Last but not least, @chritoth @tugraz et al present a Bayesian approach to active learning that integrates causal inference and causal reasoning. The presented simulation results show attractive data efficiency for the proposed approach.

Paper: openreview.net/forum?id=r0bjB…

Overall, exciting observations are:

-the emerging synthesis of deep learning and causality

-the ongoing reinvention of the entire healthcare stack with a data-driven lens

-increasing bio/health presence, but (in contrast to ICML) still small vs vision and text at #NeurIPS2022

(DISCLAIMER: The above list is a personal curation that most certainly missed many key contributions (in particular the many excellent posters!) and is only intended to be a starting point for your own exploration.)
