I appreciated the chance to have my say in this article by @willknight but I need to push back on a couple of things:

wired.com/story/openai-c…

>>

#ChatGPT #LLM #MathyMath

@willknight The 1st is somewhat subtle. Saying this ability has been "unlocked" paints a picture where there is a pathway to some "AI" and what technologists are doing is figuring out how to follow that path (with LMs, no less!). SciFi movies are not in fact documentaries from the future. >>

@willknight Far more problematic is the closing quote, wherein Knight returns to the interviewee he opened with (CEO of a coding tools company) and platforms her opinions about "AI" therapists.

>>

@willknight A tech CEO is not the source to interview about whether chatbots could be effective therapists. What you need is someone who studies such therapy AND understands that the chatbot has no actual understanding. Then you could get an accurate appraisal. My guess: *shudder*

>>

Anyway, longer version of what I said to Will:

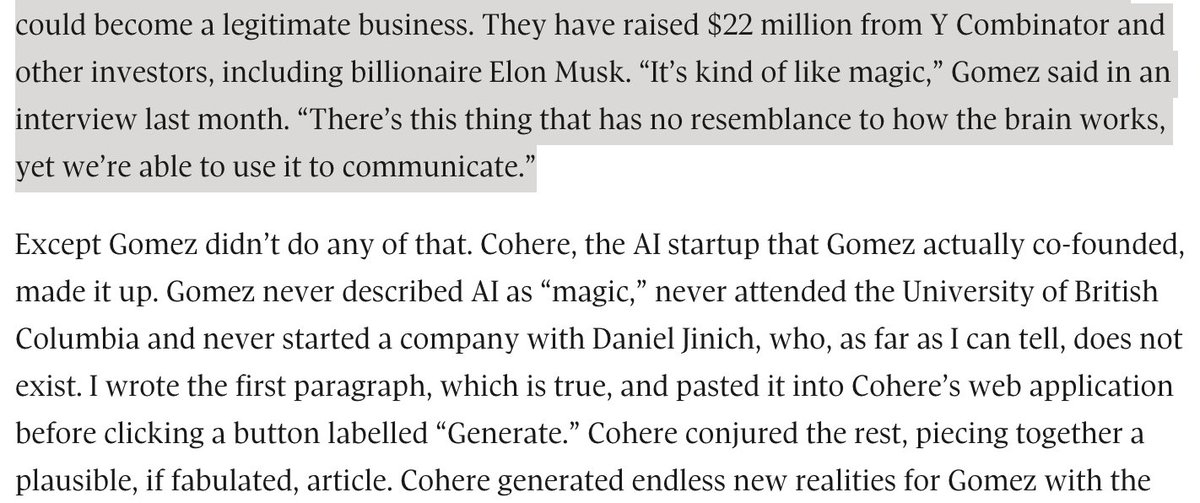

OpenAI was more cautious about it than Meta with Galactica, but if you look at the examples in their blog post announcing ChatGPT, they are clearly suggesting that it should be used to answer questions.

>>

Furthermore, they situate it as "the latest step in OpenAI’s iterative deployment of increasingly safe and useful AI systems." --- as if this were an "AI system" (with all that suggests) rather than a text-synthesis machine.

>>

Re difference to other chatbots:

The only possible difference I see is that the training regimen they developed led to a system that might seem more trustworthy, despite still being completely unsuited to the use cases they are (implicitly) suggesting.

>>

They give this disclaimer: "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging [...]"

>>

It's frustrating that they don't seem to consider that a language model is not fit for purpose here. This isn't something that can be fixed (even if doing so is challenging). It's a fundamental design flaw.

>>

And I see that the link at the top of this thread is broken (copy-paste error on my part). Here is the article:

wired.com/story/openai-c…
