We're seeing multiple folks in #NLProc who *should know better* bragging about using #ChatGPT to help them write papers. So, I guess we need a thread of why this is a bad idea:

>>

1- The writing is part of the doing of science. Yes, even the related work section. I tell my students: Your job there is to show how your work is building on what has gone before. This requires understanding what has gone before and reasoning about the difference.

>>

The result is a short summary for others to read that you, the author, vouch for as accurate. In general, the practice of writing these sections in #NLProc (and I'm guessing CS generally) is pretty terrible. But off-loading this to text synthesizers will only make it worse.

>>

2- ChatGPT etc. are designed to create confident-sounding text. If you think you'll throw in some ideas and then evaluate what comes out, are you really in a position to do that evaluation? If it sounds good, are you just gonna go with it? Minutes before the submission deadline?

>>

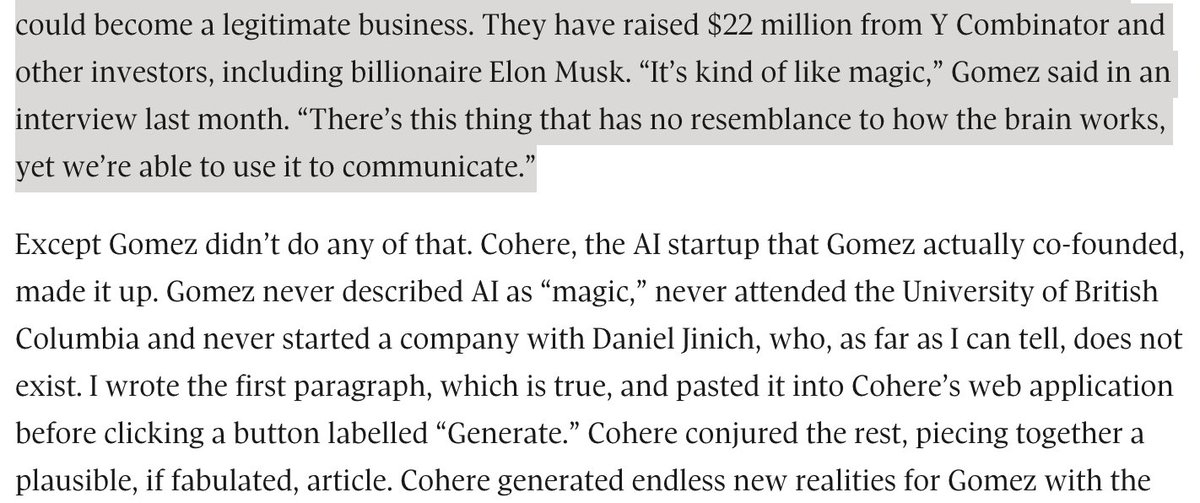

3- It breaks the web of citations: If ChatGPT comes up with something that you wouldn't have thought of but you recognize as a good idea ... and it came from someone else's writing in ChatGPT's training data, how are you going to trace that & give proper credit?

>>

4- Just stop it with calling LMs "co-authors" etc. Just as with testifying before Congress, scientific authorship is something that can only be done by someone who can stand by their words (see: Vancouver convention).

>>

https://twitter.com/emilymbender/status/1576718655840477184

>>

5- I'm curious what the energy costs are for this. Altman says the compute behind ChatGPT queries is "eye-watering". If you're using this as a glorified thesaurus, maybe just use an actual thesaurus?

>>

https://twitter.com/sama/status/1599669571795185665

>>

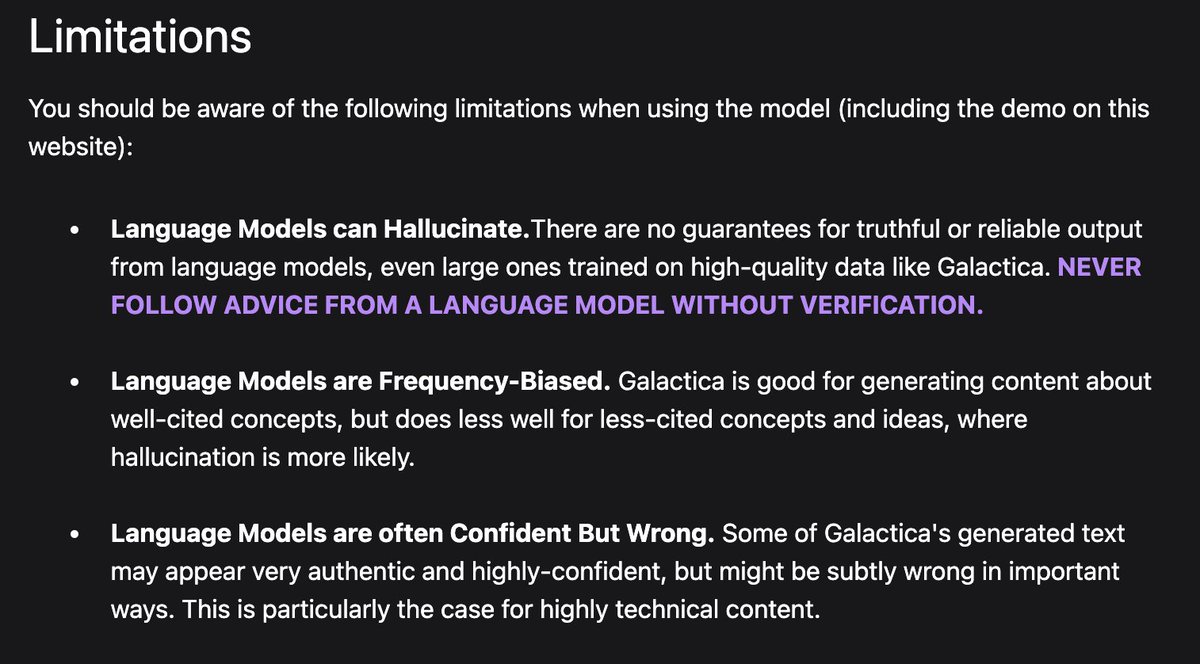

6- As a bare minimum baseline, why would you use a tool that has not been reliably evaluated for the purpose you intend to use it for (or for any related purpose, for that matter)?

/fin

p.s.: How did I forget to mention:

7- As a second bare minimum baseline, why would you use a trained model with no transparency into its training data?

https://twitter.com/emilymbender/status/1600657642518876160?s=20&t=BEat48yQle9TiSRE2co5Hw
