If you're in the market for sculpture about the singularity and the apocalypse, I re-listed work from my 2020 solo show in NYC, Future Tense

opensea.io/collection/fut…

These serve as digital provenance for physical artworks. My gallerist can ship the art to you or keep it in storage

A003 (Third Nature) is one of four hard-anodized CNC aluminum ikebana vases. Ikebana is a way of bringing the spirits of nature into your home that's both symbolic and spiritual. Here a third nature, technology as organism, forms a triad with humanity and nature.

An extinction symbol made of #MOLLE nylon webbing, a military equipment fabric pattern that's popular in civilian militia and prepper culture. It's partially about general civil unrest, and how we may want to treat ecological collapse as a national security issue

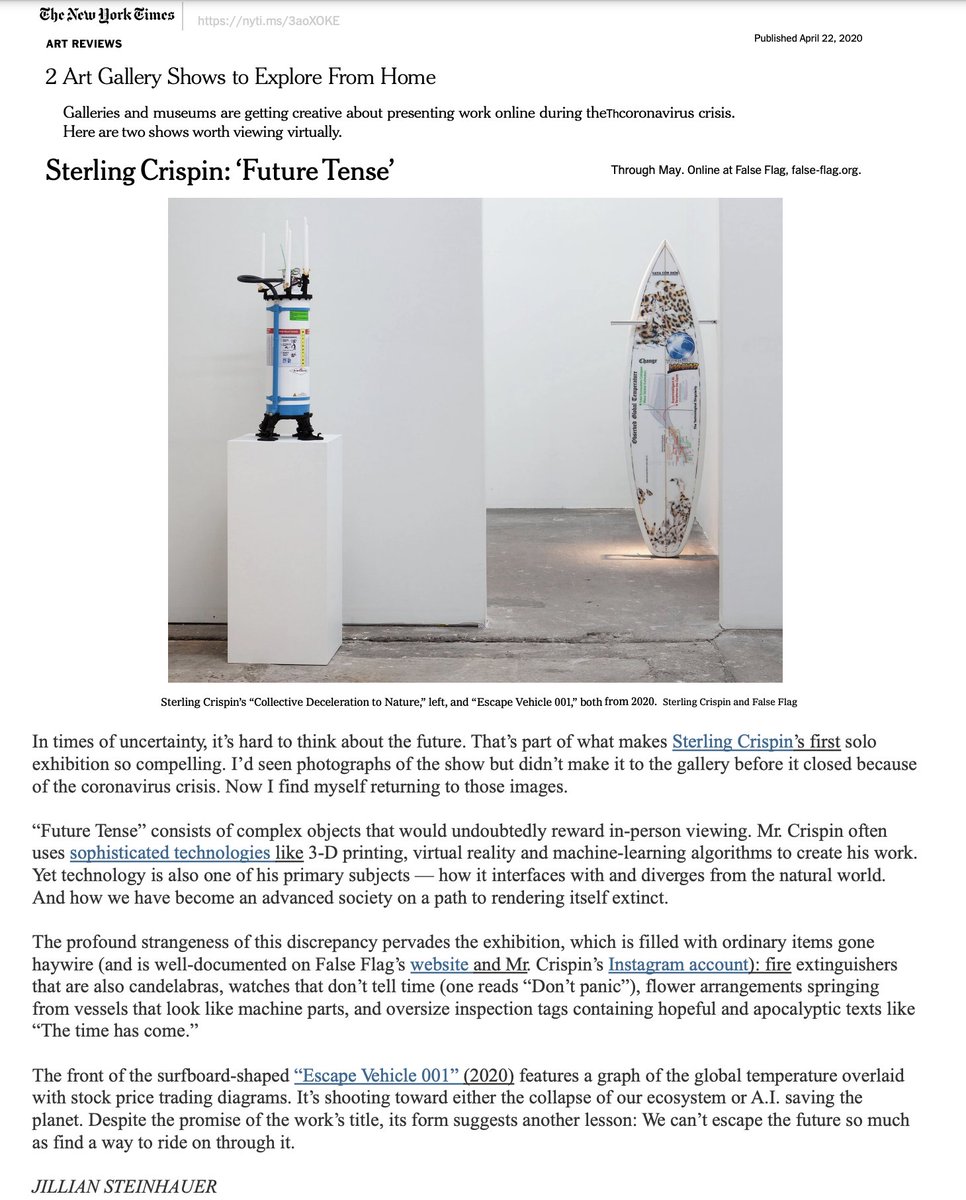

This piece is one of three fire extinguisher candelabras, which reference two dualities: 'collectivism vs authoritarianism' and 'acceleration of technology vs deceleration to nature'. These forces push against one another and shape our world as they find balance

The bases reference feet quelling demons, a common theme in religious iconography globally, which typically represents our higher selves vanquishing our ignorance, misdeeds, and inner demons. The small ornate plants were generated with software I wrote, 3D printed, and cast in gold

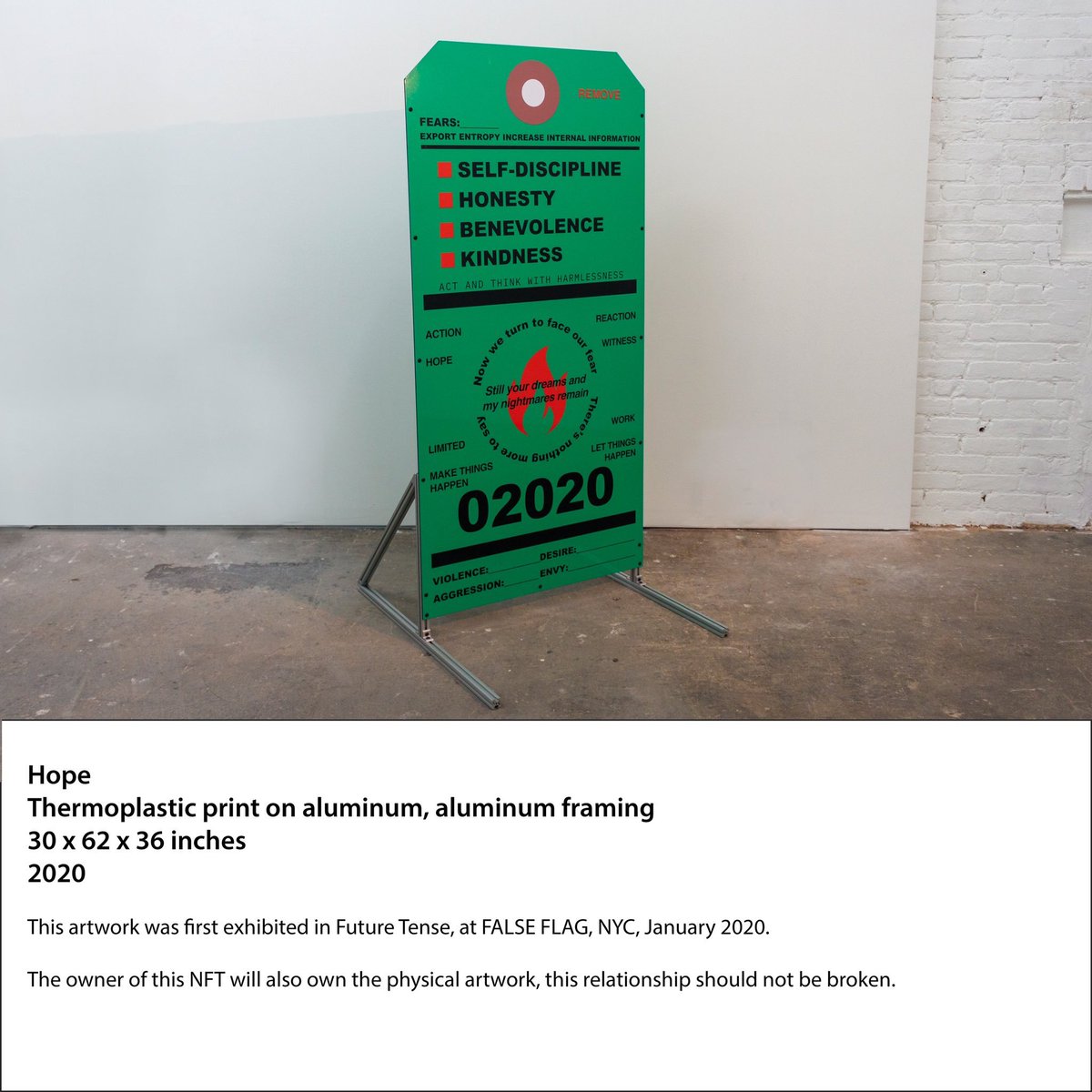

These sculptures are based on fire extinguisher safety inspection tags. At the time, in late 2019, I wanted them all to reference 2020, but looking back, their meaning has changed so much.

This imagery is from the infamous SoftBank vision deck, a fever dream of manic investment capital fantasies about extreme futures. Will we transmit our emotions to each other’s brains or get wiped out by a global virus or meteor?
