Just saw #AvatarTheWayOfWater! A visual spectacle and technical marvel

Through working on @unity's acquisition of @wetadigital, I got to see the tools responsible for bringing this story to life in action and meet the PhDs behind them

How Pandora made it to our screens👇

1/ People always ask why Avatar 2 took so long to produce: It took over a decade of R&D for the tech to be up to Cameron's standards to depict Way of Water

Weta Digital (Cameron's go-to VFX / innovation partner) has long been lauded for pushing the bleeding edge of 3D graphics

2/ LoTR -> Invented performance capture techniques for Gollum; crowd simulation for the Battle of Helm's Deep

King Kong -> Rendering fur (collision of 5M hair strands)

Planet of the Apes -> First CGI lead protagonist in a film; simulation of forestry evolution

The list goes on...

3/ Avatar 1 ALSO took a decade: Cameron actually wrote the script for Avatar in '94 (pre-Titanic) but sat on it until the tech was ready

The team evolved facial performance cap., intro'ng a helmet cam w/ a camera mounted inches from the actor's face

https://twitter.com/PonniyinSelvanI/status/1603810967292256256

4/ Avatar 1 was one of the earliest films to utilize Virtual Production for pre-visualization, i.e. using a game engine/real-time rendering for Cameron to block out scenes and find the right camera angles using a "virtual camera," moving around low-poly assets in the viewport

5/ With Avatar 2, lots of Weta's existing tech needed to be overhauled to achieve the photorealism that Jim Cameron demanded for his vision

In the Way of Water, 2,225 out of 3,240 shots in the film involve... water

6/ Simulating fluid dynamics is one of the most challenging feats in computer graphics: modeling the laws of hydrodynamics and then rendering photo-realistically

7/ VFX Sup Eric Saindon stated in an interview: you need to simulate “the flow of waves on the ocean, interacting with characters, with environments, the thin film of water that runs down the skin, the way hair behaves when it’s wet, the index of refraction of light underwater”
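
To make the "index of refraction" part concrete, here's a minimal sketch of Snell's law, the textbook rule for how a light ray bends when it crosses from air into water. This is NOT Weta's renderer code. The indices (1.000 for air, 1.333 for water) are standard approximate values I'm assuming, and the function name is made up for illustration.

```python
import math

# Approximate textbook refractive indices (assumptions, not from the thread).
N_AIR = 1.000
N_WATER = 1.333


def refraction_angle_deg(incidence_deg, n1=N_AIR, n2=N_WATER):
    """Hypothetical helper: refracted angle in degrees from Snell's law.

    Snell's law: n1 * sin(theta1) = n2 * sin(theta2).
    Returns None when no refracted ray exists (total internal reflection).
    """
    s = (n1 / n2) * math.sin(math.radians(incidence_deg))
    if abs(s) > 1.0:
        return None  # the ray reflects back instead of crossing the surface
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # Air -> water: the ray bends toward the surface normal.
    for angle in (0, 30, 60):
        print(angle, refraction_angle_deg(angle))
    # Water -> air at a steep angle: total internal reflection.
    print(refraction_angle_deg(60, n1=N_WATER, n2=N_AIR))
```

A production renderer has to do far more than this (absorption, scattering, caustics), but every ray that crosses the water surface starts with this bend.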

8/ One of the patents Weta filed around this technological breakthrough:

“Methods of generating visual representations of a collision between an object and a fluid”

https://twitter.com/SirWrender/status/1525922182408175616?s=20&t=njmkJAJZWbFzOOVZr9dfJg

9/ Weta's tool Loki (a plug-in to Houdini) does the hard work

It offers a highly-parallelized distributed simulation framework for physics-based animation of fx incl. water but also combustion, hair, cloth, muscles, and plants

https://twitter.com/beforesmag/status/1605661388361592833?s=20&t=lgXDS4ivDcDmjDViomrNEw

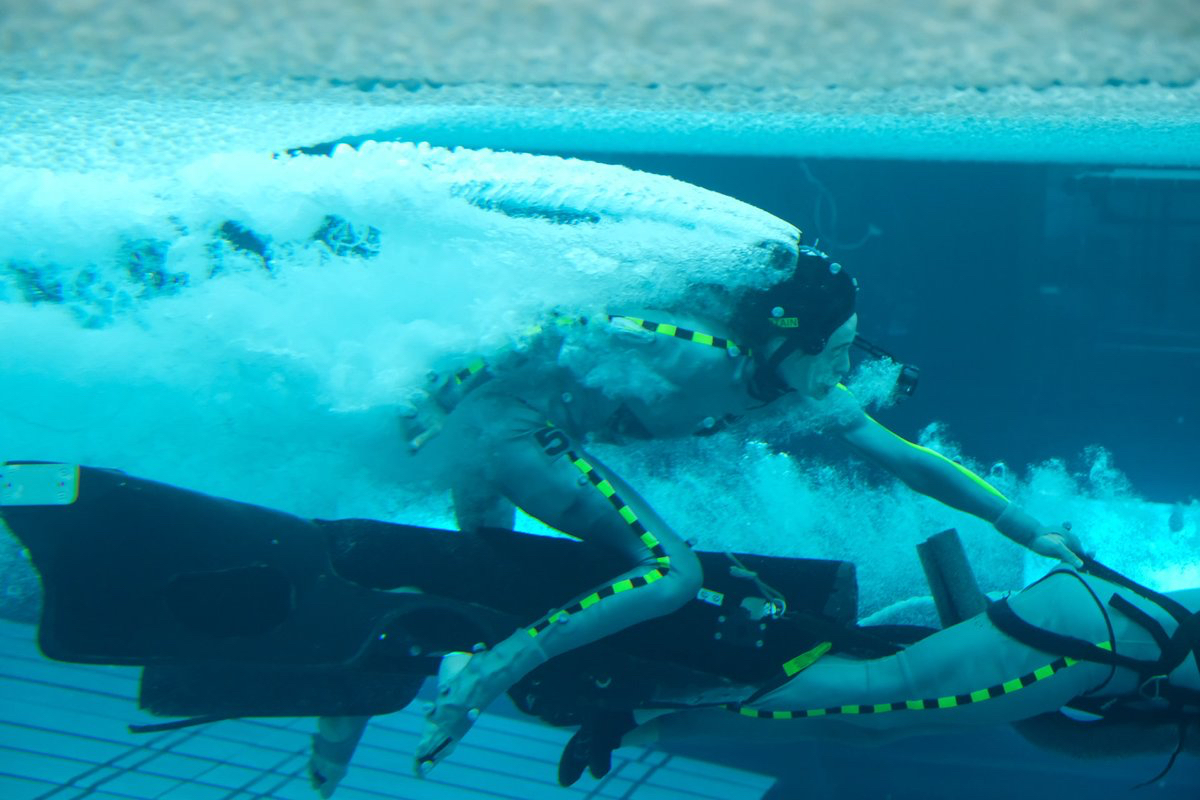

10/ Animating photorealistic digital characters is already really hard. Now try animating them while underwater!

The team needed to invent a new method of underwater performance capture to ensure realistic depiction

11/ Light interacts with water in a way that messes with the MoCap markers. The reflections created false markers that disrupted the performance capture cameras

To solve this problem, the surface of the 900,000-gallon tank was covered in white balls

12/ w/ the facial capture camera 5" in front of their face, the actors can’t use scuba gear...so they had to hold their breath...

The actors trained with champion free divers to extend their submergence time. Kate Winslet took the 👑 with 7 min+

https://twitter.com/avatar2news/status/1606620250833055744

13/ Most of the assets for the film had to be remodeled/textured from scratch for 4K (vs 2K for Avatar 1)

Total data stored for the film was 18.5 petabytes (!!) vs 1 PB for the OG

Hopefully in the future GenAI will enable upressing of legacy assets to make them evergreen

14/ Under the hood, new facial animation tech was developed to transfer the captured performance onto the digital avatar

More precise muscle simulation and skin deformation result in more lifelike and emotionally resonant characters on screen

dgp.toronto.edu/projects/anima…

15/ It's actually a near miracle that Avatar was released in 2022!

Given Weta's tools and data are (for now) on-prem / landlocked to the studio in Wellington vs cloud, had it not been for New Zealand's low Covid case count, there might have been another 1-2 year delay

16/ Computing complex 3D simulations and rendering out a single shot can take weeks and millions of processing hours. And there were ~3.5K+ shots that made it into the final film (each likely requiring multiple revisions)

Only 2 (!) of the shots don't contain visual effects

17/ While most shows are rendered from Weta's local N.Z. datacenter, Avatar 2 required tapping 3 AWS datacenters in Australia. Shots were still being rendered into the fall

Apparently fiber line outages over the summer between N.Z. & Australia nearly delayed the film release...

18/ Avatar 2 is also a reminder of how quality 3D native filmmaking (with special 3D cameras used in production and, in this case, usable underwater) displayed on massive IMAX screens creates an incredible level of immersion and depth

ymcinema.com/2022/10/24/the…

19/ The race after Avatar 1's success to retrofit movies to 3D in Post-Production in order to capture extra $ at the box office stained people's memory of how great a 3D movie can be when executed well from conception

It excites me for the future of linear storytelling in VR

Wrap/

Avatar 2 is an astonishingly beautiful technological breakthrough made possible by dozens of PhDs, 1,000+ dev-years of R&D and many thousands more artist-years to bring us back to Pandora, more vivid than any virtual world that has come before

https://twitter.com/officialavatar/status/1605978977327202305?s=20&t=DQUNXtOIfhpodQ62k7CWBA

Some sources and further reading here:

beforesandafters.com/2022/12/21/why…

studiobinder.com/blog/filming-a…

nytimes.com/2022/12/16/mov…

wellfixitinpost.com/avatar-2-the-w…

And here's a video outlining more of Weta's tools:

And here:
