The AI explosion is warping our sense of time. Can you believe Stable Diffusion is only 4 months old, and ChatGPT <4 weeks old 🤯? If you blink, you miss a whole new industry. Here are my TOP 10 AI spotlights, from a breathtaking 2022 in rewind ⏮: a long thread 🧵

2022’s AI landscape is dominated by a surge in huge generative models, which are rapidly making their way out of research labs and into real-world applications. 2 other emerging areas driven by LLM technology are decision-making agents (games, robotics, …) and AI4Science.

0/

I am very fortunate to work on these AI research frontiers with my wonderful colleagues @NVIDIAAI. In 2023, I’ll continue to do more exciting work myself and share hot ideas too - feel free to follow me @DrJimFan! 🙌

For each of the 10 spotlights, there may be multiple works:

0/

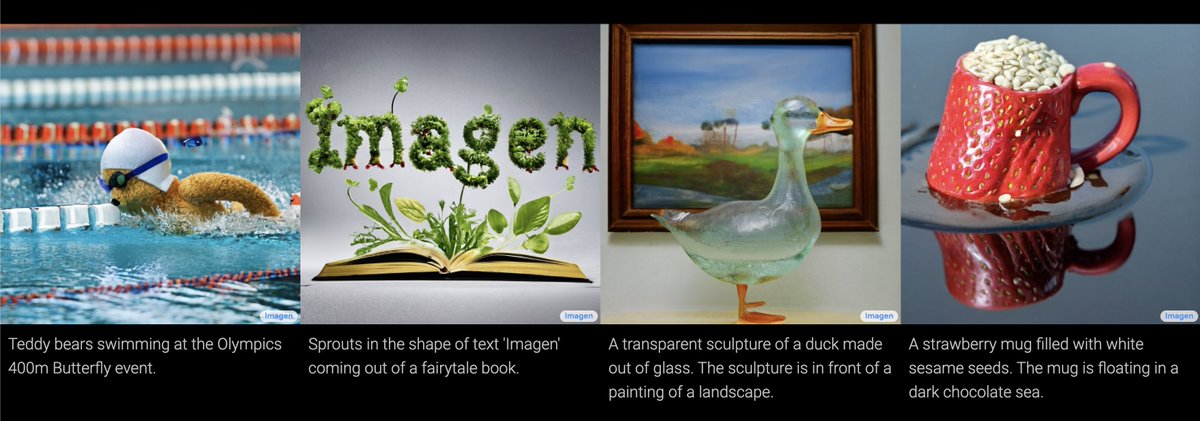

🎉No. 1: Text -> Image

DALLE-2 was the first large-scale diffusion model that can generate realistic, high-res images from an arbitrary caption. It kickstarted the AI4art revolution that spawned many new applications, startups, and ways of thinking.

openai.com/dall-e-2/

1.1/

But DALLE-2 is behind OpenAI’s walled garden. @StabilityAI, LMU, and @runwayml took the heroic step to train their own internet-scale text2image model, based on the “latent diffusion” algorithm. They called the model “Stable Diffusion”, and open-sourced the code & weights.

1.2/

Open access to Stable Diffusion has proven to be a huge game changer. Numerous startups and research labs have built upon SD to create novel apps, and SD itself keeps improving thanks to the open-source community. SD recently hit v2.1 and runs on a single GPU now!

1.3/

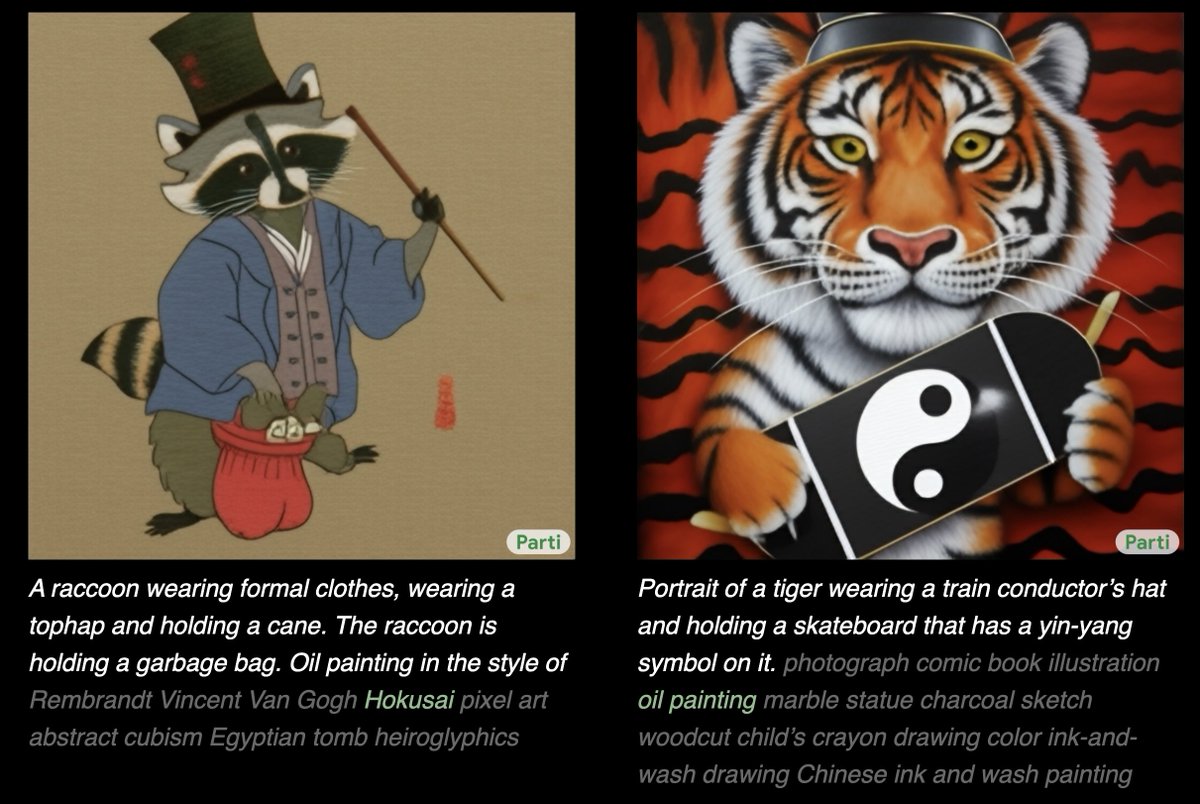

There were 2 other text2image models from @GoogleAI this year. Neither team released the model or an API to play with, but the papers still had interesting insights.

1. Imagen: imagen.research.google

2. Parti: parti.research.google. A transformer model without diffusion!

1.4/

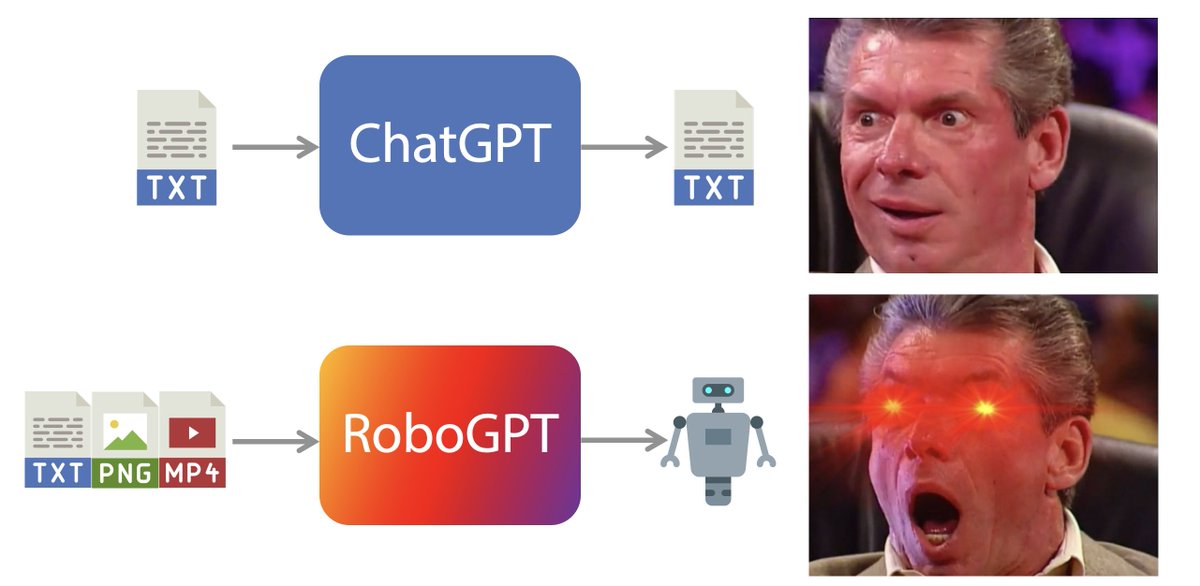

🎉No. 2: Text -> Text

Well, that’s an easy guess - ChatGPT! The only app in history that gained 1 million users in 5 days.

ChatGPT has brought out our human creativity as well. Check out this list of useful & imaginative ChatGPT ideas: github.com/f/awesome-chat…

2.1/

ChatGPT & GPT-3.5 both use a technique called RLHF (“Reinforcement Learning from Human Feedback”). Its profound implication is that prompt engineering will disappear very soon. See my deep dive 🧵 on this topic:

2.2/

https://twitter.com/drjimfan/status/1600884299435167745

ChatGPT’s popularity spawned a wave of new startups and competitors, notably Jasper Chat, YouChat, @Replit Ghostwriter chat, and @perplexity_ai. Some of them offer much more intuitive ways to do search, so Google execs are getting sweaty! @goodside has a nice thread:

2.3/

https://twitter.com/goodside/status/1606611869661384706

🎉No. 3: Text -> Robot🤖

How do we give GPT arms and legs so it can clean up your messy kitchen? Unlike NLP models, robot models need to interact with the physical world. This year, big pre-trained transformers are finally starting to tackle the hardest problems in robotics!

3.1/

In October, my coauthors and I took a step towards building a “Robot GPT” that takes in any *mixture* of text, images, and videos as prompt, and outputs robot motor control! Our model is called VIMA (“VisuoMotor Attention”) and has been fully open-sourced 🧵:

3.2/

https://twitter.com/drjimfan/status/1578433493561769984

Along a similar path as VIMA, researchers from @GoogleAI announced RT-1, a robot transformer trained on 700 tasks and 130K human demonstrations. These data were collected by a literal *Iron Fleet* - 13 robots over 17 months!

I also wrote a deep dive 🧵 for RT-1:

3.3/

https://twitter.com/DrJimFan/status/1602776866380578816

🎉No. 4: Text -> Video

Video is just an array of images bundled together over time, creating the illusion of motion. If we can do text2image, then why not throw in the time axis for some extra fun?

There are 3 (!!) big works in this area, but none of them are open-source 😢

4.1/
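To make the "images + time axis" idea concrete, here is a minimal Python sketch. It assumes grayscale frames stored as nested lists of ints, and the helpers (`blank_frame`, `moving_dot_video`, `shape`) are hypothetical, not taken from any of the models in this thread. A video is just image frames stacked along a new leading time axis, and changing the pixels from frame to frame is what creates the illusion of motion.

```python
# Minimal sketch: a "video" is a stack of image frames with an extra leading time axis.
# Assumptions (not from the thread): grayscale frames as nested lists of ints (0-255),
# and hypothetical helper functions used purely for illustration.

def blank_frame(height: int, width: int) -> list[list[int]]:
    """One grayscale image: height x width pixels, all black (0)."""
    return [[0] * width for _ in range(height)]

def moving_dot_video(num_frames: int, height: int, width: int) -> list[list[list[int]]]:
    """Stack frames along a time axis; a white dot shifts one column per frame."""
    video = []
    for t in range(num_frames):
        frame = blank_frame(height, width)
        frame[height // 2][t % width] = 255  # the dot's position depends on time t
        video.append(frame)
    return video

def shape(video: list[list[list[int]]]) -> tuple[int, int, int]:
    """(T, H, W): the time axis first, then the usual image axes."""
    return (len(video), len(video[0]), len(video[0][0]))

video = moving_dot_video(num_frames=5, height=3, width=8)
print(shape(video))  # (5, 3, 8)

# Column of the dot in each frame: played in sequence, this reads as motion.
print([row.index(255) for frame in video for row in frame if 255 in row])  # [0, 1, 2, 3, 4]
```

Real text2video models work on far larger arrays (typically with color channels too), but conceptually they add the same extra time axis on top of text2image.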

Make-A-Video: Text-to-Video Generation without Text-Video Data. From @MetaAI.

You can sign up for trial access here: makeavideo.studio

Paper: arxiv.org/abs/2209.14792

4.2/

https://twitter.com/deviparikh/status/1575506287009226752

Imagen Video: High Definition Video Generation with Diffusion Models. From @GoogleAI - a natural follow-up to the Imagen static image generator.

Demos: imagen.research.google/video/

Paper: arxiv.org/abs/2210.02303

4.3/

Phenaki: Variable Length Video Generation From Open Domain Textual Description. Also from @GoogleAI

Demos: phenaki.video

Paper: arxiv.org/abs/2210.02399

4.4/

🎉No. 5: Text -> 3D

From designing innovative products to creating stunning visual effects in movies & games, 3D modeling will be the next step in the creative AI field to materialize ideas from text. 2022 has seen a few primitive but promising 3D generative models!

5.1/

DreamFusion: Text-to-3D using 2D Diffusion. From @GoogleAI. Based on the NeRF algorithm, the 3D model generated from a given text can be viewed from any angle, relit by arbitrary illumination, or composited into any 3D environment.

Project site: dreamfusion3d.github.io

5.2/

2 works from my colleagues @NVIDIAAI:

👉 GET3D: A Generative Model of High Quality 3D Textured Shapes Learned from Images. nv-tlabs.github.io/GET3D/

👉 Magic3D: High-Resolution Text-to-3D Content Creation. deepimagination.cc/Magic3D/

5.3/

Point-E: A System for Generating 3D Point Clouds from Complex Prompts. By @OpenAI, a preliminary version of 3D DALLE!

Paper: arxiv.org/abs/2212.08751

5.4/

https://twitter.com/drjimfan/status/1605175485897625602

🎉No. 6: AI can now play Minecraft!

The game is a perfect testbed for general intelligence because (1) it is infinitely open-ended & creative; (2) it’s played by 140M people - twice the UK’s population - making it a treasure trove of human data!

Can AI be as imaginative as we are?

6.1/

My coauthors and I developed the first Minecraft AI that can solve many tasks given a *natural language prompt*. Our ultimate goal is to build an “Embodied ChatGPT”. We’ve fully open-sourced our development platform “MineDojo”. Deep dive 🧵 on our NeurIPS Outstanding Paper:

6.2/

https://twitter.com/DrJimFan/status/1595459499732926464

Concurrently, @jeffclune’s team also announced a model called VPT (“Video Pre-Training”) that directly outputs keyboard and mouse actions. It can solve longer-horizon tasks, but is not language-conditioned. MineDojo & VPT complement each other!

openai.com/blog/vpt/

6.3/

🎉No. 7: AI learns to negotiate!

CICERO @MetaAI is the first AI agent to achieve human-level performance in Diplomacy, a strategy game that requires extensive natural language negotiation to cooperate & compete with humans. AI can now persuade and bluff effectively 😮!

7.1/

https://twitter.com/polynoamial/status/1595076658805248000

Concurrently, @DeepMind also announced their Diplomacy-playing agent. What would happen if CICERO played DeepMind’s AI? 🤔

dpmd.ai/diplomacy-natu…

7.2/

🎉No. 8: Audio -> Text

OpenAI Whisper is a large Transformer that approaches human-level robustness & accuracy on English speech recognition. It’s trained on 680,000 hours of audio data from the web! Will Whisper unlock more text tokens to feed GPT-4? 8/

openai.com/blog/whisper/

🎉No. 9: Nuclear Fusion control☢️

@DeepMind & @EPFL developed the first deep reinforcement learning system that can keep nuclear fusion plasma stable inside a tokamak (a device that uses a powerful magnetic field to confine plasma in the shape of a torus).

nature.com/articles/s4158…

9.1/

Also this month, the U.S. Department of Energy announced a huge breakthrough: a fusion experiment produced more energy than the laser energy delivered to start the reaction! It is the first time humankind has achieved this landmark. We may become a fusion-powered civilization in this lifetime!

9.2/

🎉No. 10: Biology Transformers🧬

AlphaFold (2021) was the first model to predict the 3D structure of a protein accurately. In July, DeepMind announced the “protein universe” - expanding AlphaFold’s protein database to 200M structures! What a treasure chest for science!

10.1/

@NVIDIAAI also expanded the BioNeMo LLM framework to help biotech companies and researchers generate, predict, and understand biomolecular data.

blogs.nvidia.com/blog/2022/09/2…

10.2/

This concludes our whirlwind tour of the top 10 AI highlights of 2022! There are countless other exciting works that contributed to these advancements, too many to fit in a 🧵. I believe every paper is a brick in the cathedral of AI, and all the efforts should be celebrated🥳. 11/

Parting thought: as AI systems are growing ever more powerful, it is crucial that we remain aware of the potential dangers & risks and take steps to mitigate them, whether through careful training design, proper deployment oversight, or novel safeguard approaches. 12/

Here's to a year filled with so many moments that took our breath away! 🥂🍻🍾

Happy holidays everyone. Follow me for more deep dives in 2023 🙌

END/🧵
