How to get URL link on X (Twitter) App

GR00T N1 kickstarted our open-source initiative to provide a frontier foundation for the entire robotics ecosystem to build on: https://x.com/DrJimFan/status/1902117478496616642?s=20

https://x.com/yukez/status/1795477675739357679

Here's a highlight thread on the exciting research that we spearheaded! https://x.com/DrJimFan/status/1715397393842401440?s=20

https://x.com/zipengfu/status/1742602881390477771?s=20

Sharing appetizers with my distinguished guests: here are my team's research highlights! https://x.com/DrJimFan/status/1662115266933972993?s=20

"An adorable minion holding a sign that says "It's over, MidJourney", spelled exactly, 3d render, typography"

Noam Brown's announcement @polynoamial: https://twitter.com/polynoamial/status/1676971503261454340?s=20

No-gradient architecture is the future for decision-making agents. LLM acts as a "prefrontal cortex" that orchestrates lower-level control APIs via code generation. Voyager takes the first step in Minecraft. @karpathy says it best, as always: https://twitter.com/karpathy/status/1662160997451431936?s=20

https://twitter.com/DrJimFan/status/1595459499732926464?s=20

NVIDIA AI Foundation, a new initiative that Jensen announced in March: https://twitter.com/DrJimFan/status/1638211601944948736?s=20

MEGABYTE from Meta AI, a multi-resolution Transformer that operates directly on raw bytes. This signals the beginning of the end of tokenization. https://twitter.com/karpathy/status/1657949234535211009?s=20

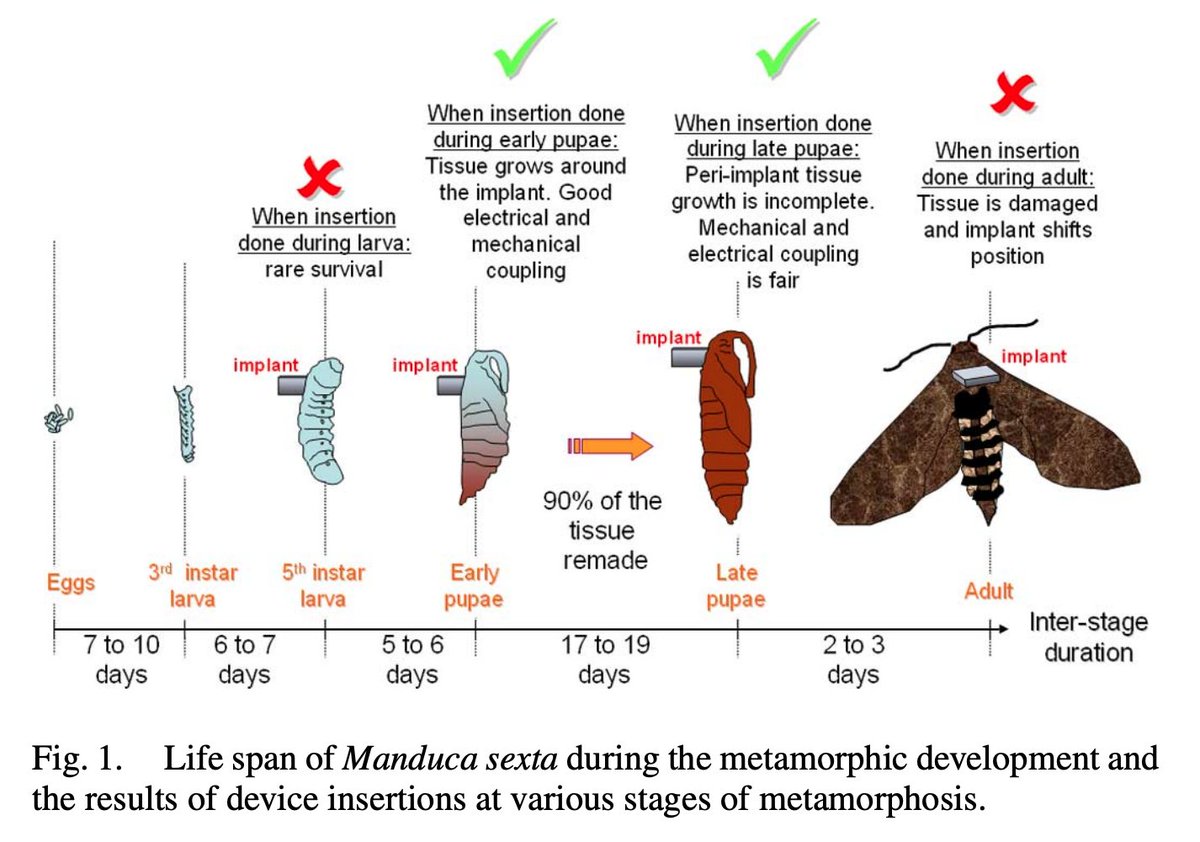

Paper: Insect–Machine Interface Based Neurocybernetics.

If you only have 1 seat to follow in AI Twitter, don't give that seat to me. Give it to @karpathy. https://twitter.com/karpathy/status/1654892810590650376?s=20