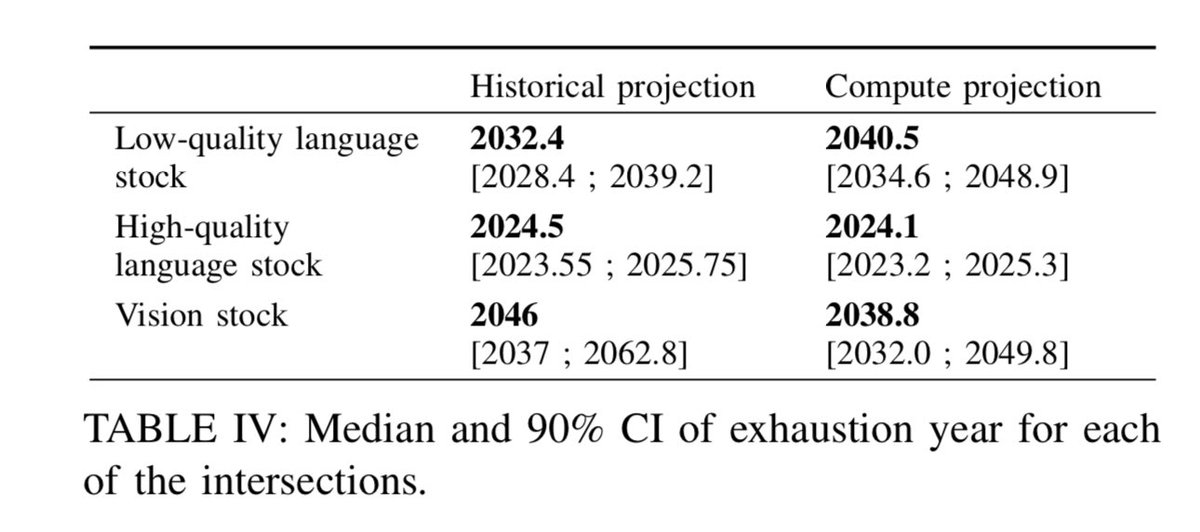

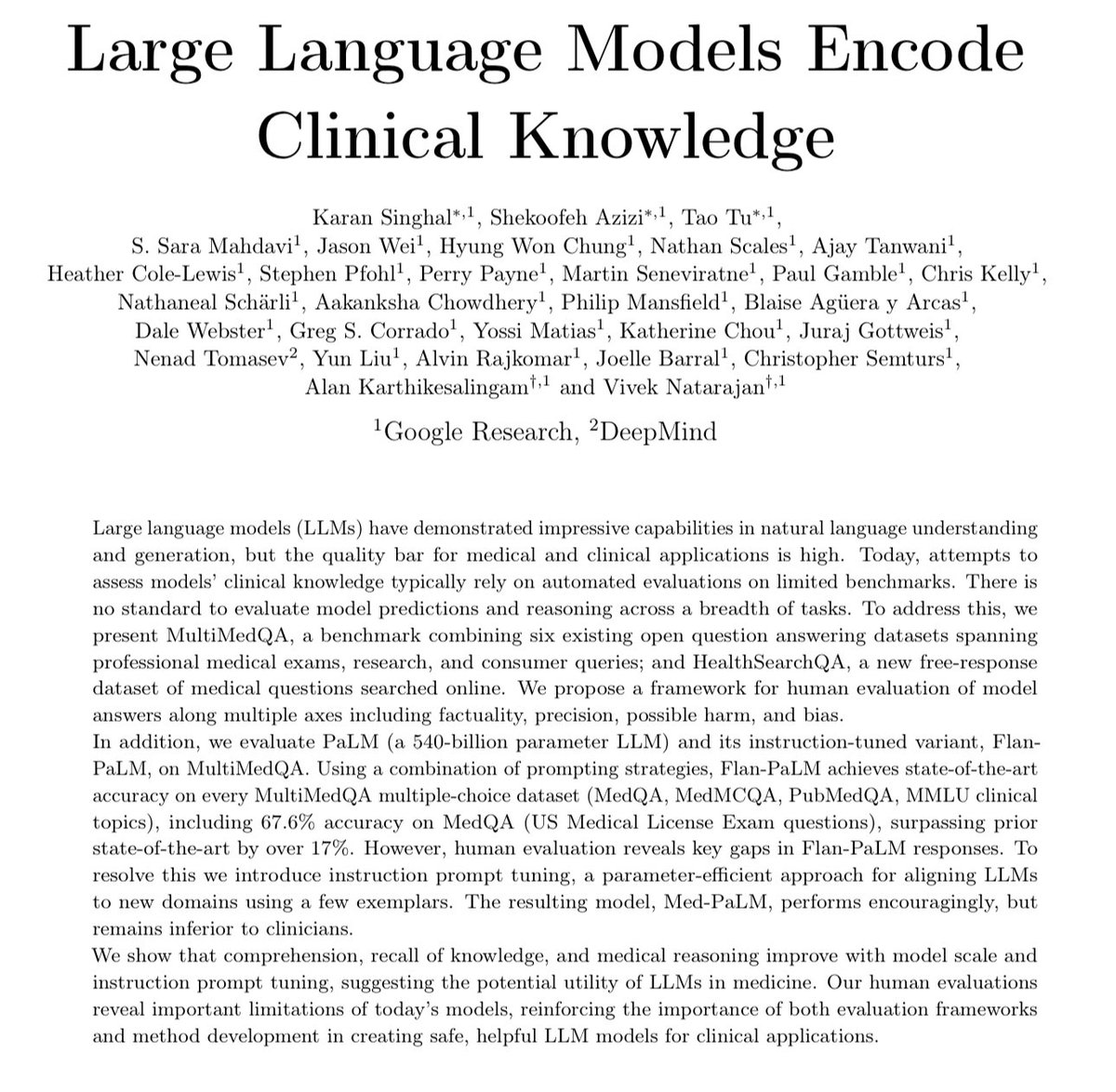

Extraordinary new paper from Google on medicine & AI: When Google tuned an AI chatbot to answer common medical questions, doctors judged 92.6% of its answers right … compared to 92.9% of answers given by other doctors.

And look at the pace of improvement! arxiv.org/pdf/2212.13138…

Doctors also rated the likelihood and extent of the harm that came from giving the wrong answers.

The percentage of potentially harmful advice from the trained chatbot (Med-PaLM) was rated essentially the same as the percentage of potentially harmful advice provided by real doctors!

Also, to be clear, there are lots of caveats in the paper, and the system is nowhere close to replacing doctors.

But the rate of improvement is fast, and there is a lot of potential for AI to work with doctors to improve their own diagnoses.

https://twitter.com/emollick/status/1499972354696486919
