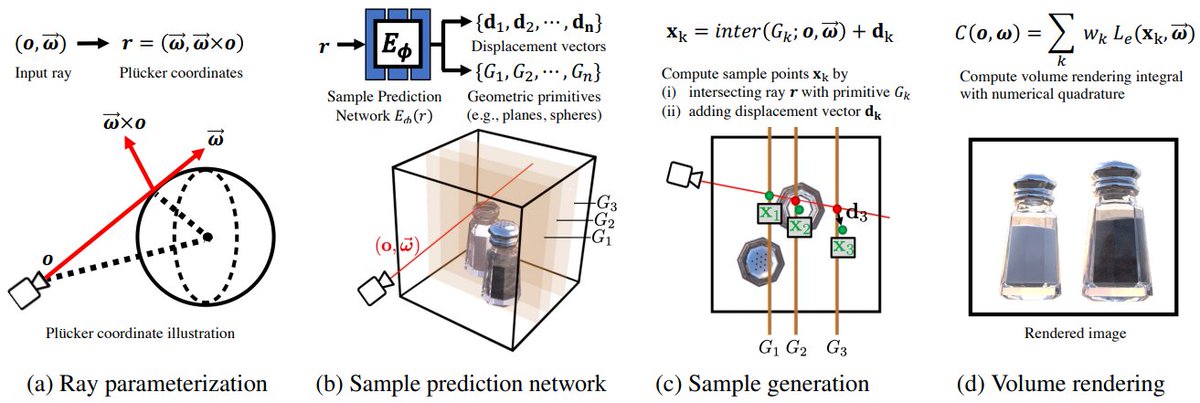

How to make ChatGPT 100x better at solving math, science, and engineering problems for real?

Teach it to use the Wolfram language.

ChatGPT: the best neural reasoning engine.

Mathematica: the best symbolic reasoning engine.

I can’t think of a happier marriage. 🧵 with example:

Teach it to use the Wolfram language.

ChatGPT: the best neural reasoning engine.

Mathematica: the best symbolic reasoning engine.

I can’t think of a happier marriage. 🧵 with example:

Example question: what is the determinant of a 5 by 5 matrix with "a" on the diagonal and "b" everywhere else? Not a difficult one for any undergrad student. ChatGPT is very confidently *wrong* here, generating BS reasoning:

1/

1/

Let’s give the exact same question to Wolfram Alpha, an online natural language interface to scientific computing. It completely fails to understand the question and answers “-12” 🤣. Even more hilarious than ChatGPT.

2/

2/

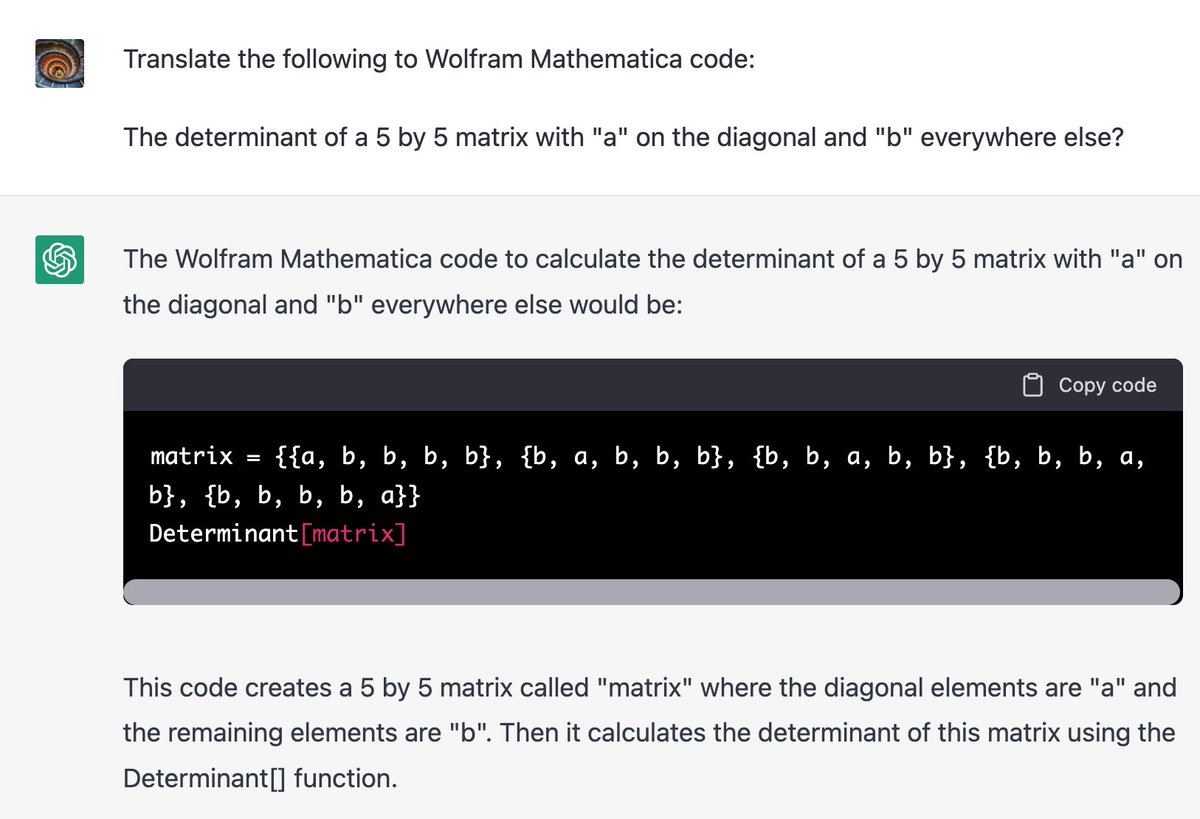

Now add a magic incantation “Translate the following to Wolfram Mathematica code:” to ChatGPT’s prompt. Instead of trying to solve the problem through neural mental math (synonym as “disaster”), it now outputs the correct code and reasons through it. Pretty impressive:

3/

3/

Transfer the code to a Mathematica cloud notebook. The code runs smoothly and gives you the precise & correct symbolic calculation result. It even draws a pretty 3D figure as a bonus!

4/

4/

This example is cherry-picked 🍒. ChatGPT doesn’t know the Mathematica language very well, so the generated code is not reliable. If we have access to the model, we can finetune it to be a lot better at using the symbolic engine.

5/

5/

I believe this is one of the most promising paths forward for neurosymbolic systems. We shouldn’t reinvent the wheels of decades of work that goes into very effective solvers like Mathematica. It’s such a low-hanging fruit. @GaryMarcus

6/

6/

@GaryMarcus I came up with this idea independently, then I did a Google search and - well, Stephen Wolfram himself beat me to it 🤔. So here you go, his blog post on combining ChatGPT & Mathematica @stephen_wolfram:

writings.stephenwolfram.com/2023/01/wolfra…

I’ll share more ideas in the future 🙌

END/🧵

writings.stephenwolfram.com/2023/01/wolfra…

I’ll share more ideas in the future 🙌

END/🧵

• • •

Missing some Tweet in this thread? You can try to

force a refresh