I have successfully compiled and run GLM-130b on a local machine! It's now running in `int4` quantization mode and answering my queries.

I'll explain the installation below; if you have any questions, feel free to ask!

github.com/THUDM/GLM-130B

I'll explain the installation below; if you have any questions, feel free to ask!

github.com/THUDM/GLM-130B

130B parameters on 4x 3090s is impressive. GPT-3 for reference is 175B parameters, but it's possible that it's over capacity for the data & compute it was trained on...

I feel like a #mlops hacker having got this to work! (Though it should be much easier than it was.)

I feel like a #mlops hacker having got this to work! (Though it should be much easier than it was.)

To get GLM to work, the hardest part was CMake from the FasterTransformer fork. I'm not a fan of CMake, I don't think anyone is.

I had to install cudnn libraries manually into my conda environment, then hack CMakeCache.txt to point to those...

I had to install cudnn libraries manually into my conda environment, then hack CMakeCache.txt to point to those...

Even then the command-line to compile didn't work, so I edited it manually to actually compile. Haven't really used C++ seriously since I left #gamedev, but muscle memory is there...

github.com/thudm/fastertr…

github.com/thudm/fastertr…

The code from the repository doesn't compile:

- There are includes to "stdio.h" missing in many places; likely I have a different linux setup.

- One : namespace character is missing in a std:vector def.

- There are includes to "stdio.h" missing in many places; likely I have a different linux setup.

- One : namespace character is missing in a std:vector def.

You then have to do a couple things:

- Sign-up to get the full GLM weights, 260Gb downloads quite fast.

- Convert the model to int4 with convert(.)py, check it's actually 4x smaller.

- Edit config/model_glm_130b_int4.sh to change path & add --from-quantized-checkpoint.

- Sign-up to get the full GLM weights, 260Gb downloads quite fast.

- Convert the model to int4 with convert(.)py, check it's actually 4x smaller.

- Edit config/model_glm_130b_int4.sh to change path & add --from-quantized-checkpoint.

Then to do inference:

- Call `bash scripts/generate.sh` to get it working.

- If any dependency went wrong, you'll find out here.

- It installed the wrong `apex` by default, I had to do it manually.

- Install that setup(.)py install --cuda_ext and --cpp_ext

github.com/NVIDIA/apex

- Call `bash scripts/generate.sh` to get it working.

- If any dependency went wrong, you'll find out here.

- It installed the wrong `apex` by default, I had to do it manually.

- Install that setup(.)py install --cuda_ext and --cpp_ext

github.com/NVIDIA/apex

From there, it takes 472.4s to load the model, but it's not on a SSD yet. (I downloaded the files to a TB archive disk, and should move the quantized model now.)

Then you can type queries...

Then you can type queries...

Quantization to int4 does hurt performance, but less than I would have thought. I think only the weights are quantized and the inference is float16... need to check the paper again.

https://twitter.com/francoisfleuret/status/1617157480802582530

Inference takes about 65s to 70s for a single query. Results seem fine in general chat/trivia, but honestly I'm more interested in some of the specialized abilities... need to figure out prompts for those!

When I say "seems fine" it basically hallucinates like all other models... so useless in production.

(e.g. OpenAI Five was not open-source, climate change and poverty not a focus for OpenAI, etc.)

(e.g. OpenAI Five was not open-source, climate change and poverty not a focus for OpenAI, etc.)

If you'd like me to try a prompt, let me know!

(Disclaimer: It doesn't seem to be on the same level as GPT-3, but it's an important step for less centralized LLMs.)

(Disclaimer: It doesn't seem to be on the same level as GPT-3, but it's an important step for less centralized LLMs.)

To reduce latency, I think more GPUs definitely help if that's an option.

From what I've seen & read, there appears to be model parallelism as GPU utilization is pretty good — not perfect but consistent 91%.

From what I've seen & read, there appears to be model parallelism as GPU utilization is pretty good — not perfect but consistent 91%.

https://twitter.com/IntuitMachine/status/1617166555166507013

The rig I'm using is a 4-slot setup with 3090s. Originally had some trouble powering it because they sold me a 1000W power unit and it needed to be 2000W to be comfortable with full utilization and other power spikes.

timdettmers.com/2023/01/16/whi…

timdettmers.com/2023/01/16/whi…

Figuring out the power usage and cost, seems to be around 1 cent of EUR per query, assuming 1 minute per query at near full utilization and current electricity prices. (Probably over estimate, but not including any hardware costs.)

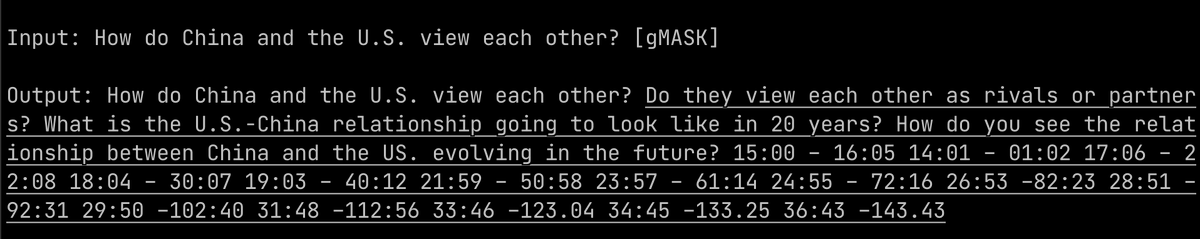

Input by @leonard_ritter from Mastodon:

> transform the lyrics of "ice ice baby" by vanilla ice to a poem written in the renaissance period

Outputs exact same input again. I'm not sure how to prompt it, and what tasks it knows. Prompting is a dark art!

> transform the lyrics of "ice ice baby" by vanilla ice to a poem written in the renaissance period

Outputs exact same input again. I'm not sure how to prompt it, and what tasks it knows. Prompting is a dark art!

https://twitter.com/alexjc/status/1616798549735260162

If you're on a continent where electricity prices have not been impacted by sanctions, it'd be much cheaper than 1 cent per query. Thanks for the calculations, @lovetheusers!

https://twitter.com/lovetheusers/status/1617226680828846082

Struggling to find use cases for this except the int4 quantization technology demo aspect. If you have any ideas for what GLM could be useful for, let me know...

(Willing to do a few more queries to find out before giving up.)

(Willing to do a few more queries to find out before giving up.)

I've tried about 10 different prompts and most are nonsense. Lots of repetition, I think contrastive search would help a lot in the generation... (Not worth pursuing otherwise.)

It likes to repeat questions, and then degenerates.

It likes to repeat questions, and then degenerates.

I can't type in line breaks with their default console, but here's the query and response: (got it wrong)

> Mountain A is 5 feet, Mountain B is 20 inches, Mountain C is 1 feet tall. Question: Which is the tallest? Answer:

> Mountain A is 5 feet, Mountain B is 20 inches, Mountain C is 1 feet tall. Question: Which is the tallest? Answer:

https://twitter.com/sir_deenicus/status/1617242689497452546

Why are models trained like FlanT5 (on many different prompts) useful as bots, whereas larger models like GLM seem to be only statistical models of language?

Maybe all models need to be trained on a variety of specific tasks to understand what's expected.

Maybe all models need to be trained on a variety of specific tasks to understand what's expected.

https://twitter.com/sir_deenicus/status/1617244133688762368

Maybe the pretraining dataset that Google uses (C4), even ignoring the specialized tasks used by Flan, is better than the one used to train GLM?

Even as a statistical model of language it doesn't seem very good and quickly degenerates. I wonder if that's because of int4?

Even as a statistical model of language it doesn't seem very good and quickly degenerates. I wonder if that's because of int4?

Assuming int4 is only slightly worse than int8 or float16, my conclusion is that how you train your model is so much more important than its size...

But since code hogs 3/4 of the GPUs at 100% when not running queries, I'm turning GLM off for tonight.

Send queries for tomorrow!

But since code hogs 3/4 of the GPUs at 100% when not running queries, I'm turning GLM off for tonight.

Send queries for tomorrow!

Since it's a dual language Chinese / English model, if you have any Chinese language questions, I can test those too...

@Ted_Underwood on Mastodon: "I’m just glad to know 130B is doable; that’s the main thing."

Yes! A clear goal for anyone building local privacy-centric LLMs could be to train a FlanT5 style model that can run quantized at int4.

You could fit 30B parameters per 24Gb GPU.

Yes! A clear goal for anyone building local privacy-centric LLMs could be to train a FlanT5 style model that can run quantized at int4.

You could fit 30B parameters per 24Gb GPU.

By default I just found it's got NUM_BEAMS=4, which explains why the execution time didn't seem to be proportional to the number of tokens in the output.

I'll try TOP_P and TOP_K tomorrow, those are likely going to be faster — let's see about the quality.

I'll try TOP_P and TOP_K tomorrow, those are likely going to be faster — let's see about the quality.

https://twitter.com/yar_vol/status/1617187144728023042

Success reducing latency and cost of #GLM130B by just RTFM! I set the number of beams in search to 1 and instead used top_k=8.

It now replies with short answers in ~15s. Long answers still same >60s.

That's about 13,9 tokens per second, and quarter of a cent per short query.

It now replies with short answers in ~15s. Long answers still same >60s.

That's about 13,9 tokens per second, and quarter of a cent per short query.

I can't tell how much quality has dropped with 1 beam, it still gets the advanced logic questions wrong.

However, it did manage to side step my trick question about the president of the U.K. First two sentences are the best so far, however... [1/2]

However, it did manage to side step my trick question about the president of the U.K. First two sentences are the best so far, however... [1/2]

After 400 characters it loses the train of thought. It asks random related questions and then starts to hallucinate facts.

Is the context too small? Has anyone studied the phenomenon of hallucinations paired with character count and context size? [2/2]

Is the context too small? Has anyone studied the phenomenon of hallucinations paired with character count and context size? [2/2]

It knows minimal Python code but I need to see if I can setup a Web UI, the console version removes line-breaks.

I believe GPT-3.5 and ChatGPT have a context of 4096, which would explain how it's so coherent in comparison...

I think I could theoretically fit a bigger context in the remaining memory on a local rig like this one.

I think I could theoretically fit a bigger context in the remaining memory on a local rig like this one.

https://twitter.com/3DTOPO/status/1617452587183374337

My take-away from this exercise:

GLM130b knows a lot as a text token model, but much of is knowledge seems hard to access. Significant work is needed to get it to perform as a chatbot, problem solver, etc.

Could be via clever prompting & search heuristics, or better training?

GLM130b knows a lot as a text token model, but much of is knowledge seems hard to access. Significant work is needed to get it to perform as a chatbot, problem solver, etc.

Could be via clever prompting & search heuristics, or better training?

• • •

Missing some Tweet in this thread? You can try to

force a refresh