For code language models, every token is a new chance to break a program. What if LLMs wrote code like people do, decomposing programs into solvable parts? It turns out they can solve competition-level coding problems by writing natural language programs in Parsel 🐍, beating the prior SoTA by >75%!

Parsel 🐍: A Unified Natural Language Framework for Algorithmic Reasoning

Work done w/ @qhwang3 @GabrielPoesia @noahdgoodman @nickhaber

Website [🕸️]: zelikman.me/parselpaper/

Paper [📜]: zelikman.me/parselpaper/pa…

Code [💻]: github.com/ezelikman/pars…


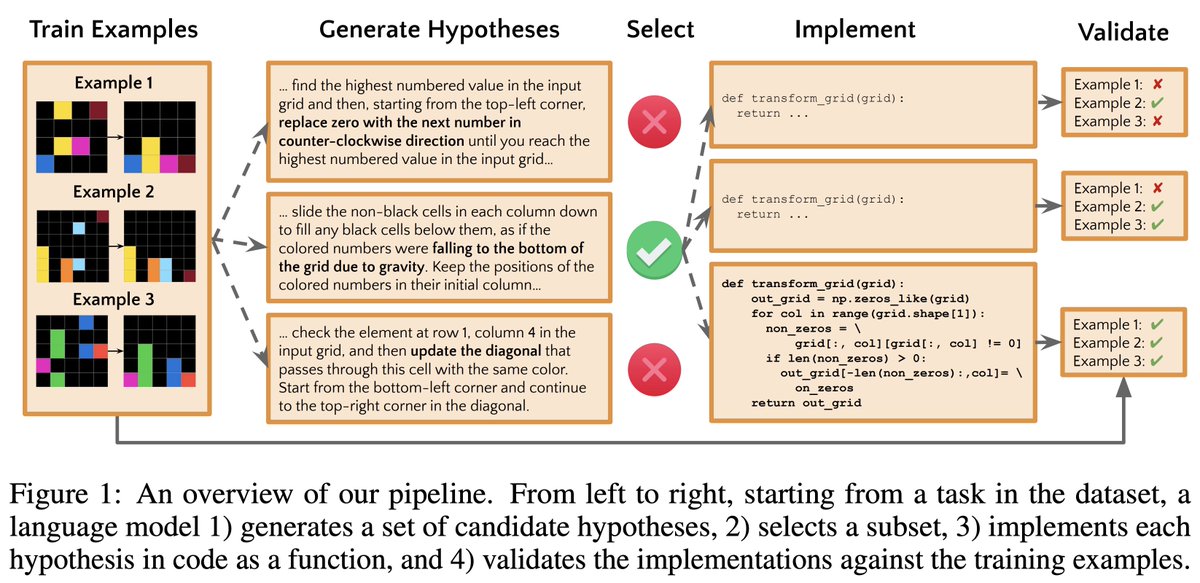

In the paper that introduced Codex, OpenAI showed that code language models fail to generate programs that chain together many simple tasks, while humans can. Parsel addresses this by separating decomposition from implementation.
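To make "separating decomposition from implementation" concrete, here is a minimal Python sketch. It is not Parsel's actual code or syntax. It assumes a decomposition has already been written as named functions (the hypothetical `is_even`, `square`, and `sum_even_squares`), that several candidate implementations of each function were sampled separately, and that the top-level function comes with input/output tests. A brute-force search then tries one candidate per function until some combination passes every test.

```python
import itertools

# Hypothetical decomposition: each function name maps to candidate
# implementations (source strings), as if sampled separately from a code LLM.
CANDIDATES: dict[str, list[str]] = {
    "is_even": [
        "def is_even(n):\n    return n % 2 == 1\n",  # deliberately buggy candidate
        "def is_even(n):\n    return n % 2 == 0\n",
    ],
    "square": [
        "def square(n):\n    return n * n\n",
    ],
    "sum_even_squares": [
        "def sum_even_squares(xs):\n"
        "    return sum(square(x) for x in xs if is_even(x))\n",
    ],
}

# Hypothetical tests for the top-level function: (args tuple, expected result).
TESTS = [(([1, 2, 3, 4],), 20), (([],), 0), (([5],), 0)]


def passes(namespace: dict, entry: str, tests) -> bool:
    """Run every test on the entry-point function; any exception counts as a failure."""
    fn = namespace[entry]
    try:
        return all(fn(*args) == expected for args, expected in tests)
    except Exception:
        return False


def search(candidates: dict[str, list[str]], entry: str, tests):
    """Return the first combination (one implementation per function) that passes all tests, or None."""
    names = list(candidates)
    for combo in itertools.product(*(candidates[n] for n in names)):
        namespace: dict = {}
        try:
            # Define all chosen functions in one shared global namespace,
            # so they can call each other by name.
            for src in combo:
                exec(src, namespace)
        except Exception:
            continue  # skip combinations that fail to define cleanly
        if passes(namespace, entry, tests):
            return dict(zip(names, combo))
    return None


if __name__ == "__main__":
    result = search(CANDIDATES, "sum_even_squares", TESTS)
    print(result["is_even"] if result else "no combination passed")
```

Here `search(CANDIDATES, "sum_even_squares", TESTS)` rejects the combination that uses the buggy `is_even` and returns the one using `n % 2 == 0`. The exhaustive `itertools.product` loop is just the simplest choice for illustration: its cost grows multiplicatively with the number of candidates per function, so a practical system would need a smarter search.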

Plus, excitingly, when LLMs write Parsel to generate step-by-step robotic plans from high-level tasks, the resulting plans are more accurate than those of a zero-shot planner baseline more than 2/3 of the time! We've also shown that Parsel can prove theorems, though we highlight key challenges.

Our initial goal was to let people write code in natural language, but we found that LLMs are also good Parsel coders! We simply asked GPT-3 to "think step by step to come up with a clever algorithm" (see arxiv.org/abs/2205.11916), then asked it to translate that algorithm into Parsel, given a few examples.

To understand the quality of the generated Parsel programs, @GabrielPoesia (an experienced competitive programmer) solved a set of competition-level APPS problems with Parsel himself. He solved 5 of 10 problems in 6 hours, including 3 where GPT-3 failed, suggesting there's still a long way to go!

This new version of the paper goes into more detail on how Parsel addresses the limitations of code language models and better quantifies the ability of LLMs to generate Parsel programs. We think there's still a ton more to be done - we look forward to hearing your thoughts!
