building ai for humans @humansand (cofounder & ceo) // was lgtm-ing @xAI, phd-ing @stanford


We start with a simple seed "improver" program that takes code and an objective function and improves the code with a language model (returning the best of k improvements). But improving code is a task, so we can pass the improver to itself! Then, repeat…
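A minimal sketch of that loop, under loud assumptions: the names `improve`, `load_improver`, `meta_utility`, and `stub_propose` are hypothetical, and the language model is replaced by a trivial stand-in function so the example runs offline. It is not the authors' implementation, only an illustration of "best of k" and of handing the improver its own source.

```python
from typing import Callable

# Seed improver kept as source text so it can later be passed to itself.
SEED_SOURCE = '''
def improve(code, utility, propose, k=4):
    """Return the highest-utility string among `code` and k proposals."""
    candidates = [code] + [propose(code) for _ in range(k)]
    return max(candidates, key=utility)
'''


def load_improver(source: str) -> Callable:
    """Execute improver source and return its `improve` function.

    Assumption: the source defines a function named `improve`.
    Running model-written code like this is unsafe without a sandbox.
    """
    namespace = {}
    exec(source, namespace)
    return namespace["improve"]


def meta_utility(improver_source, task_code, task_utility, propose):
    """Score an improver by the utility of what it produces on a downstream task."""
    try:
        improver = load_improver(improver_source)
        return task_utility(improver(task_code, task_utility, propose))
    except Exception:
        # A broken improver gets the worst possible score.
        return float("-inf")


def stub_propose(code: str) -> str:
    """Hypothetical stand-in for a language model call: just trims whitespace."""
    return code.strip()


# Toy downstream task: shorter code is "better".
task_code = "x = 1   \n\n"
task_utility = lambda code: -len(code)

# Step 1: use the seed improver on the task.
seed = load_improver(SEED_SOURCE)
best_task_code = seed(task_code, task_utility, stub_propose, k=2)

# Step 2: improving code is itself a task, so pass the improver its own source.
score_improver = lambda src: meta_utility(src, task_code, task_utility, stub_propose)
improved_source = seed(SEED_SOURCE, score_improver, stub_propose, k=2)
```

With a real model in place of `stub_propose`, step 2 could be repeated, each time loading `improved_source` and using it as the next improver.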


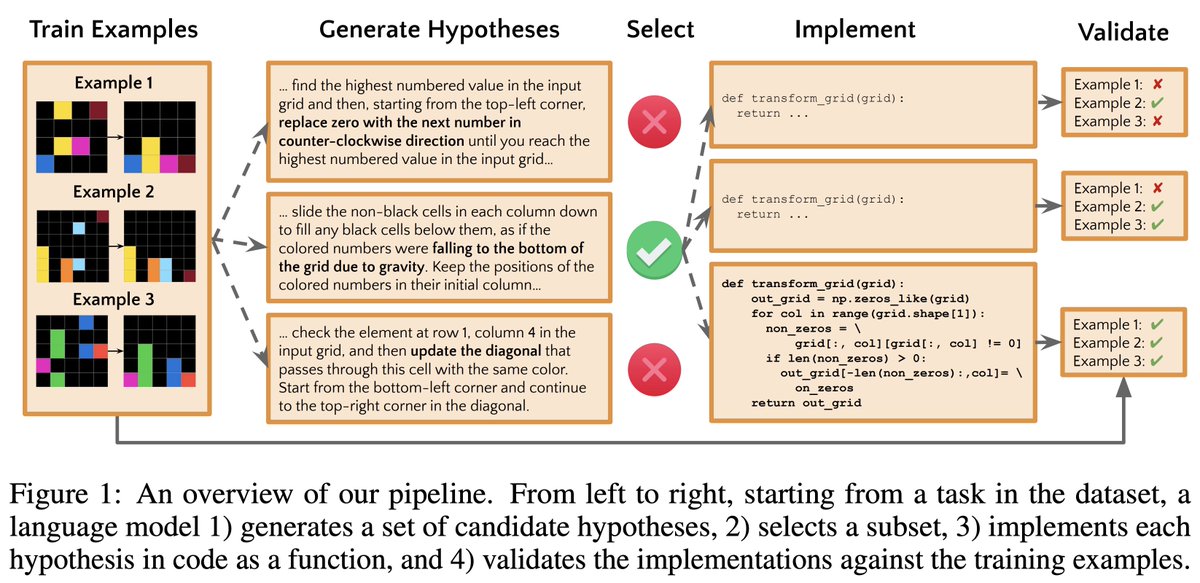

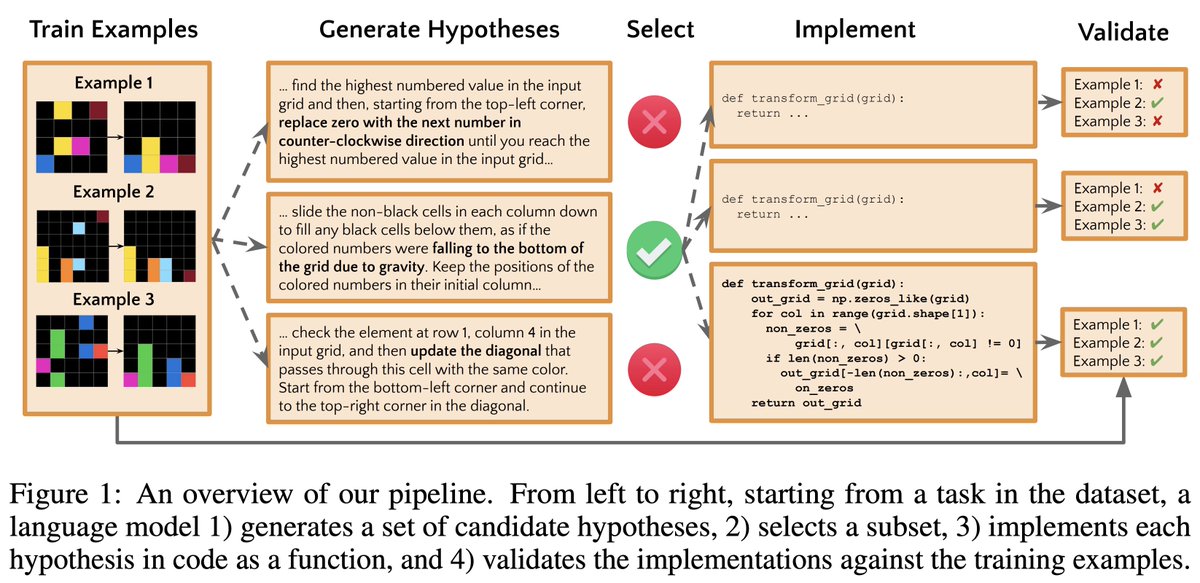

This kind of problem solving is “inductive reasoning,” and it’s essential to science and creativity. That’s why ARC has been used to argue that LLMs can’t reason and also why, when @Ruocheng suggested tackling @fchollet’s ARC, I called it a nerd snipe (xkcd.com/356/)


Decomposition🧩 and test generation🧪 go together well: if interconnected parts all pass tests, then it's more likely the solution and tests are good. But how do we know that the generated tests are any good? (2/5)
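One way to picture the first claim, as a hedged sketch rather than the thread's actual method: give each decomposed function several candidate implementations, then search for a combination in which every generated test passes. The function name `first_passing_combination` and the toy candidates and tests are assumptions made up for illustration, and the sketch does not address whether the tests themselves are good.

```python
from itertools import product
from typing import Dict, List, Optional


def first_passing_combination(
    candidates: Dict[str, List[str]], tests: List[str]
) -> Optional[Dict[str, str]]:
    """Return the first choice of one implementation per function that passes all tests."""
    names = list(candidates)
    for combo in product(*(candidates[name] for name in names)):
        namespace = {}
        try:
            # Define every chosen implementation in one shared namespace,
            # so a parent function can call its helpers.
            for source in combo:
                exec(source, namespace)
            # Run every test against the combined program.
            for test in tests:
                exec(test, namespace)
        except Exception:
            continue  # some part failed; try the next combination
        return dict(zip(names, combo))
    return None


# Hypothetical decomposition: `sum_squares` depends on `square`.
candidates = {
    "square": [
        "def square(x): return x + x",  # wrong candidate
        "def square(x): return x * x",  # right candidate
    ],
    "sum_squares": [
        "def sum_squares(xs): return sum(square(x) for x in xs)",
    ],
}
tests = [
    "assert square(3) == 9",
    "assert sum_squares([1, 2]) == 5",
]

chosen = first_passing_combination(candidates, tests)
```

Because the parent's test exercises the helper too, a wrong helper has to slip past both tests to survive, which is the sense in which interconnected parts passing together raises confidence.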


Parsel 🐍: A Unified Natural Language Framework for Algorithmic Reasoning
