My lab is moving to #JuliaLang, and I’ll be putting together some R => Julia tips for our lab and others who are interested.

Here are a few starter facts. Feel free to tag along!

Julia draws inspiration from a number of languages, but the influence of R on Julia is clear.

Let's start with packages.

Like R, Julia comes with a package manager that can be used to install pkgs from within the console (or REPL). The Pkg package isn't loaded automatically in Julia, but it's easy to do: just run `using Pkg`.

Both are different from Python, where pkgs are usually installed from the command line (eg with pip).

Julia natively takes pkg management much further than R. Want to install a package from GitHub? Easy: just pass a `url` keyword argument to the add function.

Pkg.add(url = "github.com/kdpsingh/TidyT…")

Even cooler? Press `]` from the Julia console and Pkg launches its own console for managing packages (press backspace to exit). From this view, it's even easier to add packages.

To add the Colors package, just type:

(v1.8) pkg> add Colors

Not only do you not need an equivalent of R's remotes package, you also don't need renv, bc Julia comes with built-in environments.

Use the `activate .` command from within the Pkg console to make the current folder your environment. It gets its own set of packages (tracked in its Project.toml and Manifest.toml files), similar to renv.
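If you'd rather do this from a script, Pkg has function equivalents of the REPL commands. Here's a minimal sketch, assuming a throwaway temporary folder stands in for your project folder: it activates that folder, checks which project file Julia is now using, and switches back to the default environment. I left out `Pkg.add()` because it needs network access.

```julia
using Pkg  # Pkg ships with Julia but isn't loaded automatically

# A throwaway folder standing in for your project folder (assumption for this sketch)
project_dir = mktempdir()

# Same idea as typing `activate .` in the Pkg console from inside project_dir;
# packages added after this get recorded in this folder's Project.toml
Pkg.activate(project_dir)

# Path of the project file Julia is now using
active = Base.active_project()
println(active)

# Switch back to the default (shared) environment
Pkg.activate()
```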

A couple of differences worth pointing out, which will be totally unsurprising to Python users.

There are two ways of loading Julia packages. If I wanted to use the Colors package, I could write:

import Colors

or

using Colors

`import` loads the package but keeps its names behind the Colors prefix. So to use the `distinguishable_colors()` function, I'd need to write `Colors.distinguishable_colors()`.

`using` is the R equivalent of `library()` since it brings all of that package's exported functions into the namespace.

If you only wanted `distinguishable_colors()` from the Colors package, you could write:

using Colors: distinguishable_colors

This would bring only that function into the namespace.
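Here's a runnable sketch of the difference. It uses the Statistics standard library as a stand-in for Colors (my substitution, so nothing needs installing), with `mean` and `median` playing the role of `distinguishable_colors()`.

```julia
# Statistics is a standard library, used here in place of Colors
import Statistics              # names stay behind the module prefix

xs = [1.0, 2.0, 3.0, 4.0]
avg = Statistics.mean(xs)      # must be qualified after `import`

using Statistics: median       # bring in just this one name
mid = median(xs)               # usable without the prefix

println(avg)                   # 2.5
println(mid)                   # 2.5
```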

Another difference: Julia treats single and double quotes differently (unlike R).

Strings are written like "this". Individual characters use single quotes.

Bc indexing into a string returns a character, `"this"[1] == 't'` will return true, while `"this"[1] == "t"` will return false!
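A quick sketch you can paste into the REPL to see this for yourself:

```julia
s = "this"

c = s[1]                 # an integer index returns a Char
println(typeof(c))       # Char

println(s[1] == 't')     # true: Char compared with Char
println(s[1] == "t")     # false: a Char is never == a String

# To compare with a one-character string, slice with a range or convert.
# (String indices are byte offsets, which line up with characters for ASCII text.)
println(s[1:1] == "t")        # true: a range index returns a String
println(string(s[1]) == "t")  # true
```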

That example brings up one thing I absolutely love about Julia. It's a sane language, by which I mean that it is a 1-indexed language.

I don't need to whip out a calculator to figure out how to generate starting or ending indices. In this way, it functions almost exactly like R.

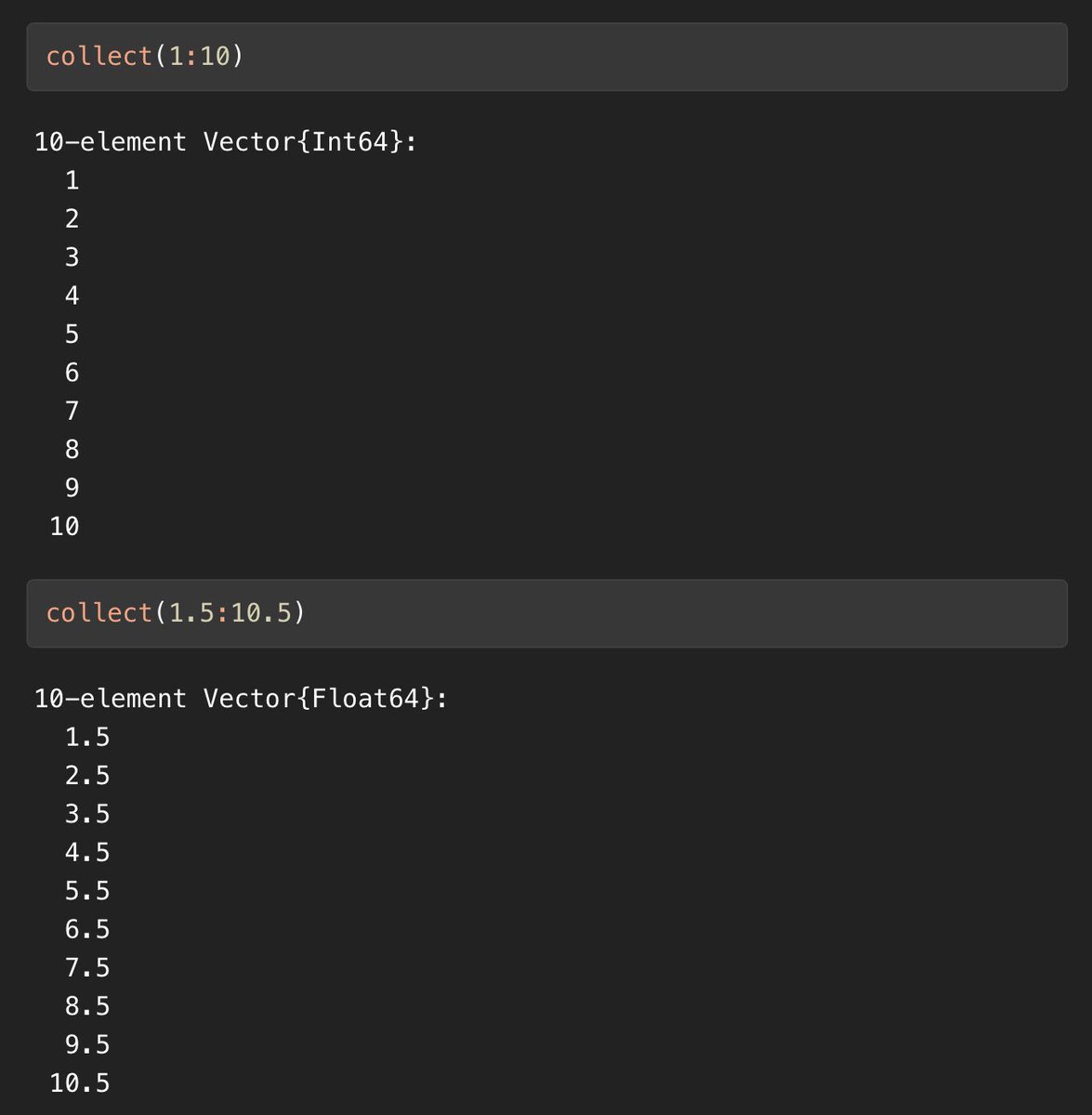

Julia also supports sequences using the `:` infix operator. To see all the values printed out, you need to `collect()` them, bc Julia is lazy (like R but sometimes lazier!): `1:10` is a range object that stores just its endpoints and computes the values only when needed.

Also, note that vectors print vertically in Julia.
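A small sketch of what a range looks like before and after `collect()`:

```julia
r = 1:5                  # a UnitRange: stores only its first and last values
println(typeof(r))       # UnitRange{Int64} on a 64-bit machine

v = collect(r)           # materialize every value into a Vector
println(v)               # [1, 2, 3, 4, 5]

# Ranges already behave like vectors for indexing and math
println(r[2])            # 2 (1-indexed, like R)
println(sum(r))          # 15
```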

What if you want to retrieve the last 2 elements of a vector whose length you don't know in advance?

Here, the Julia syntax is lovely. It has a built-in `end` keyword for referring to the last index.

This is such a pain point in R that data frame packages have each implemented their own helper function or keyword. data.table uses .N for this, and the tidyverse uses n().

Note also the use of the pipe in Julia. In practice, Chain.jl and Pipes.jl both provide a more useful pipe (more on that below).

Also, although I'm using a lot of `collect()` calls to show you values, in practice you almost never need them.
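Here's a sketch of `end` indexing on a plain vector (the values are made up for illustration). Base also has `last(a, n)`, available since Julia 1.6, as an alternative:

```julia
a = [10, 20, 30, 40, 50]

println(a[1])            # 10: the first element is index 1
println(a[end])          # 50: `end` stands for the last index
println(a[end-1:end])    # [40, 50]: the last two elements, whatever length(a) is

println(last(a, 2))      # [40, 50]
```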

What if you want to count up by 1 over numeric vectors? The R and Julia syntax is identical.

But what if you want to count up by 0.5?

If you want to count up by 0.5, you have to switch over to the seq() function in R, whereas in Julia you can use the start:step:stop syntax (eg 1:0.5:3). If you really prefer the seq() function, Julia has a range() function that does the same job.

Here’s R syntax, then Julia.
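A sketch of both Julia spellings; the R `seq()` calls in the comments are my own rough mapping, not an exact equivalence:

```julia
halves = 1:0.5:3                   # start:step:stop
println(collect(halves))           # [1.0, 1.5, 2.0, 2.5, 3.0]

# range() with keyword arguments, closer in spirit to R's seq()
same = range(1, 3; step = 0.5)     # roughly seq(1, 3, by = 0.5)
five = range(1, 3; length = 5)     # roughly seq(1, 3, length.out = 5)

println(collect(same) == collect(halves))  # true
println(collect(five) == collect(halves))  # true
```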

Another really minor but nice touch.

If you write `a = 1:10` in R and want to see the value of a in the console, you have to either separately write `a` on the next line or wrap the entire line in parentheses `(a = 1:10)`.

How about in Julia?

In Julia, if you write `a = 1:10`, it'll print out 1:10 in the console without needing to do anything else.

If you want to suppress this behavior, you can add a semicolon at the end, as in `a = 1:10;`

A few final points on sequences/ranges. In R, if you write 8:4, this will return 8 7 6 5 4. In Julia, it will return an empty range.

Why?

Because Julia assumes a default step of +1. If you want a different step, you can write 8:-1:4, which counts by -1 from 8 to 4.

If you’ve ever written a for-loop over 1:length(a) where `a` turned out to have a length of zero, you’ll love this. No need for seq_along!

This is the subject of several StackOverflow questions, like this one:

stackoverflow.com/questions/6221…
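A sketch of both behaviors; `n_iterations` is a hypothetical helper made up just to count loop passes. `eachindex()` is the usual Julia idiom for looping over a vector's indices.

```julia
println(isempty(8:4))              # true: default step is +1, so 8 up to 4 is empty
println(collect(8:-1:4))           # [8, 7, 6, 5, 4]

# Hypothetical helper: count how many times a 1:length(a) loop body runs
function n_iterations(a)
    n = 0
    for i in 1:length(a)           # 1:0 is empty, so the body never runs for an empty a
        n += 1
    end
    return n
end

println(n_iterations(Int[]))       # 0 (in R, 1:length(x) for empty x is c(1, 0))
println(n_iterations([5, 6, 7]))   # 3

# Idiomatic alternative: loop over the valid indices directly
for i in eachindex([5, 6, 7])
    println(i)
end
```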

Ok now one weird thing (for R users).

In R, if you do 1:10 + 1, this returns a new vector containing the numbers 2:11.

In Julia, (1:10) + 1 produces an error. Why? Because 1:10 is a collection and 1 is a scalar, and Julia won't silently add them element-wise (there's no automatic recycling).

If you want to vectorize the operation by “broadcasting” the scalar 1 into a vector of length 10 containing all 1s (aka R’s vector recycling), you have to do this explicitly by adding a period. Like this:

(1:10) .+ 1

This produces the expected output.

Also, the `+` function has a higher precedence than the `:` in Julia, so I had to surround the 1:10 in parentheses to make sure it got applied in the correct order.
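A sketch of the error, the dot fix, and the precedence gotcha:

```julia
r = 1:10

# Adding a scalar to a collection without a dot throws a MethodError
err = try
    r + 1
catch e
    e
end
println(err isa MethodError)            # true

shifted = r .+ 1                        # broadcast the scalar, like R's recycling
println(collect(shifted) == collect(2:11))  # true

# Precedence: without parentheses this parses as 1:(10 .+ 1)
println(collect(1:10 .+ 1) == collect(1:11))  # true
```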

As an R user, you may not be used to thinking about scalars bc everything is a vector in R.

However, not all functions are vectorized in R. For example, compare if() vs ifelse(): the first is not vectorized while the second is. lapply() and purrr's map() exist to apply functions that aren’t vectorized to each element.

In Julia, *every* function can be *automatically* vectorized by adding a period to it. For operators, the period goes before the operator (.+), and for other functions it goes after the function name, as in f.(x).

In Julia, ifelse() isn’t vectorized, but ifelse.() is.

Compiler magic 🌟.
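A sketch with a made-up `square()` function (hypothetical, not from any package) plus `ifelse.()`:

```julia
# A plain scalar function (hypothetical example)
square(x) = x^2

println(square(3))                 # 9
println(square.([1, 2, 3]))        # [1, 4, 9]: the dot applies it element-wise

x = [-2, 0, 3]
# ifelse() wants a single Bool; ifelse.() works element-wise, like R's ifelse()
labels = ifelse.(x .> 0, "pos", "non-pos")
println(labels)                    # ["non-pos", "non-pos", "pos"]
```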

Let’s move on to pipes, and then we’ll talk about non-standard eval.

Julia comes with a built-in pipe: |>

Recognize it? Yup, same as the R native pipe, which they both borrowed from F#.

Just like in R 4.1, the native Julia pipe doesn’t have a placeholder.
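Here's a sketch of the native pipe with made-up data. Without a placeholder, the usual workaround for a function that needs extra arguments is an anonymous function (`v -> ...`), as in the last step:

```julia
xs = [1, 4, 9, 16]

total = xs |> sum                  # same as sum(xs)
root = xs |> sum |> sqrt           # same as sqrt(sum(xs))

# No placeholder: wrap calls that need extra arguments in an anonymous function
rounded = root |> (v -> round(v; digits = 2))

println(total)      # 30
println(rounded)    # 5.48
```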

Two competing pipes have emerged from other packages to fill this gap: one from Pipes.jl and one from Chain.jl.

Pipes.jl uses the same |> and adds a _ placeholder.

Chain.jl uses the _ placeholder but no pipe operator.

Chain.jl is emerging as the more popular choice. To see why, compare the syntax for Pipes vs. Chain.

Pipes.jl

```julia
@pipe a |>
    do_this |>
    do_that(1, _, _)
```

Chain.jl

```julia
@chain a begin
    do_this
    do_that(1, _, _)
end
```

Unlike the R 4.2 |> pipe, both of these pkgs can re-use the placeholder (like magrittr).

What if you don’t want to do_that() anymore?

Removing that line is fine with Chain.jl. With Pipes.jl, commenting it out leaves a dangling |> at the end of the previous line, so my preference is to use Chain.jl.

The `@` at the beginning of @pipe and @chain indicates that these are actually macros and not regular functions.

Unlike regular functions, macros receive your code as an unevaluated expression and can rewrite it before it runs, which gives them superpowers.
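To make that concrete, here's a toy macro, `@with_label`, which is hypothetical and exists only for this example. It gets the unevaluated expression, turns it into text (a bit like R's `deparse(substitute(x))`), and returns that text alongside the value:

```julia
# Hypothetical macro: receives the *code* it is called on, not its value
macro with_label(ex)
    label = string(ex)                 # the expression as text
    return :(($label, $(esc(ex))))     # a (text, value) tuple; esc() evaluates ex in the caller's scope
end

x = 5
println(@with_label(x + 1))            # ("x + 1", 6)
```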

Also, the `begin` and `end` keywords are Julia’s equivalent of curly braces in R. Curly braces serve a different purpose in Julia (eg type parameters, as in `Vector{Int}`).

Even though I show the code indented, Julia doesn’t enforce indentation rules (unlike Python).

A quick word on non-standard evaluation (NSE): this is a common programming pattern in tidyverse R, and it's controversial bc it’s not always clear whether a function expects NSE (eg Age) or SE (eg “Age”), which introduces the need for quasiquotation, etc.

In Julia, the counterpart of an unquoted name (eg Age) is a symbol, which is always written with a leading colon (eg :Age). Expressions also have a shorthand involving a colon, eg :(1+1) is a quoted expression.

So you can generally differentiate NSE from SE in Julia.
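A quick sketch of symbols and quoted expressions in base Julia. (DataFrames.jl, for example, accepts column names as either symbols or strings, eg `:Age` or `"Age"`.)

```julia
col = :Age                       # a Symbol: a quoted name
println(typeof(col))             # Symbol
println(String(col))             # Age
println(Symbol("Age") === col)   # true: converting the string gives the same symbol

ex = :(1 + 1)                    # a quoted expression, not yet evaluated
println(typeof(ex))              # Expr
println(eval(ex))                # 2
```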

Lots more to learn and share. Resources I have found super helpful:

- docs.julialang.org/en/v1/manual/n…

- dataframes.juliadata.org/stable/

- aog.makie.org/stable/generat…

- manning.com/books/julia-fo…

- juliadatascience.io

and many others!

Thanks to the language and package devs!