Faculty @UCSanDiego @InnovationUCSDH. Chief Health AI Officer @UCSDHealth. Creator of @Tidierjl #JuliaLang. #GoBlue. Views own.

https://twitter.com/annalsofim/status/1711834106009419777

If you evaluate such a model *after* it has been linked to a clinical workflow, the model’s “apparent” performance will look worse.
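
A toy Python sketch of why this can happen, under loudly stated assumptions: the scores and outcomes are invented, and an alert (score at or above a hypothetical 0.75 threshold) is assumed to trigger an intervention that fully prevents the outcome in two of the flagged patients. Scored against the outcomes actually observed after deployment, the same model looks less discriminating, because the events it helped avert now count against it.

```python
from typing import List, Sequence, Set


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based AUC: share of (event, non-event) pairs ordered correctly; ties count half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one event and one non-event")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def observed_after_intervention(
    scores: Sequence[float], labels: Sequence[int], threshold: float, prevented: Set[int]
) -> List[int]:
    """Hypothetical deployment: flagged patients (score >= threshold) whose index is in
    `prevented` get an intervention that averts the outcome, so it is never observed."""
    return [
        0 if (s >= threshold and i in prevented) else y
        for i, (s, y) in enumerate(zip(scores, labels))
    ]


# Invented data for illustration only.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 0, 1, 0, 1, 0, 0]

observed = observed_after_intervention(scores, labels, threshold=0.75, prevented={0, 1})
print(round(auc(scores, labels), 2))    # 0.85: performance with no intervention
print(round(auc(scores, observed), 2))  # 0.5: "apparent" performance after deployment
```

The model's scores are identical in both lines; only the outcomes it is judged against changed.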

TidierPlots.jl is getting to be crazily feature-complete, even supporting `geom_text()`, `geom_label()`, and faceting.

https://twitter.com/prpayne5/status/1642917015739416577

In an earlier single-center study at @umichmedicine, our paper and the accompanying editorial framed our AUC of 0.63 as a failure of “external” validity. The result was somewhat surprising because other studies had reported higher AUCs, sensitivities, and specificities.

One interesting thing is that lag() and lead() take in a vector and return a vector (similar to ntile).
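
A minimal Python sketch of that vector-in, vector-out behavior (an illustration of the semantics, not the implementation in any of the packages mentioned here). Positions shifted in from outside the vector are filled with None, standing in for a missing value (NA in R); the `visits` data are invented.

```python
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def lag(values: Sequence[T], n: int = 1) -> List[Optional[T]]:
    """Same-length vector where each slot holds the value n positions earlier.
    The first n slots have no earlier value and are filled with None."""
    if n < 0:
        raise ValueError("n must be non-negative")
    n = min(n, len(values))
    return [None] * n + list(values[: len(values) - n])


def lead(values: Sequence[T], n: int = 1) -> List[Optional[T]]:
    """Same-length vector where each slot holds the value n positions later.
    The last n slots have no later value and are filled with None."""
    if n < 0:
        raise ValueError("n must be non-negative")
    n = min(n, len(values))
    return list(values[n:]) + [None] * n


visits = [3, 5, 4, 8]
print(lag(visits))   # [None, 3, 5, 4]
print(lead(visits))  # [5, 4, 8, None]

# Because the output lines up with the input, it can be combined element-wise,
# e.g. change from the previous value:
print([x - p if p is not None else None for x, p in zip(visits, lag(visits))])
# [None, 2, -1, 4]
```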

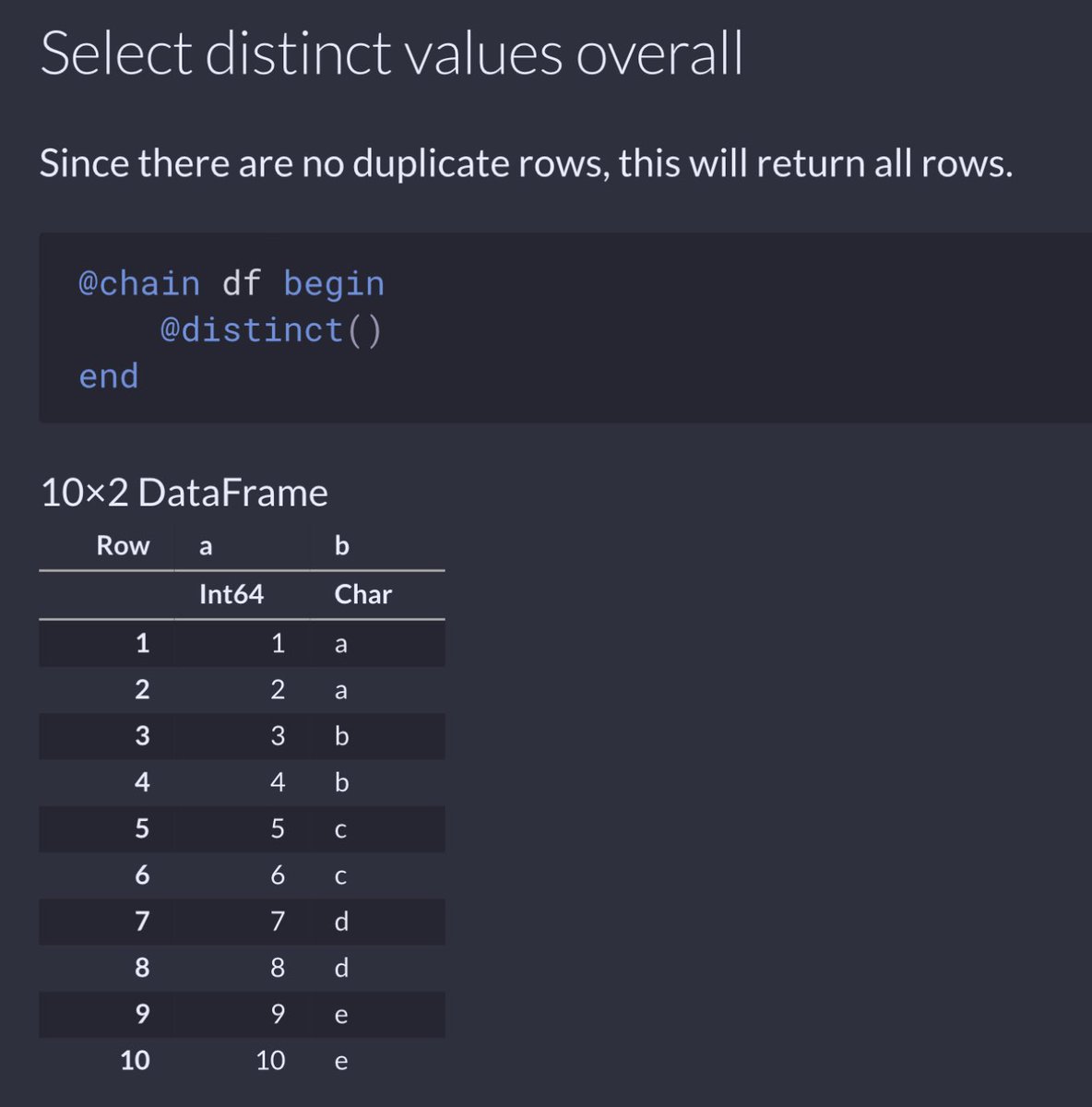

If you use distinct() without any arguments, it behaves just like the #rstats {tidyverse} distinct().
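
In the tidyverse, `distinct()` called with no column arguments compares rows on all columns, keeps only unique rows, and returns them in their original order. The Python sketch below reproduces that behavior on a plain list of dicts; it illustrates the semantics only and is not the implementation used by any of these packages. The example rows are invented.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def distinct(rows: List[Row]) -> List[Row]:
    """Drop rows that duplicate an earlier row across *all* columns.

    Keeps the first occurrence and preserves input order. Assumes every row has
    the same columns and that cell values are hashable."""
    seen = set()
    out: List[Row] = []
    for row in rows:
        key = tuple(sorted(row.items()))  # column order doesn't matter for equality
        if key not in seen:
            seen.add(key)
            out.append(row)
    return out


rows = [
    {"id": 1, "group": "a"},
    {"id": 2, "group": "b"},
    {"id": 1, "group": "a"},  # exact duplicate of the first row: dropped
    {"id": 1, "group": "b"},  # same id, different group: kept
]
print(distinct(rows))
# [{'id': 1, 'group': 'a'}, {'id': 2, 'group': 'b'}, {'id': 1, 'group': 'b'}]
```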

https://twitter.com/kdpsinghlab/status/1628464344785727489

When we link an intervention to a model threshold (e.g., alerts), we often worry about overalerting.
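
A small Python sketch of how over-alerting is usually quantified at a given threshold: how many alerts fire, what fraction are true positives (PPV), and how many alerts clinicians see per true positive caught. The function name and data are hypothetical.

```python
import math
from typing import Dict, Sequence


def alert_burden(scores: Sequence[float], labels: Sequence[int], threshold: float) -> Dict[str, float]:
    """Summarize the alerts fired when every score >= threshold triggers an alert."""
    fired = [y for s, y in zip(scores, labels) if s >= threshold]
    n_alerts = len(fired)
    true_pos = sum(fired)
    return {
        "alerts": n_alerts,
        "alert_rate": n_alerts / len(scores),
        "ppv": true_pos / n_alerts if n_alerts else math.nan,
        # Alerts seen per true positive caught (1 / PPV).
        "alerts_per_true_positive": n_alerts / true_pos if true_pos else math.inf,
    }


# Invented scores and outcomes for illustration.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
labels = [1, 0, 1, 0, 0, 1, 0, 0]
for t in (0.75, 0.45):
    print(t, alert_burden(scores, labels, t))
```

With these numbers, lowering the threshold from 0.75 to 0.45 raises the alert count from 2 to 5 while PPV falls from 0.5 to 0.4, so each true positive costs 2.5 alerts instead of 2.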

We use 4 case studies to show how a resource constraint diminishes the usefulness of a model and changes the optimal resource allocation strategy.
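
A toy Python sketch of one way a resource constraint can cap what a model delivers. It assumes a hypothetical team that can act on at most `capacity` patients and, when constrained, prioritizes the highest-risk eligible ones. The data are invented and this is not the setup used in the case studies.

```python
from typing import List, Sequence


def flag_above_threshold(scores: Sequence[float], threshold: float) -> List[int]:
    """Unconstrained strategy: act on every patient at or above the threshold."""
    return [i for i, s in enumerate(scores) if s >= threshold]


def flag_with_capacity(scores: Sequence[float], threshold: float, capacity: int) -> List[int]:
    """Constrained strategy (assumed): among eligible patients, act only on the
    `capacity` highest-risk ones, because that is all the team can handle."""
    eligible = flag_above_threshold(scores, threshold)
    eligible.sort(key=lambda i: scores[i], reverse=True)
    return eligible[:capacity]


def events_reached(flagged: Sequence[int], labels: Sequence[int]) -> int:
    """Number of patients who go on to have the outcome and were acted on."""
    return sum(labels[i] for i in flagged)


# Invented data: 8 patients, 5 of whom go on to have the outcome.
scores = [0.90, 0.85, 0.80, 0.70, 0.60, 0.55, 0.30, 0.20]
labels = [1, 1, 0, 1, 1, 0, 0, 1]

print(events_reached(flag_above_threshold(scores, 0.5), labels))            # 4
print(events_reached(flag_with_capacity(scores, 0.5, capacity=3), labels))  # 2
```

Here the same model and threshold reach 4 of the 5 eventual events when capacity is unlimited but only 2 when capacity is 3; under the constraint, capacity rather than the threshold decides who receives the intervention.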

While the FD&C Act was passed in 1938, the FDA didn't gain broad authority to regulate medical devices (including premarket review) until 1976, when the Medical Device Amendments amended the FD&C Act.

https://twitter.com/gwstagg/status/1495495339444473858

When the web was first introduced, there wasn't a clear choice of which scripting language should be used; eventually the world settled on JavaScript, which implements the ECMAScript specification (see here: ).

When people talk about risk stratifying cancer outcomes, there’s an implicit assumption that what’s being modeled is biology.

https://twitter.com/IAmSamFin/status/1415417258873131011

Why silent? Shouldn’t it be obvious if models get miscalibrated over time?
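
One general reason such drift can go unnoticed is that nothing fails visibly: a model can keep producing plausible-looking risks, and keep ranking patients reasonably, while those risks drift away from observed event rates unless someone checks. A simple check (one of several; calibration curves are another) is the observed-to-expected (O/E) ratio per time window, sketched below in Python with invented numbers.

```python
from typing import List, Sequence, Tuple


def observed_to_expected(preds: Sequence[float], outcomes: Sequence[int]) -> float:
    """Observed events divided by the sum of predicted risks.

    1.0 means calibration-in-the-large holds; > 1 means the model under-predicts risk."""
    expected = sum(preds)
    if expected == 0:
        raise ValueError("sum of predicted risks is zero")
    return sum(outcomes) / expected


# Invented windows: the model keeps predicting 25% risk while the true event rate drifts up.
windows: List[Tuple[str, List[float], List[int]]] = [
    ("Q1", [0.25] * 8, [1, 1, 0, 0, 0, 0, 0, 0]),
    ("Q2", [0.25] * 8, [1, 1, 1, 0, 0, 0, 0, 0]),
    ("Q3", [0.25] * 8, [1, 1, 1, 1, 0, 0, 0, 0]),
]
for name, preds, outcomes in windows:
    print(name, observed_to_expected(preds, outcomes))
# Q1 1.0
# Q2 1.5
# Q3 2.0
```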

https://twitter.com/kdpsinghlab/status/1186114527668199425

https://twitter.com/kdpsinghlab/status/1407208969039396866

Our biggest limitation is that our results come from a single center.

https://twitter.com/jamainternalmed/status/1407005406514319361

Here are some questions that come up:

https://twitter.com/kdpsinghlab/status/1370216736130220037

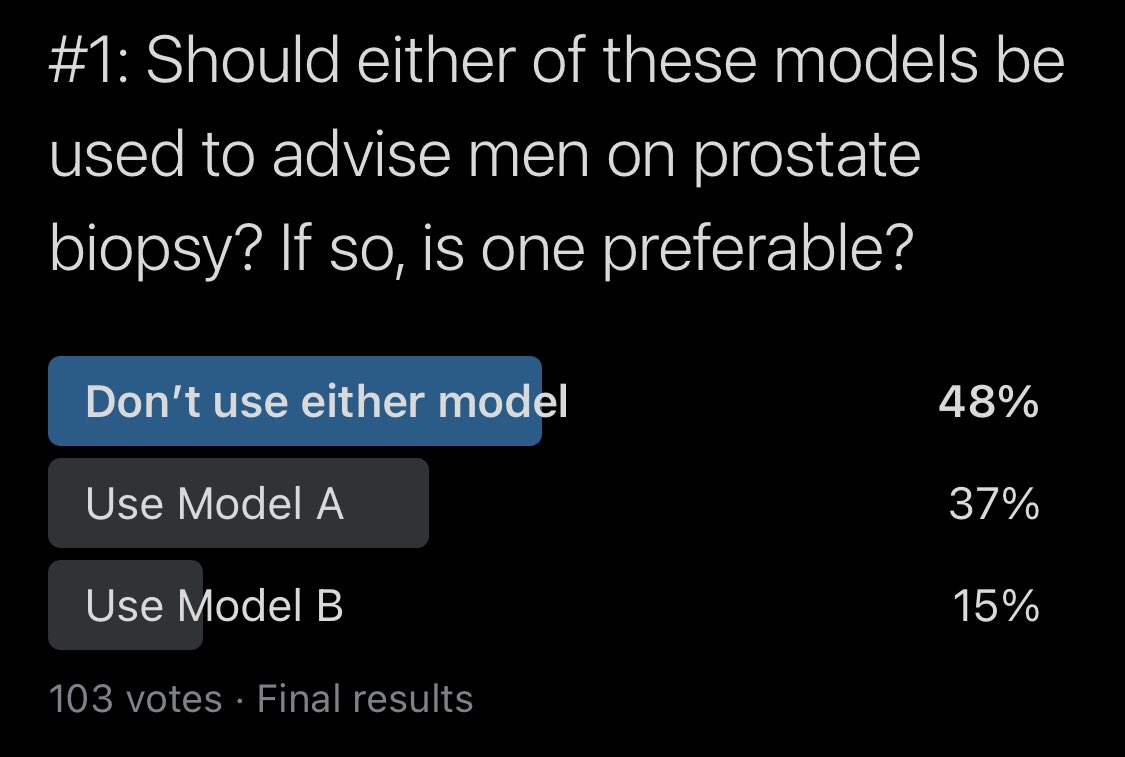

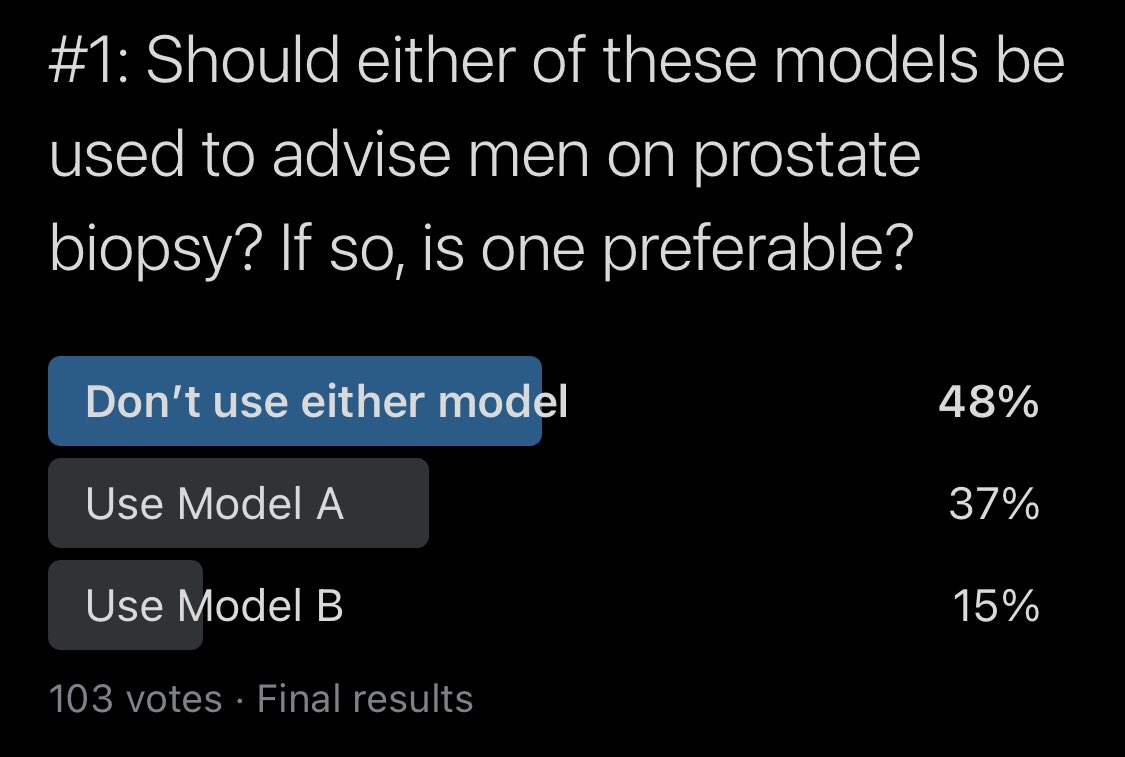

First, let’s poll folks who felt the model shouldn’t be used. What aspect of the model were you dissatisfied with?

https://twitter.com/kdpsinghlab/status/1370978346763444224

The maximal net benefit of a model in a given setting is determined by the proportion of people who experience the outcome.
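
Using the standard decision-curve definition of net benefit at threshold probability pt, NB = TP/N - (FP/N) × pt / (1 - pt), a perfect model flags every event and no non-events, so TP/N equals the prevalence and the penalty term is zero. No model can exceed that. The Python sketch below checks this on invented data; the function name and data are illustrative.

```python
from typing import Sequence


def net_benefit(risks: Sequence[float], labels: Sequence[int], pt: float) -> float:
    """Net benefit at threshold probability pt, treating risk >= pt as 'intervene'."""
    if not 0 < pt < 1:
        raise ValueError("pt must be strictly between 0 and 1")
    n = len(labels)
    tp = sum(1 for r, y in zip(risks, labels) if r >= pt and y == 1)
    fp = sum(1 for r, y in zip(risks, labels) if r >= pt and y == 0)
    # False positives are weighted by the odds at the threshold, pt / (1 - pt).
    return tp / n - (fp / n) * (pt / (1 - pt))


labels = [1, 0, 0, 0, 1, 0, 0, 0, 0, 0]   # prevalence = 2/10 = 0.2
perfect = [float(y) for y in labels]       # predicts 1.0 for events, 0.0 otherwise
imperfect = [0.6, 0.5, 0.4, 0.1, 0.05, 0.3, 0.1, 0.1, 0.1, 0.1]

print(net_benefit(perfect, labels, pt=0.2))              # 0.2, equal to prevalence
print(round(net_benefit(imperfect, labels, pt=0.2), 3))  # 0.025
```

The perfect model's net benefit stays at 0.2 for any pt between 0 and 1, which is why prevalence sets the ceiling in a given setting.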

https://twitter.com/kdpsinghlab/status/1367569074759294978

https://twitter.com/kdpsinghlab/status/1370216729889140737

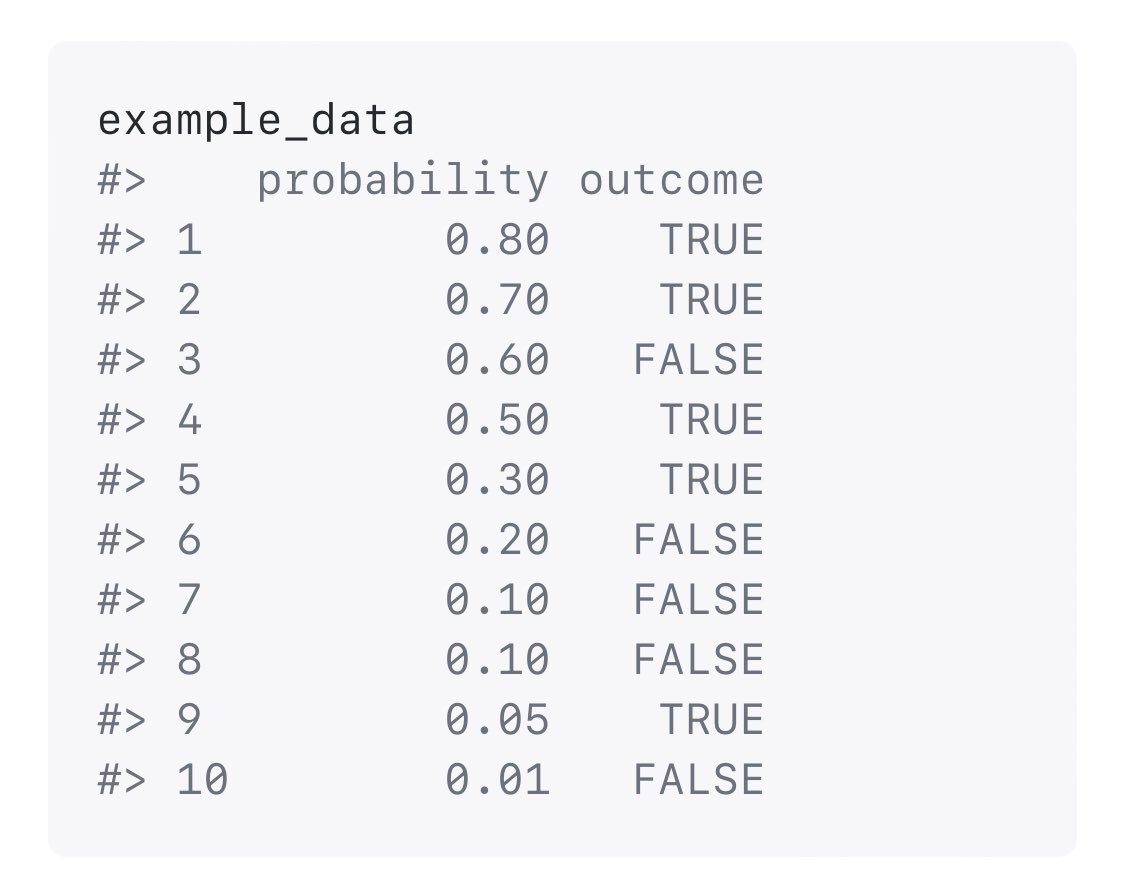

I’ll get to why I voted for Model B, but I’ll start in order and share everything I looked at to arrive at that opinion.

One issue that touched a nerve was that, in my example, I calculated a post-hoc threshold based on sensitivity. Was I wrong to do this? Let me explain why this happened in this situation, why it *might've* been our only option, and how I would do it differently.

https://twitter.com/vickersbiostats/status/1366068394408222730
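
For readers unfamiliar with the step being discussed, here is a minimal Python sketch of one way to calculate a post-hoc threshold from a target sensitivity: sort the scores of patients who had the outcome and take the highest cutoff that still captures the target share of them. The function names and data are hypothetical, and this is not necessarily how the original analysis computed it. Because the cutoff is chosen on the same data used to report performance, the target sensitivity holds on that data by construction and will generally not hold exactly on new data.

```python
import math
from typing import Sequence


def threshold_for_sensitivity(scores: Sequence[float], labels: Sequence[int], target: float) -> float:
    """Highest cutoff t such that flagging score >= t captures at least `target` of the events."""
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    event_scores = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not event_scores:
        raise ValueError("no events in the data")
    # Number of events that must be flagged; the small epsilon guards against
    # floating-point products landing just above an integer, and max(1, ...)
    # ensures at least one event is captured for tiny targets.
    needed = max(1, math.ceil(target * len(event_scores) - 1e-9))
    return event_scores[needed - 1]


def sensitivity(scores: Sequence[float], labels: Sequence[int], cutoff: float) -> float:
    """Fraction of events with score >= cutoff."""
    flagged_events = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= cutoff)
    return flagged_events / sum(labels)


# Invented data: 10 patients, 5 events.
scores = [0.95, 0.90, 0.85, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]

cutoff = threshold_for_sensitivity(scores, labels, target=0.8)
print(cutoff, sensitivity(scores, labels, cutoff))  # 0.5 0.8
```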

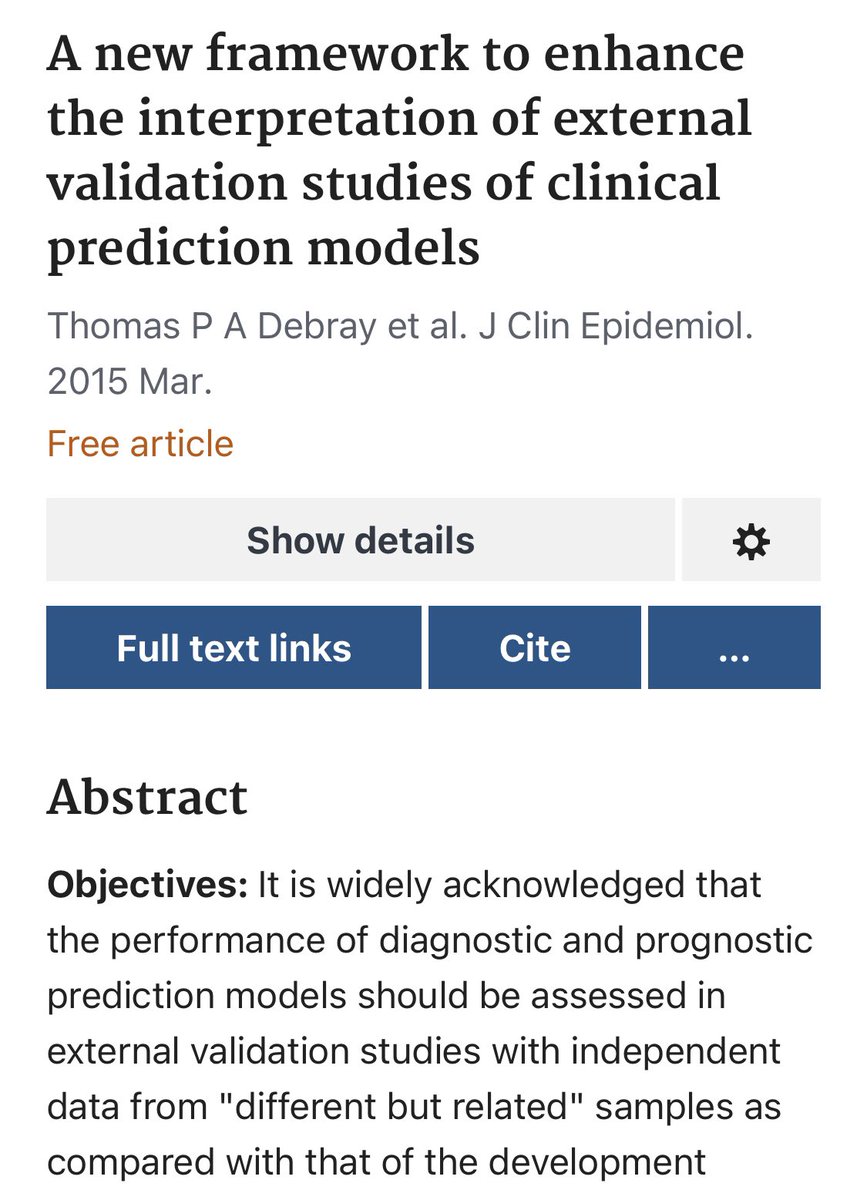

Lack of understanding isn't for lack of trying on the part of its authors. There are dozens of papers with thousands of citations!
