Music & sound effect industry has not fully understood the size of the storm about to hit.

There’re not just one, or two, but FOUR audio models in the past week *alone*

If 2022 is the year of pixels for generative AI, then 2023 is the year of sound waves.

Deep dive with me: 🧵

There’re not just one, or two, but FOUR audio models in the past week *alone*

If 2022 is the year of pixels for generative AI, then 2023 is the year of sound waves.

Deep dive with me: 🧵

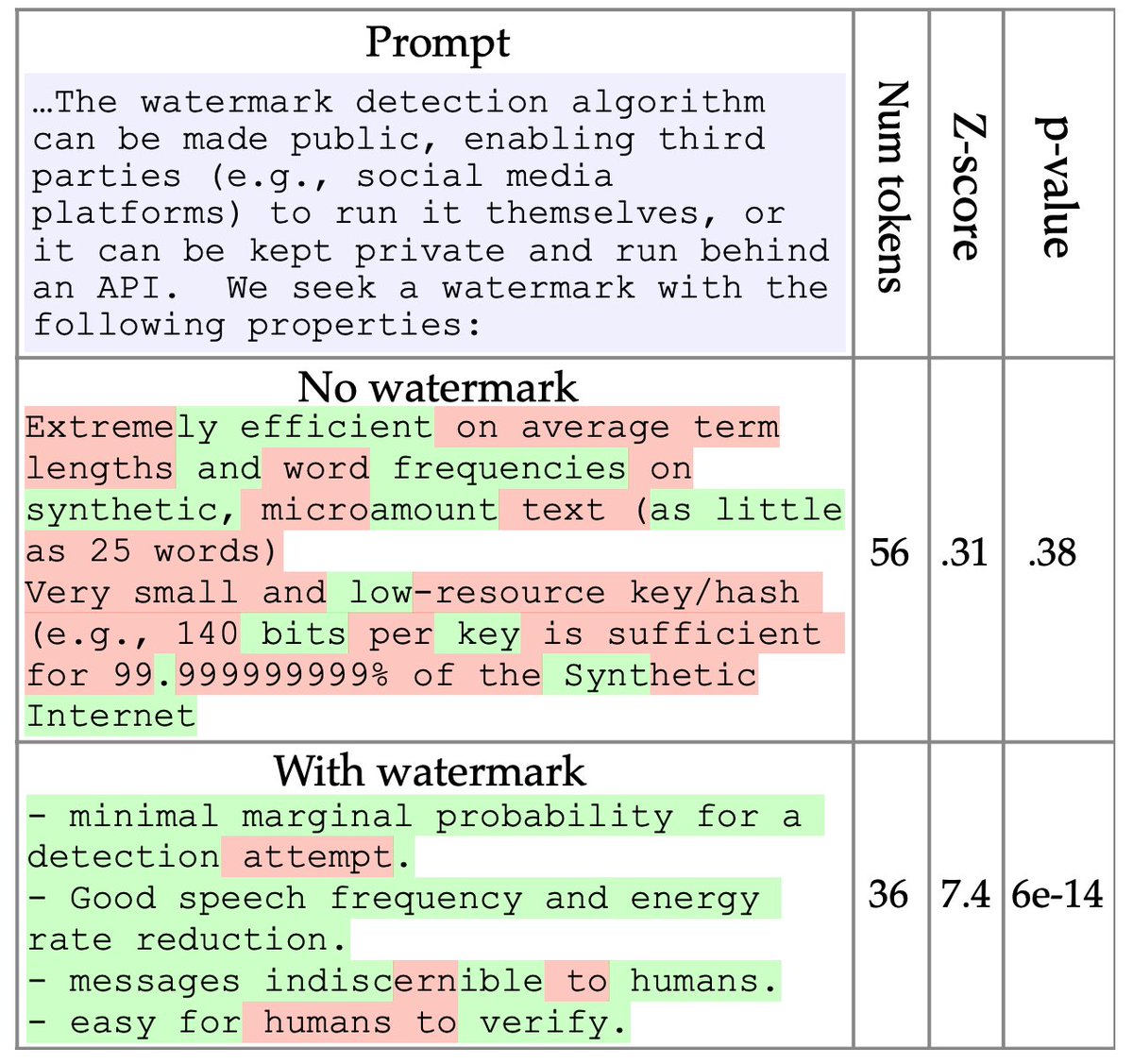

MusicLM by @GoogleAI, a hierarchical text-to-audio model that generates music at 24 kHz that remains consistent over several minutes. It relies on 3 key pre-trained modules: SoundStream, w2v-BERT, and MuLan.

1.1/

1.1/

Among the three, MuLan is particularly interesting - it’s a CLIP-like model that learns to encode paired audio and text closer to each other in the embedding space. MuLan helps address the limited paired data issue - now MusicLM can learn from large audio-only corpus.

1.2/

1.2/

Google also publicly release MusicCaps, a dataset of 5.5k music-text pairs.

MusicLM demo: google-research.github.io/seanet/musiclm…

Paper: arxiv.org/abs/2301.11325

1.3/

MusicLM demo: google-research.github.io/seanet/musiclm…

Paper: arxiv.org/abs/2301.11325

1.3/

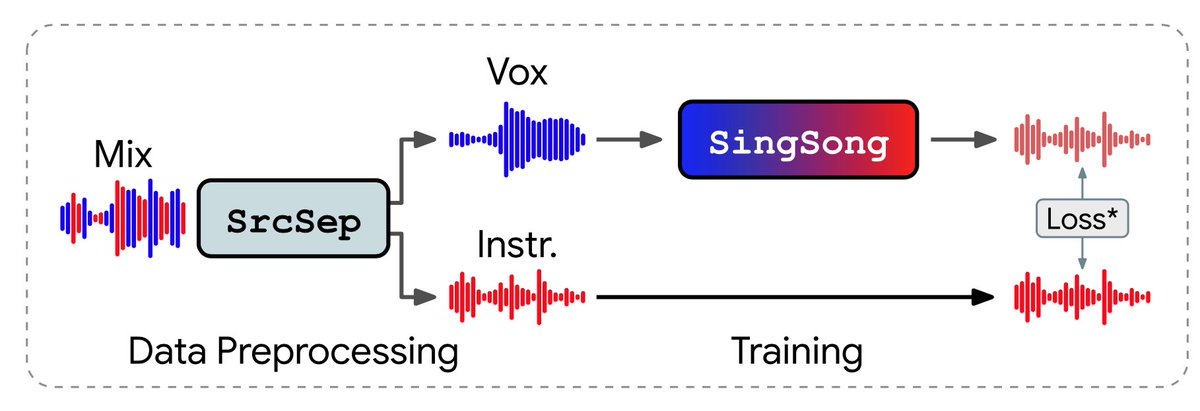

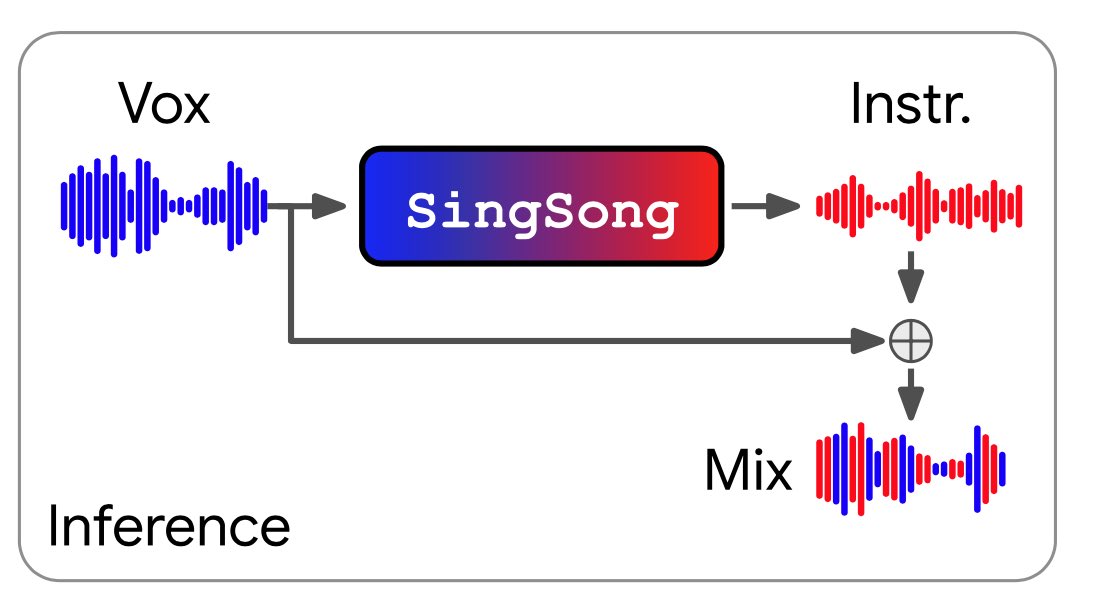

SingSong, a super clever application that maps singing voice to instrument accompaniment. Now you get your own private, customized, deluxe band! Made by @GoogleMagenta

See my spotlight thread below:

2/

See my spotlight thread below:

2/

https://twitter.com/drjimfan/status/1620802891123671040

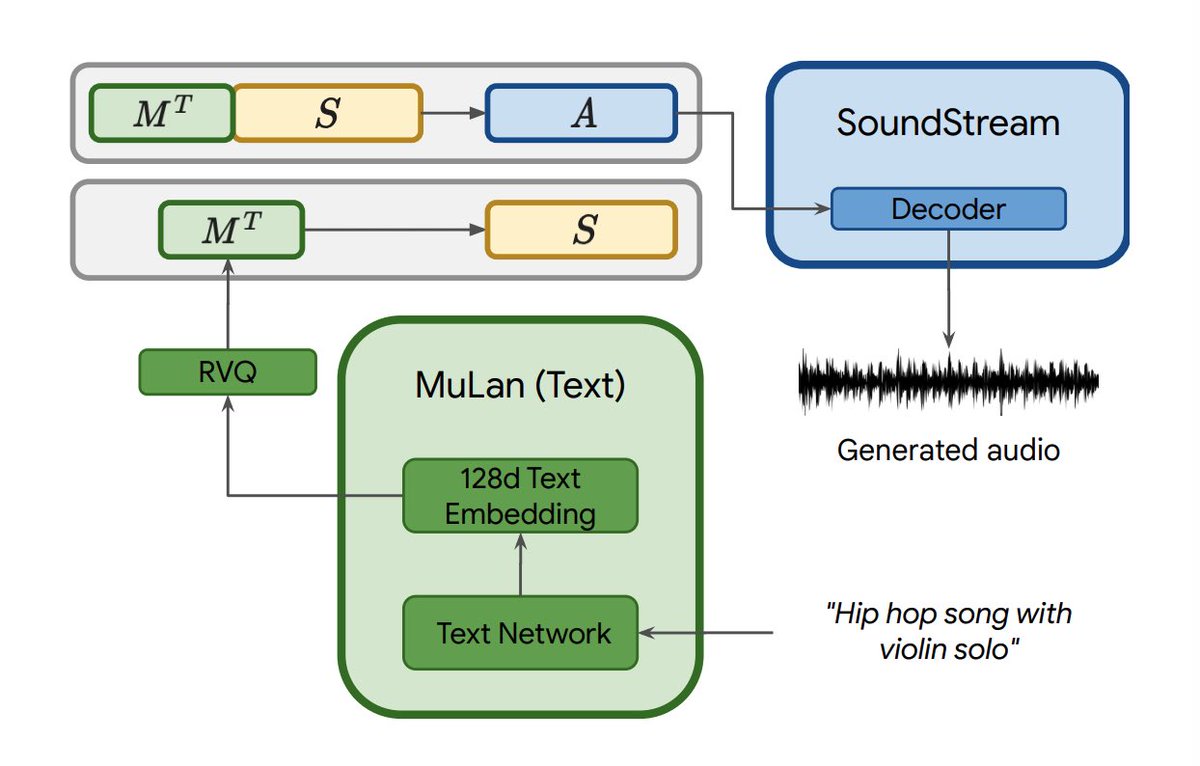

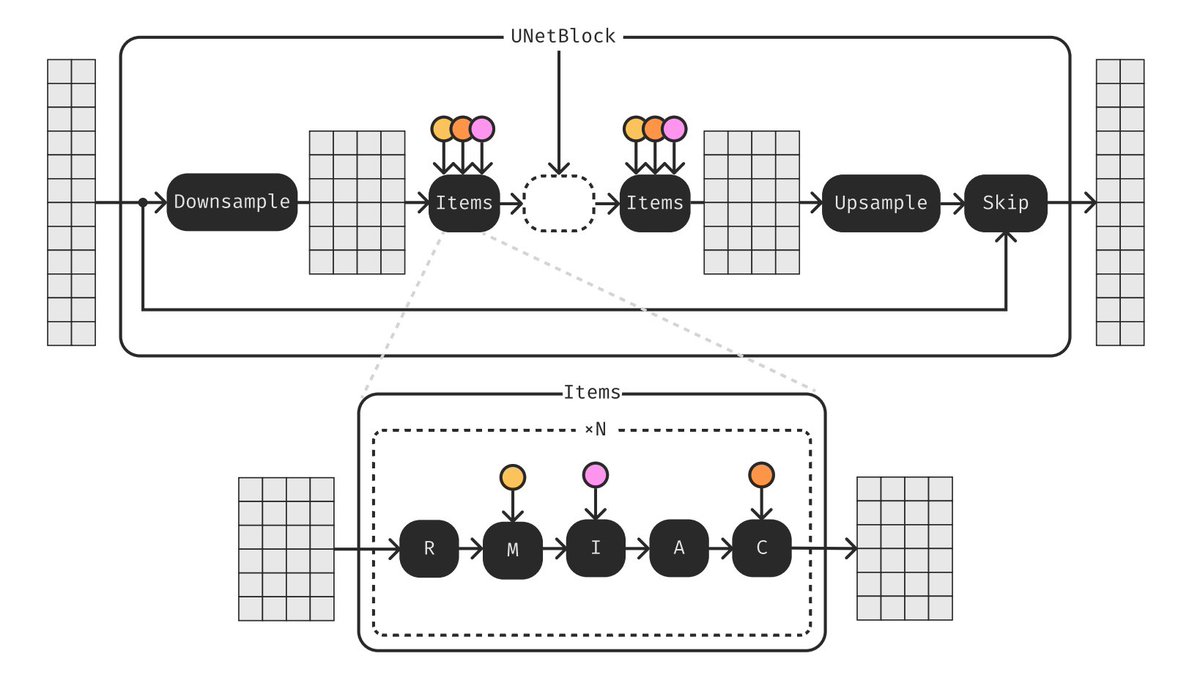

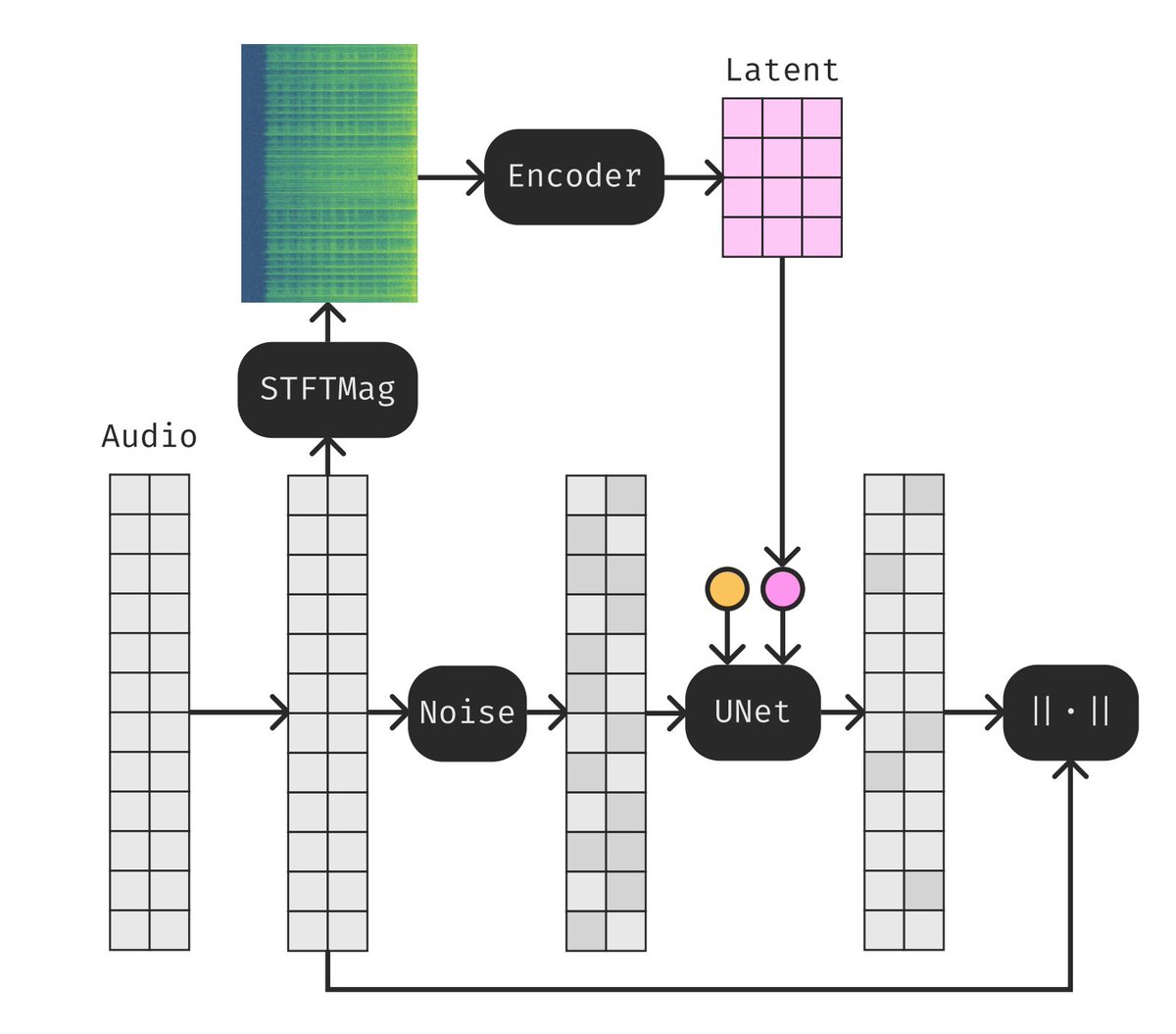

Moûsai, another text-to-music generative model that leverages latent diffusion. Yes, this is the same underlying technique as Stable Diffusion! Using latent diffusion is good for dealing with longer context while keeping efficiency. The neural workflow is as follows:

3.1/

3.1/

First, the text prompt is encoded by a pretrained and frozen language model into a text embedding. Conditioned on the text, the model generates a compressed latent with the diffusion generator, which then gets translated into the final waveform by a diffusion decoder.

3.2/

3.2/

Moûsai can generate minutes of high-quality stereo music at 48kHz from captions.

Paper by Schneider et al: arxiv.org/abs/2301.11757

Demo: bit.ly/3YnQFUt

Code is open-source!! github.com/archinetai/aud…

3.3/

Paper by Schneider et al: arxiv.org/abs/2301.11757

Demo: bit.ly/3YnQFUt

Code is open-source!! github.com/archinetai/aud…

3.3/

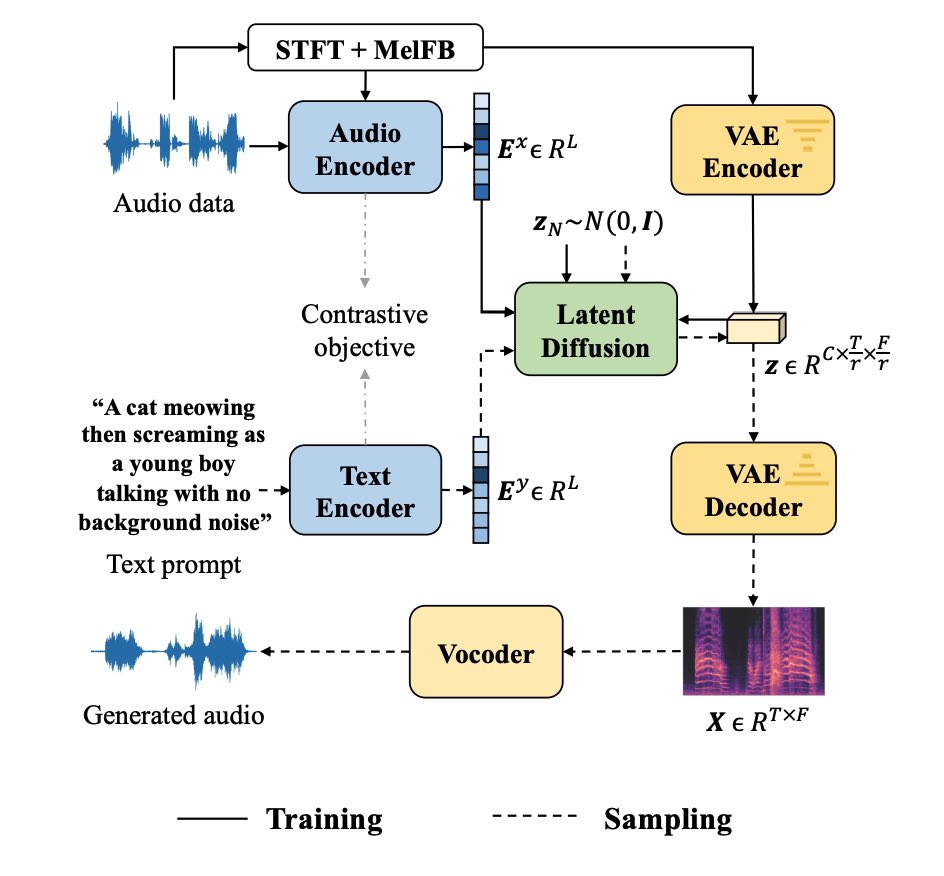

AudioLDM: also a latent diffusion model for audio generation. Similar to Google’s MusicLM, they train a CLIP-style, audio-text contrastive model called CLAP to provide high-quality embeddings.

4.1/

4.1/

Their demos cover not just music, but sound effects like “Two space shuttles are fighting in the space”: audioldm.github.io

Paper by Liu et al: arxiv.org/abs/2301.12503

4.2/

Paper by Liu et al: arxiv.org/abs/2301.12503

4.2/

Parting words: I do NOT believe any of these models will replace human musicians and SFX artists. Instead, I think they will change the industry by making artists more *productive*, serving as their inspiration and co-pilots.

Follow me for deep dives on the latest in AI 🙌

END/

Follow me for deep dives on the latest in AI 🙌

END/

• • •

Missing some Tweet in this thread? You can try to

force a refresh