Strap in folks --- we have a blog post from @sundarpichai at @google about their response to #ChatGPT to unpack!

blog.google/technology/ai/…

#MathyMath #AIHype

blog.google/technology/ai/…

#MathyMath #AIHype

Step 1: Lead off with AI hype. AI is "profound"!! It helps people "unlock their potential"!!

There is some useful tech that meets the description in these paragraphs. But I don't think anything is clarified by calling machine translation or information extraction "AI".

>>

There is some useful tech that meets the description in these paragraphs. But I don't think anything is clarified by calling machine translation or information extraction "AI".

>>

And then another instance of "standing in awe of scale". The subtext here is it's getting bigger so fast --- look at all of that progress! But progress towards what and measured how?

#AIHype #InAweOfScale

>>

#AIHype #InAweOfScale

>>

And then a few glowing paragraphs about "Bard", which seems to be the direct #ChatGPT competitor, built off of LaMDA. Note the selling point of broad topic coverage: that is, leaning into the way in which apparent fluency on many topics provokes unearned trust.

>>

>>

Let's sit with that prev quote a bit longer. No, the web is not "the world's knowledge" nor does the info on the web represent the "breadth" of same. Also, large language models are neither intelligent nor creative.

>>

>>

Next some reassurance that they're using the lightweight version, so that when millions of people use it every day, it's a smaller amount of electricity (~ carbon footprint) multiplied by millions. Okay, better than the heavyweight version, but just how much carbon, Sundar?

>>

>>

"High bar for quality, safety and groundedness" in the prev quote links to this page:

ai.googleblog.com/2022/01/lamda-…

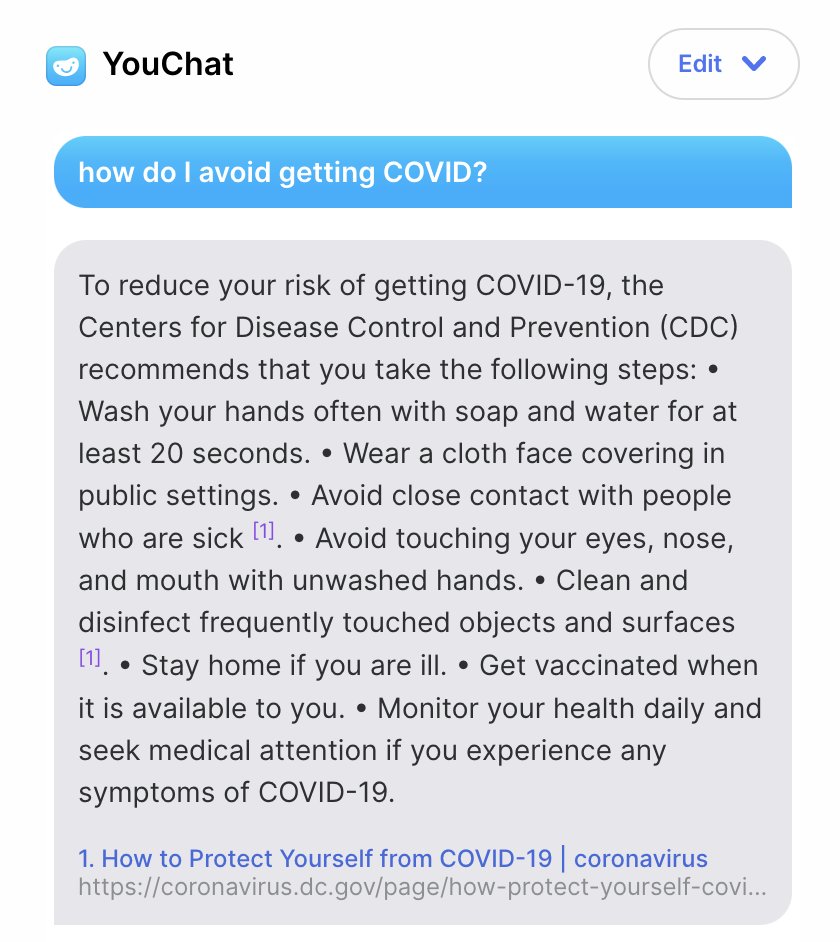

Reminder: The state of the art for providing the source of the information you are linking to is 100%, when what you return is a link, rather than synthetic text.

>>

ai.googleblog.com/2022/01/lamda-…

Reminder: The state of the art for providing the source of the information you are linking to is 100%, when what you return is a link, rather than synthetic text.

>>

Finally, we get Sundar/Google promising exactly what @chirag_shah and I warned against in our paper "Situating Search" (CHIIR 2022): It is harmful to human sense making, information literacy and learning for the computer to do this distilling work, even when it's not wrong. >>

Why aren't chatbots good replacements for search engines? See this thread:

https://twitter.com/emilymbender/status/1606734504412221440?s=20&t=MrDe8bjfFBg5WvqRaiel3Q

• • •

Missing some Tweet in this thread? You can try to

force a refresh