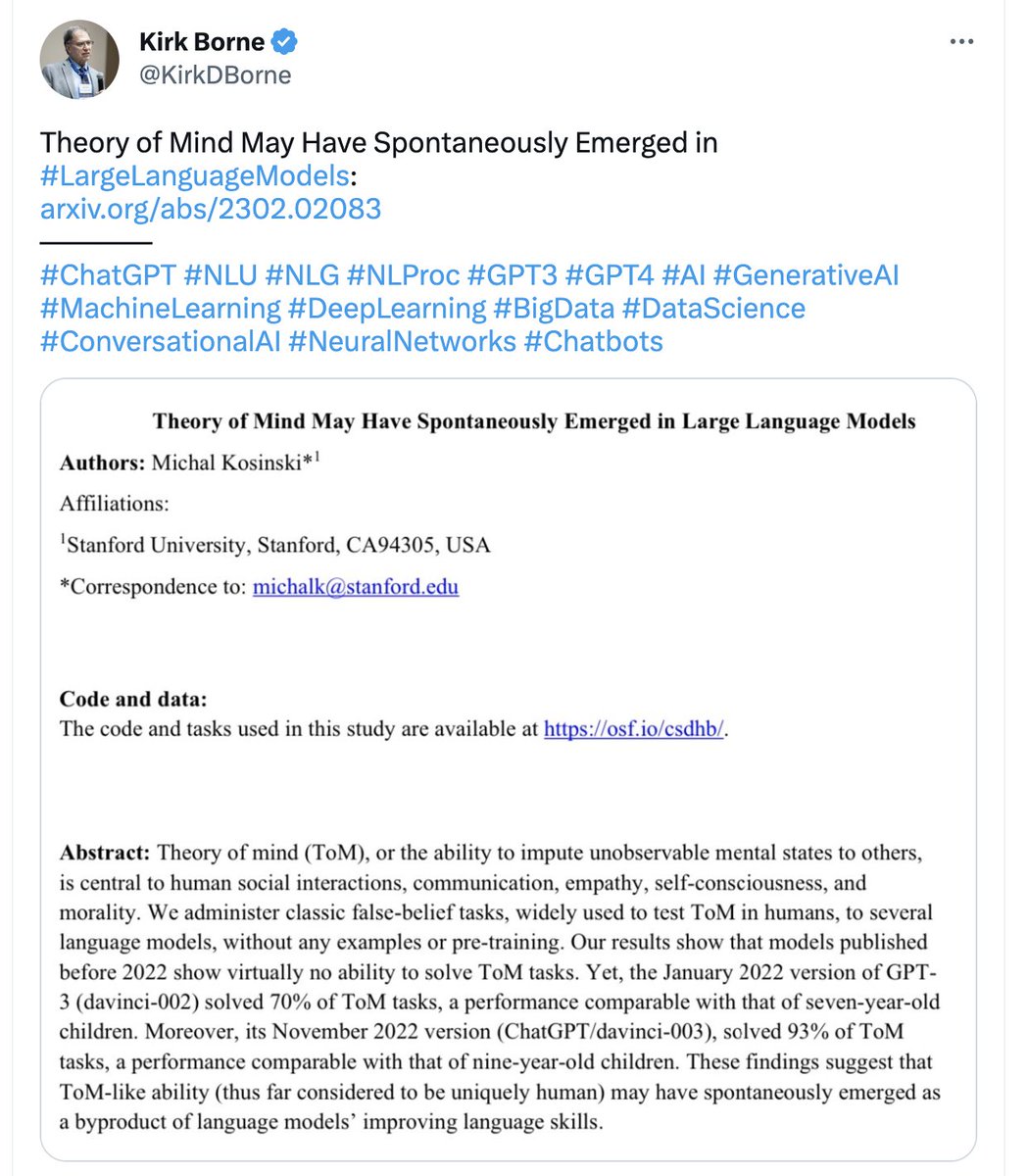

TFW an account with 380k followers tweets out a link to a fucking arXiv paper claiming that "Theory of Mind May Have Spontaneously Emerged in Large Language Models".

#AIHype #MathyMath

That feeling is despair and frustration that researchers at respected institutions would put out such dreck, that it gets so much attention these days, and that so few people seem to be putting any energy into combatting it.

>>

NB: The author of that arXiv (= NOT peer reviewed) paper is the same asshole behind the computer vision gaydar study from a few years ago.

>>

Mixed in with the despair and frustration is also some pleasure/relief at the idea that through Mystery AI Hype Theater 3000 I have an outlet in which I can give this work (and the tweeting about it) the derision it deserves, together with @alexhanna.

>>
