*Decentralized* training will become a formidable force in open-source, large-scale AI development. We need infrastructure that lets LLMs scale as the community scales.

GPT-JT is a great example: it distributes training over a slow network and diverse devices.

1/

GPT-JT-6B is a fork of @AiEleuther’s GPT-J, fine-tuned on 3.53 billion tokens. Its most distinguishing feature is that the training pipeline is distributed over a 1 Gbps network, which is very slow compared to conventional centralized data center networks.

2/
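
To get a feel for why 1 Gbps counts as "very slow", here is a back-of-envelope sketch. It assumes, purely for illustration, a naive scheme that ships one full fp16 copy of a ~6B-parameter model's gradients per exchange, and compares a 1 Gbps link with a hypothetical 100 Gbps data center link. This is not how GPT-JT actually communicates; the function name `transfer_seconds` and the rounded 6e9 parameter count are hypothetical.

```python
def transfer_seconds(num_params: float, bytes_per_param: int, link_gbps: float) -> float:
    """Seconds to send num_params values of bytes_per_param bytes over a link_gbps link."""
    total_bits = num_params * bytes_per_param * 8  # bytes -> bits
    return total_bits / (link_gbps * 1e9)          # Gbps -> bits per second


# Assumption: ~6e9 parameters, 2 bytes each (fp16), one full copy per exchange.
PARAMS = 6e9
for gbps in (1, 100):
    print(f"{gbps:>4} Gbps: {transfer_seconds(PARAMS, 2, gbps):.2f} s")
```

Under these assumptions a single naive exchange takes about 96 s at 1 Gbps versus under 1 s at 100 Gbps. That gap is why decentralized training has to cut communication rather than simply reuse data center recipes.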

This enables geo-distributed computing across cities or even countries. Now everyone can BYOC (“Bring Your Own Compute”) and join the training fleet to contribute to open-source development. The scheduling algorithm makes no assumptions about device types.

3/

Blog from @togethercompute: together.xyz/blog/releasing…

Paper “Decentralized Training of Foundation Models in Heterogeneous Environments”: arxiv.org/abs/2206.01288

Authors: @Hades317, @Yong_jun_He, Jared Quincy Davis, @Tianyi_Zh, @tri_dao, @BeidiChen, @percyliang, Chris Re, Ce Zhang

Here’s another success story of decentralized & democratized AI training:

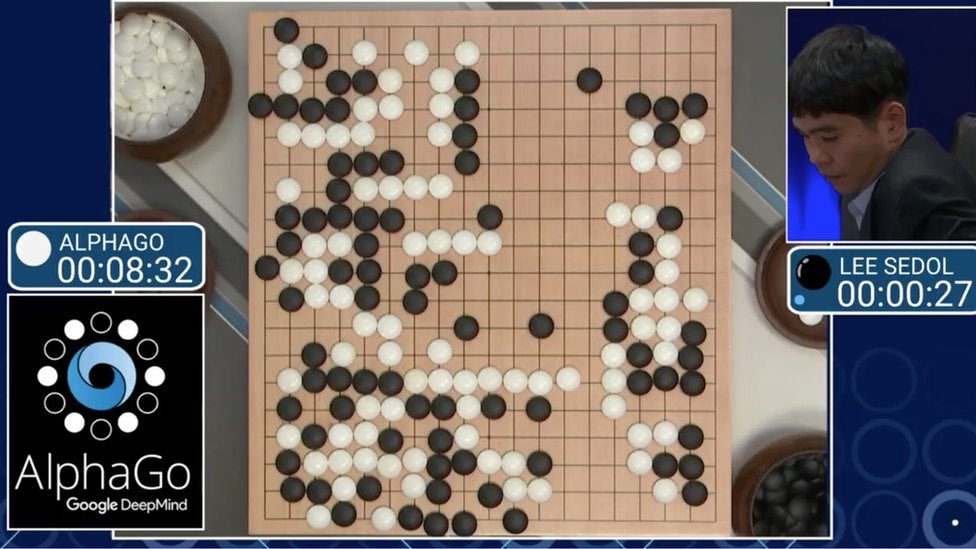

Leela Zero, the community effort to reproduce the mighty AlphaGo and AlphaZero for Go and chess.

https://twitter.com/drjimfan/status/1627354160529285120

Open training is awesome; open-sourcing ideas is even better ;)

Express thread to my past writings:

https://twitter.com/DrJimFan/status/1622637571431092224