Something that surprised me last year regarding LLMs was their ability to do mathematics well. I now suspect that mathematics is not much harder for computers to understand than ordinary natural language documents. This has pretty interesting implications. 🧵

I was previously too anchored to statements that researchers made about how we weren't making progress.

For example: "While scaling Transformers is automatically solving most other text-based tasks, scaling is not currently solving MATH."

arxiv.org/abs/2103.03874

One thing I didn't understand was that simply letting models "think longer" produces much better results. It seems obvious in hindsight, but I didn't realize the effect was so large.

arxiv.org/abs/2211.14275…

I suspect most mathematically inclined people are not fully aware of how dramatically LLMs could change how mathematics is done in the coming decade.

Take the problem of proof verification. Right now, almost all mathematicians do their work informally. That is, they write their proofs using informal notation, rather than via a proof assistant.

en.wikipedia.org/wiki/Proof_ass…
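
To make the contrast concrete, here is a minimal sketch of what a machine-checked proof can look like, assuming Lean 4 as the proof assistant (my choice for illustration). The informal claim is "the sum of two even numbers is even". The theorem name `even_add_even` and the spelled-out definition of evenness are hypothetical, chosen only to keep the example free of library dependencies.

```lean
-- Informal claim: the sum of two even natural numbers is even.
-- "Even" is written out as "equal to 2 * k for some k" (an assumption made
-- here to avoid depending on any library definition of evenness).
theorem even_add_even (m n : Nat)
    (hm : ∃ a, m = 2 * a) (hn : ∃ b, n = 2 * b) :
    ∃ c, m + n = 2 * c := by
  cases hm with
  | intro a ha =>
    cases hn with
    | intro b hb =>
      -- Witness: c = a + b. After substituting m and n, the goal follows
      -- from distributivity of multiplication over addition.
      exact ⟨a + b, by rw [ha, hb, Nat.mul_add]⟩
```

Even a one-line informal argument needs explicit witnesses and rewriting steps here, which is a large part of why most mathematicians don't work this way today.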

This practice is somewhat problematic because it's hard to verify informal mathematical proofs. Papers supposedly proving P!=NP are routinely submitted to journals, including by authors with reputable credentials, but it is burdensome to formally verify these claims.

However, LLMs should soon become far more adept at converting informal mathematics into machine-verifiable code. That means that it may soon become possible to quickly verify whether a controversial proof is valid, helping us filter cranks from geniuses.

Unlike other uses for LLMs, mathematics is not as prone to problems of model hallucination. That's because a proof assistant can efficiently reject an invalid, hallucinated proof.

arxiv.org/abs/2210.12283
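
A tiny illustration of that filtering, again assuming Lean 4 (the statements are my own toy examples, not taken from the linked paper): the first line is accepted by the checker, while the second, left commented out so the snippet still compiles, would be rejected no matter how confidently a model asserted it.

```lean
-- Accepted: both sides evaluate to the same numeral, so `rfl` type-checks.
example : 2 + 2 = 4 := rfl

-- Rejected if uncommented: `rfl` cannot prove a false equation,
-- so the checker reports an error instead of accepting the "proof".
-- example : 2 + 2 = 5 := rfl
```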

In the longer term, LLMs will outcompete mathematicians outright. Predictors on Metaculus currently expect it will not be very long before we have AI that can get a perfect score on what may be the hardest math competition in the world.

metaculus.com/questions/1167…

When LLMs outperform top mathematicians at proof-generation, professional mathematics will likely become more like recreational mathematics. Humans may still contribute, but their contributions will rarely be seen as groundbreaking.

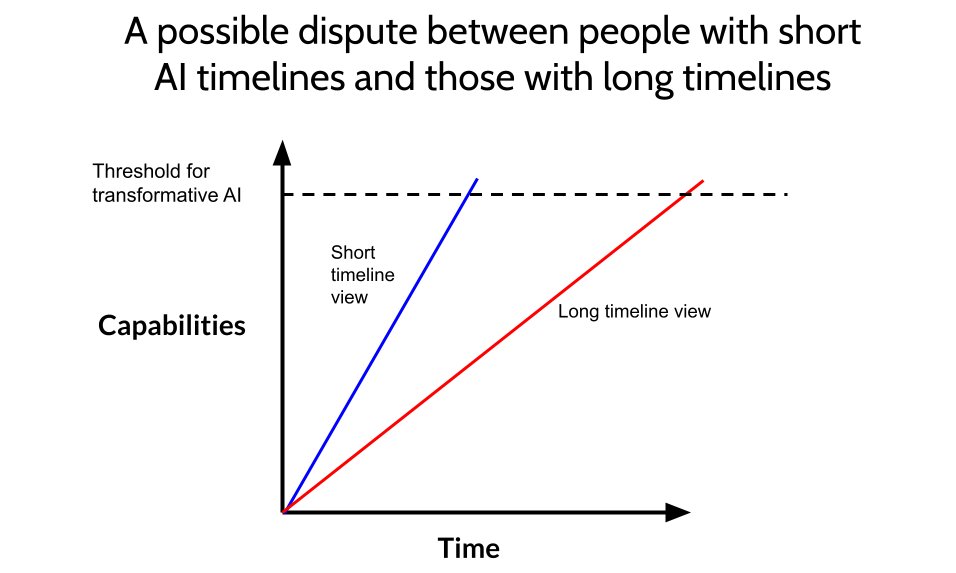

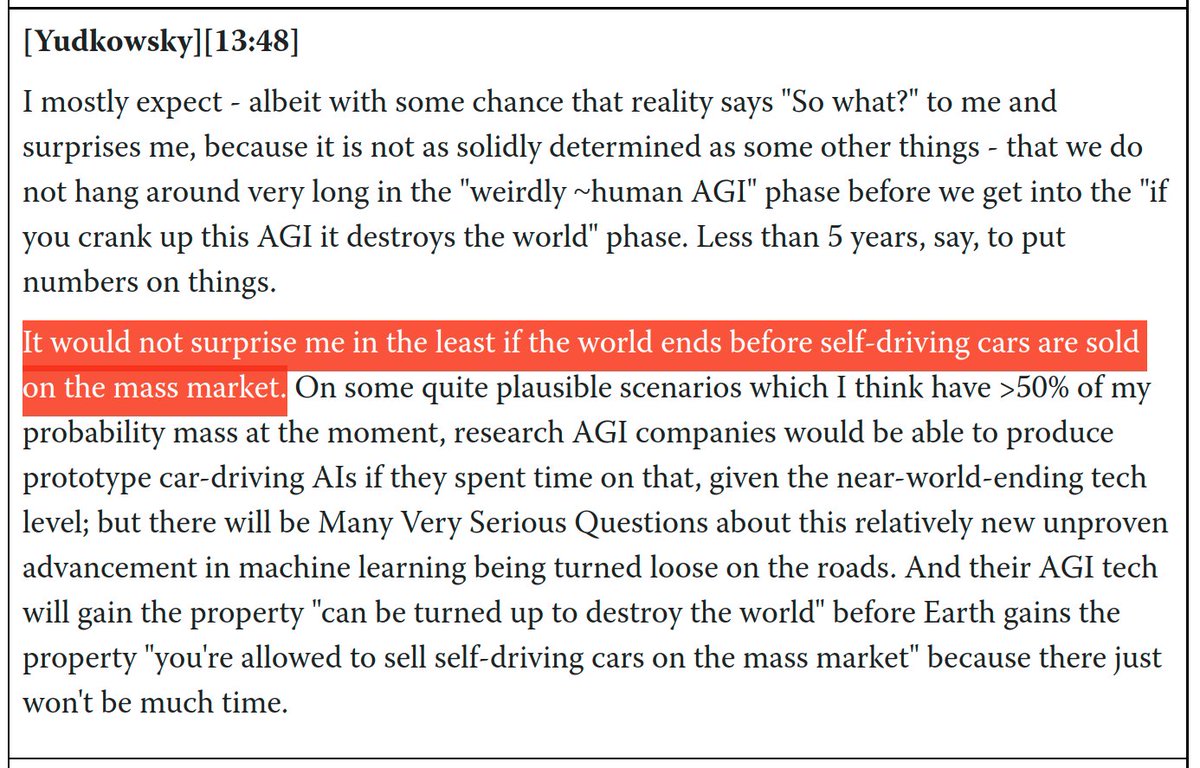

Some are worried about even more dramatic implications of mathematician AIs. For example, some people seem to think that when computers outperform human mathematicians, they'll be capable of rapid recursive self-improvement.

I'm not so sure about these claims. I don't currently think machine learning progress is severely bottlenecked by mathematical talent. I suspect progress is more bottlenecked by experimentation.

But I don't know. What would the world look like if the best mathematicians in the world were computer programs that we could summon by paying a few dollars to access an API?
