Co-founder of @MechanizeWork

Married to @natalia__coelho

email: matthew at mechanize dot work

https://twitter.com/EpochAIResearch/status/1854993676524831046

The first thing to understand about FrontierMath is that it's genuinely extremely hard. Almost everyone on Earth would score approximately 0%, even if they were given a full day to solve *each* problem. For fun, here's what a few people on Reddit said after looking at the problems.

What's remarkable about this milestone is that it could have been forecast many decades ago. One paper reviewed estimates of the size of the human brain over 150 years and found that "Overall... most of the [estimates] are relatively consistent."

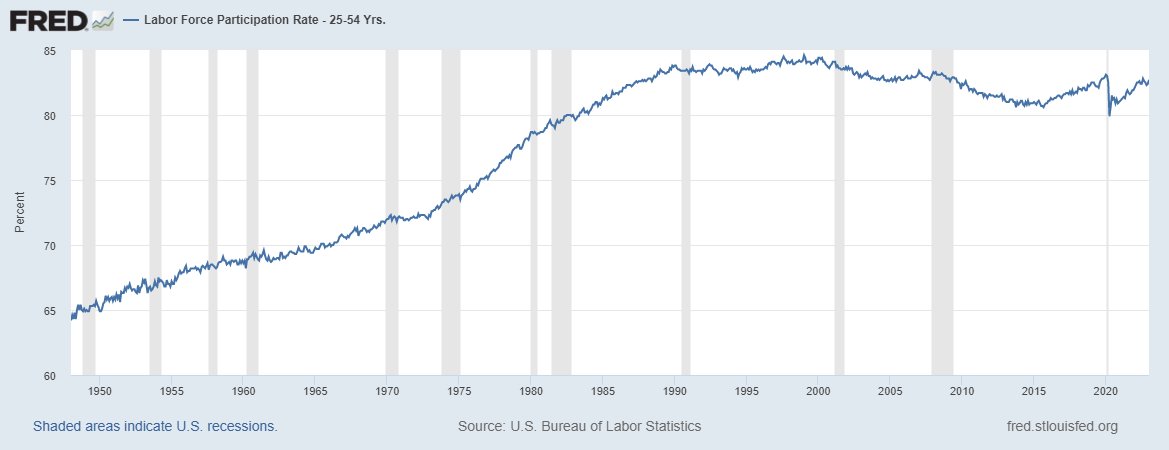

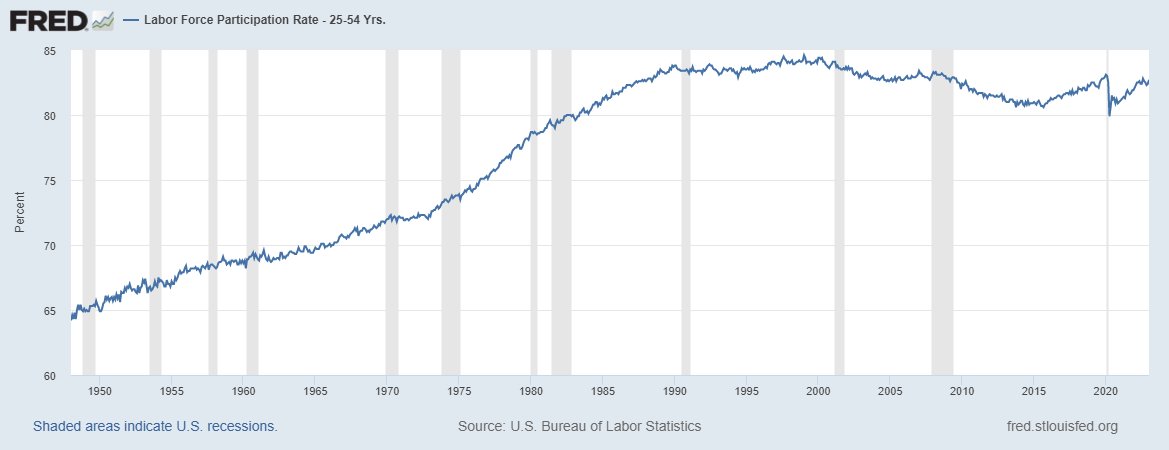

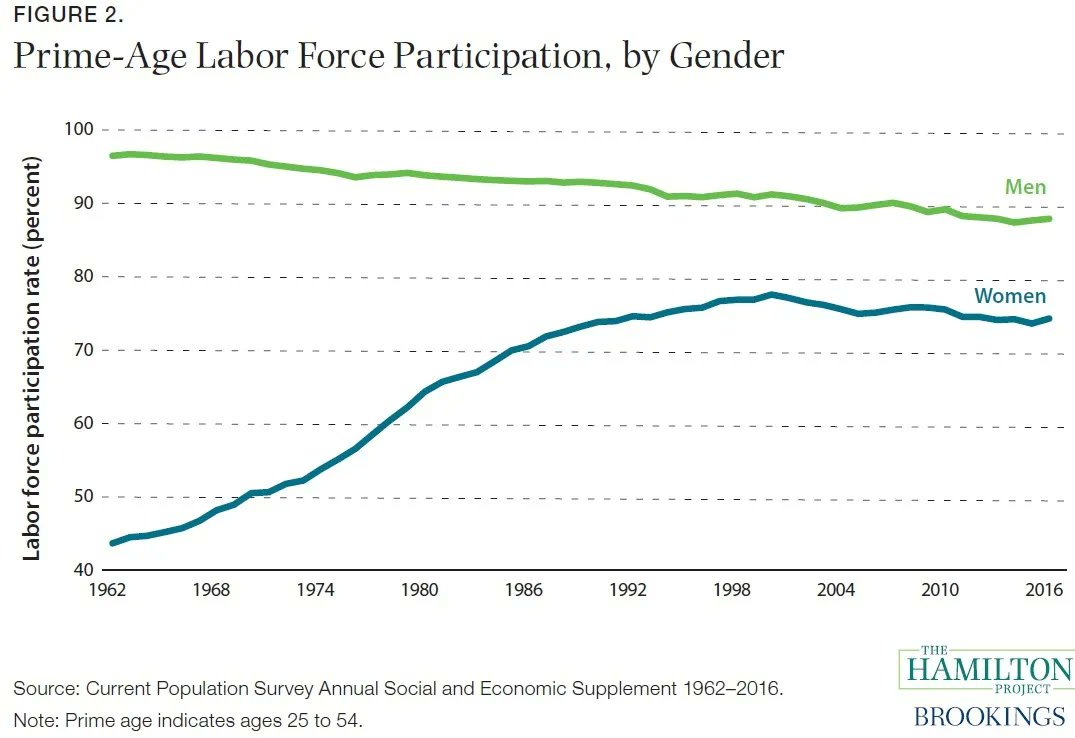

https://twitter.com/JosephPolitano/status/1632029149828202496

It's true, for example, that the prime-age labor force participation rate in the United States rose over the last 70 years, peaking in the 1990s. However, this mostly reflects a shift from women doing non-market labor to holding formal jobs.

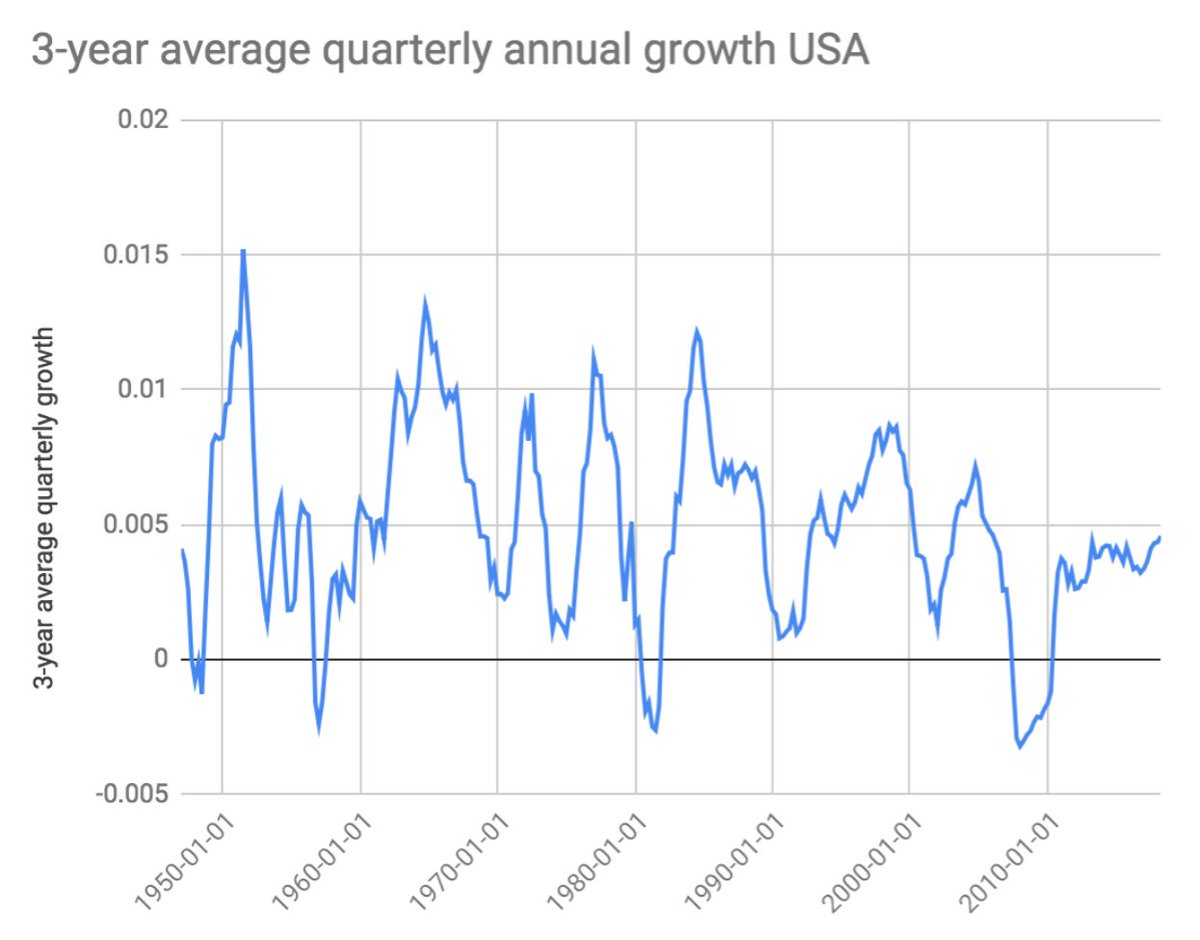

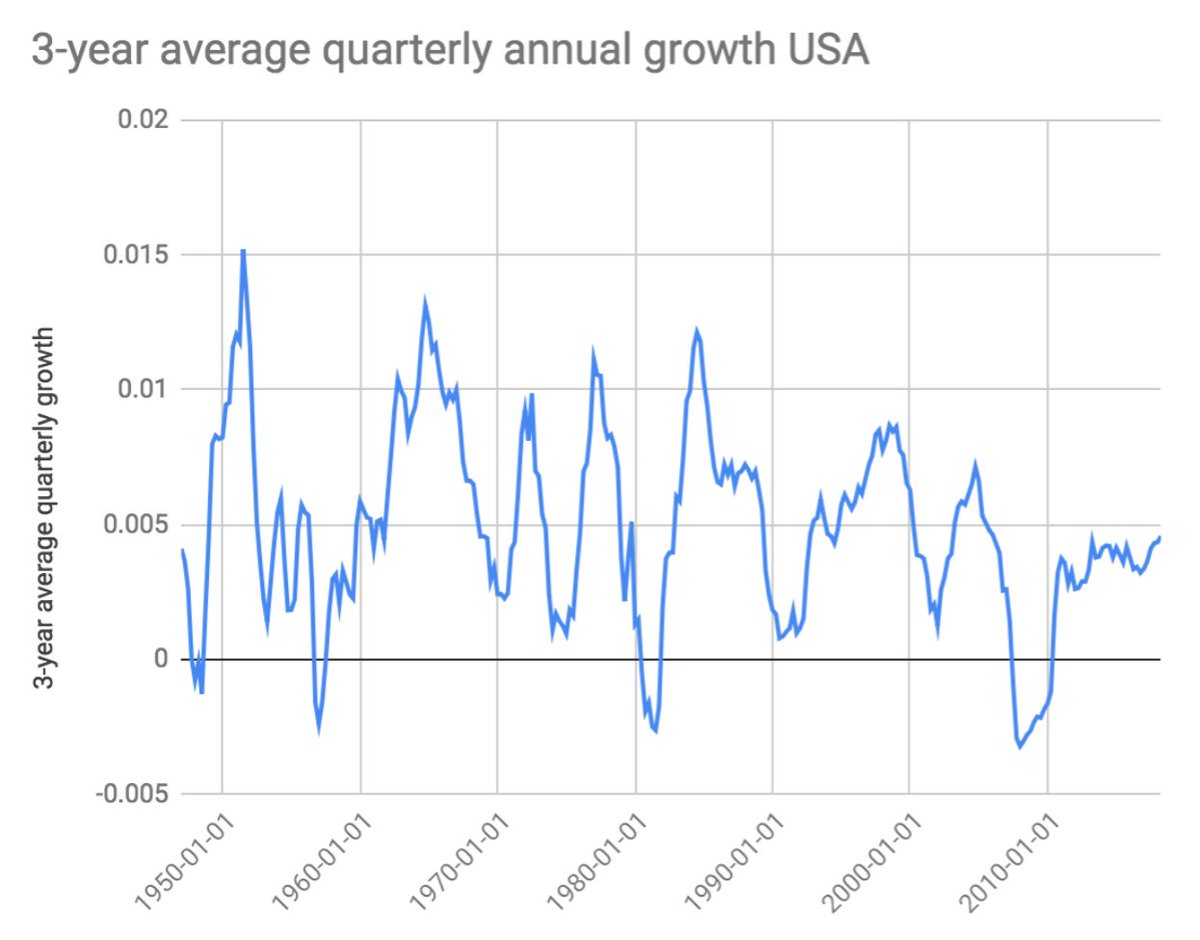

https://twitter.com/JgaltTweets/status/1618783360608006145

The great stagnation theory is the idea that economic progress has been slowing down in recent decades. It has often been assumed to be true on the basis of GDP statistics, which show declining rates of per-capita economic growth in recent times.

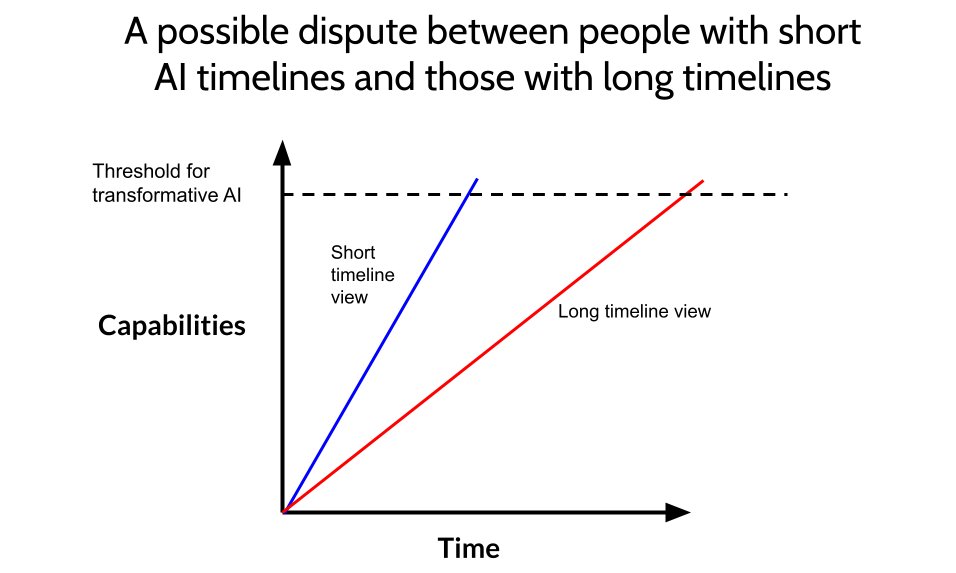

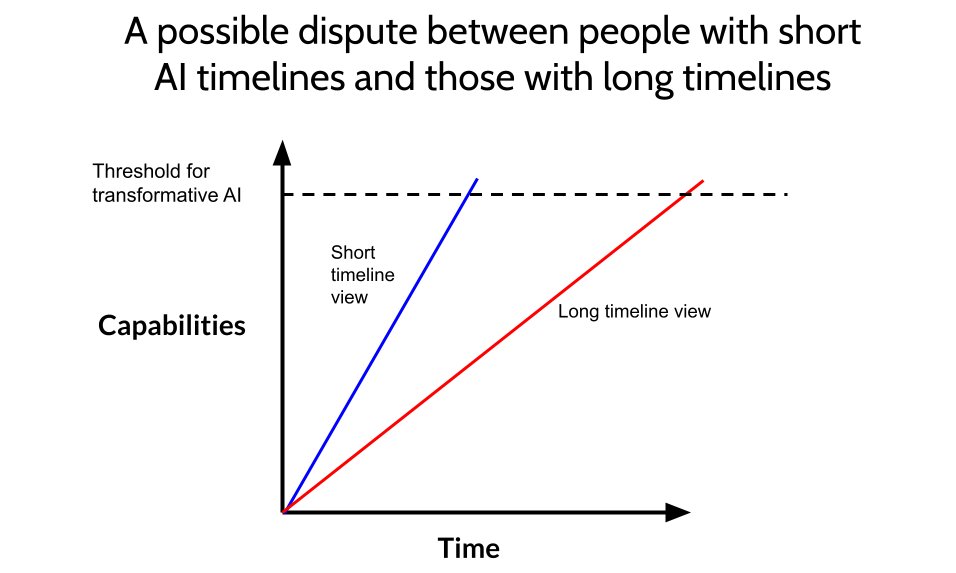

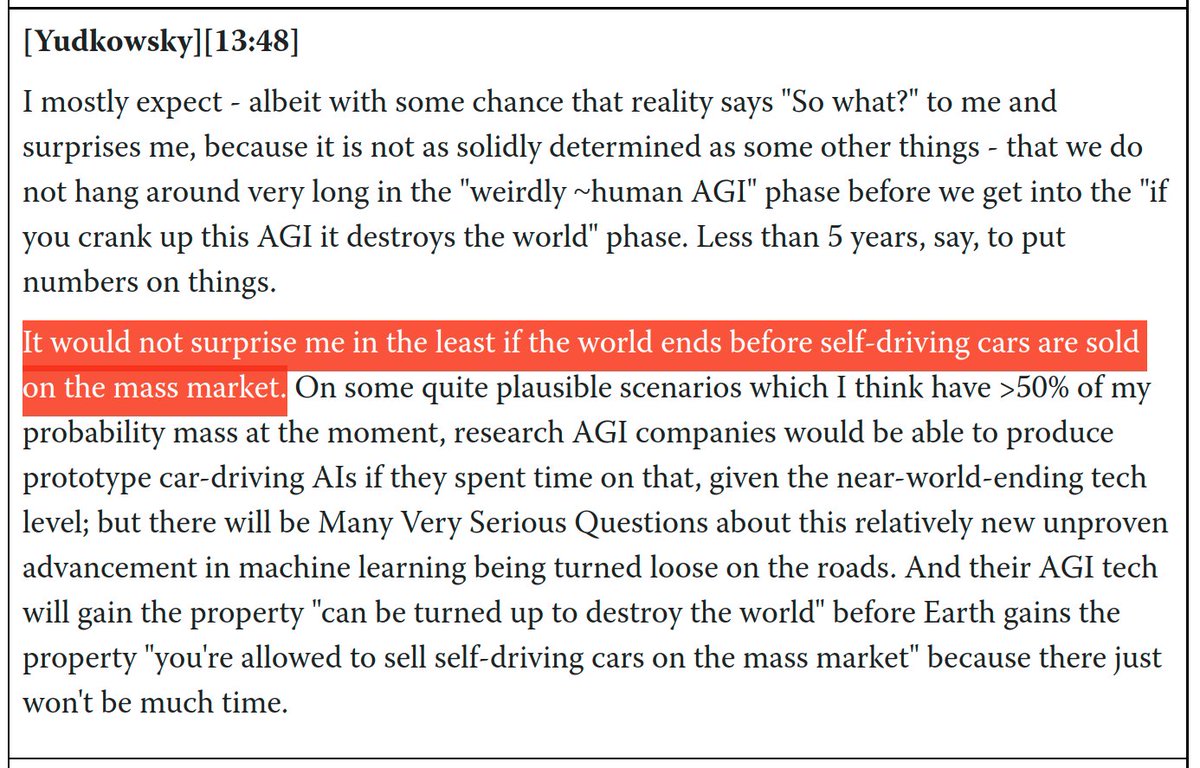

This disagreement manifests, for example, in Eliezer Yudkowsky's statement that it would "not surprise [him] in the least" if AGI is created and destroys the world before consumers are able to purchase self-driving cars.

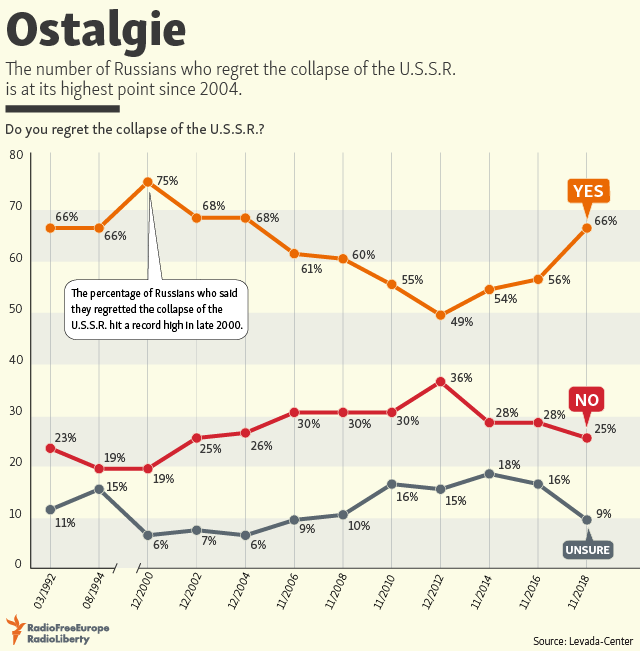

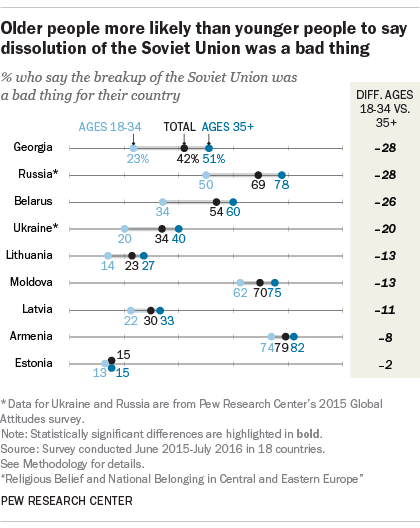

The data is likely driven by nostalgia: older people are more likely to regret the fall of communism.

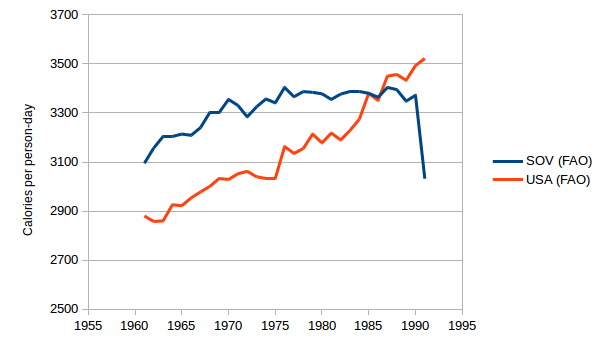

It is true that the Soviet Union experienced extreme famine in the 1930s. The most famous incident was the Holodomor, a famine in Ukraine that most scholars believe was partially intentional.