I see some folks starting to use the “TESCREAL” acronym. So, here’s a short thread on what it stands for and why it’s important. 🧵

https://twitter.com/athundt/status/1635151131247808512

Consider the following line from a recent NYT article by Ezra Klein. He’s talking about people who work on “AGI,” or artificial general intelligence. He could have just written: “Many—not all—are deeply influenced by the TESCREAL ideologies.”

Where did “TESCREAL” come from? Answer: a paper that I coauthored with the inimitable @timnitgebru, which is currently under review. It stands for “transhumanism, extropianism, singularitarianism, cosmism, Rationalism, Effective Altruism, and longtermism.”

Incidentally, these ideologies emerged, historically, in roughly that order.

There are at least four reasons for grouping them together as a single “bundle” of ideologies. First, all trace their lineage back to the first-wave Anglo-American eugenics tradition.

I touched on this a bit in a recent article for @Truthdig. Transhumanism was developed by eugenicists, and the idea dates back at least to a 1927 book revealingly titled “Religion Without Revelation.” Transhumanism was introduced as a secular religion.

truthdig.com/dig/nick-bostr…

The first organized transhumanist movement appeared in the late 1980s and early 1990s. It was called “extropianism,” inspired by the promise that advanced tech could enable us to become radically enhanced posthumans. This is from a 1994 Wired article about the Extropians:

Reason two for conceptualizing TESCREAL ideologies as a bundle: their communities overlap both across time and contemporarily. All extropians were transhumanists; the leading cosmist was an extropian; many rationalists are transhumanists, singularitarians, longtermists, and EAs;

longtermism was founded by transhumanists and EAs; and so on. The sociological overlap is extensive. Many who identify as one of the letters in “TESCREAL” also identify as others. It thus makes sense to talk about the “TESCREAL community.”

Third reason: as this suggests, the worldviews of these ideologies are interlinked. Underlying all is a kind of techno-utopianism + a sense that one is genuinely saving the world. Over and over again, you find talk of “saving the world” among transhumanists, Rationalists, EAs,

and longtermists. Here's an example from Luke Muehlhauser, who works for the EA organization Open Philanthropy, leading their "grantmaking on AI governance and policy." He used to work for the Peter Thiel-funded Machine Intelligence Research Institute.

The vision is to subjugate the natural world, maximize economic productivity, create digital consciousness, colonize the accessible universe, build planet-sized computers on which to run virtual-reality worlds full of 10^58 digital people, and generate “astronomical” amounts of

![Astronomical Waste: The Opportunity Cost of Delayed Technological Development NICK BOSTROM Oxford University http://www.nickbostrom.com [Utilitas Vol. 15, No. 3 (2003): pp. 308-314] [pdf] [translations: Russian, Portuguese, Bosnian] ABSTRACT. With very advanced technology, a very large population of people living happy lives could be sustained in the accessible region of the universe. For every year that development of such technologies and colonization of the universe is delayed, there is therefore an opportunity cost: a potential good, lives worth living, is not being realized. Given som...](https://pbs.twimg.com/media/FrHK_htWAAE1Y9H.jpg)

“value” by exploiting, plundering, and colonizing. However, TESCREALists also realize that the very same tech needed to create Utopia carries unprecedented risks to our very survival. Hence, as with most religions, there’s an apocalyptic element to their vision as well:

the tech that could "save" us might also destroy us. This is why transhumanists and longtermists introduced the term “existential risk.” Nonetheless, the utopia they imagine is so tantalizingly good that they believe putting the entire human species at risk by plowing ahead with

AGI research is worth it. (Imagine: eternal life! Unending pleasures! Superhuman mental abilities! No, I am not making this up—read “Letter from Utopia,” a canonical piece of the longtermist literature.)

nickbostrom.com/utopia

The fourth reason is the most frightening: the TESCREAL ideologies are HUGELY influential among AI researchers. And since AI is shaping our world in increasingly profound ways, it follows that our world is increasingly shaped by TESCREALism! Pause on that for a moment. 😰

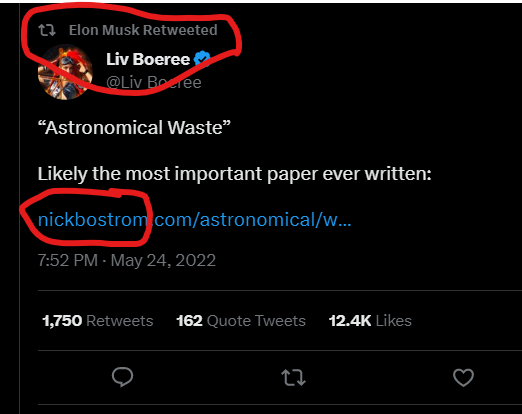

Elon Musk, for example, calls longtermism “a close match for my philosophy,” and retweeted one of the founding documents of longtermism by the (totally not racist!) transhumanist Nick Bostrom, who’s lionized by Rationalists and EAs.

Musk’s company Neuralink is essentially a transhumanist organization hoping to "kickstart transhuman evolution." 👇 Note that transhumanism is literally classified by philosophers as a form of so-called "liberal eugenics."

futurism.com/elon-musk-is-l…

Sam Altman, who runs OpenAI, acknowledges that getting AGI right is important because “galaxies” are at stake. That’s a reference to the TESCREAL vision outlined above: colonize, subjugate, exploit, and maximize. "More is better," as William MacAskill writes.

Gebru and I point out that the “AI race” to create ever larger LLMs (like ChatGPT) is, meanwhile, causing profound harms to actual people in the present. It’s further concentrating power in the hands of a few white dudes—the tech elite. It has an enormous environmental footprint.

And the TESCREAL utopianism driving all this work doesn’t represent, in any way, what most people want the future to look like. This vision is being *imposed* upon us, undemocratically, by a small number of super-wealthy, super-powerful people in the tech industry who genuinely

believe that a techno-utopian world of immortality and “surpassing bliss and delight” (quoting Bostrom) awaits us. Worse, they often use the language of social justice to describe what they’re doing: it’s about “benefitting humanity,” they say, when in reality it’s hurting people

right now and there’s absolutely ZERO reason to believe that once they create “AGI” (if it’s even possible) this will somehow magically change. If OpenAI cared about humanity, it wouldn’t have paid Kenyan workers as little as $1.32 an hour: time.com/6247678/openai…

The “existential risk” here *IS* the TESCREAL bundle. You should be afraid of these transhumanists, Rationalists, EAs, and longtermists claiming that they’re benefiting humanity. They're not. They're elitist, power-hungry eugenicists who don’t care about anything but their Utopia.

The framework of the “TESCREAL bundle” thus helps make sense of what’s going on—of why OpenAI, DeepMind, etc. are so obsessed with “AGI,” of why there’s an AI race to create ever larger LLMs. If the question is “What the f*ck?,” the answer is “TESCREALism.” 🤬

I forgot to add: if you'd like to read more on the harms of this AI race, check out this excellent and important article by Timnit Gebru, @emilymbender, and @mcmillan_majora.

dl.acm.org/doi/pdf/10.114…
