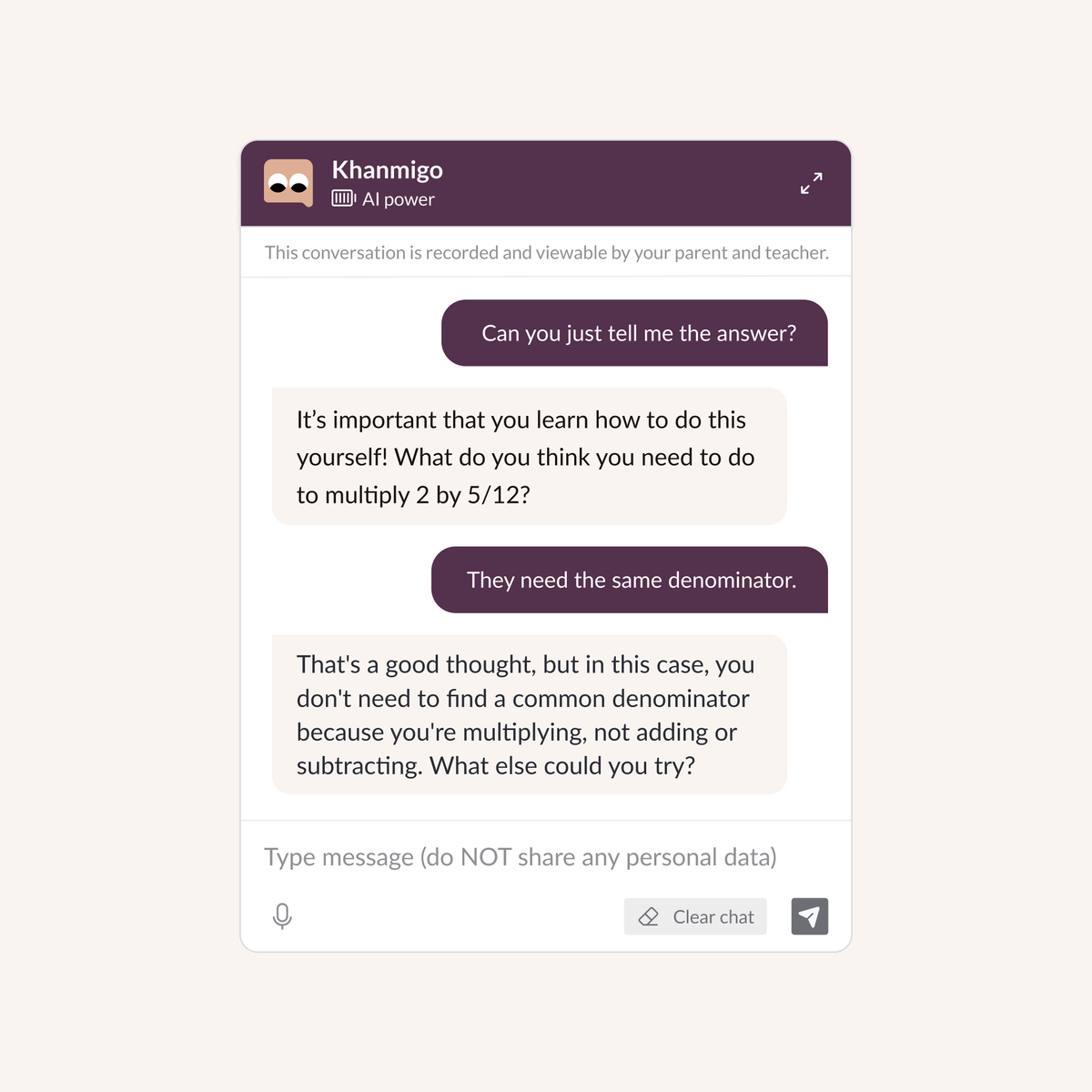

Early on, we got the model to ask the student questions, but it also wanted to answer them. If the student gave the first step, the model then gave the rest of the steps and the answer. We wrote prompts that yelled at the model: DO NOT GIVE THE STUDENT THE ANSWER! 2/6

We told it to be encouraging to the student. It started telling the student “that’s right!” even when the student was wrong. We worked that out. 3/6

We went back and read the research by Micki Chi and @autotutor on the specific moves human tutors make. To make it better at math, we created over 100 examples of the kinds of interactions we wanted and worked with OpenAI on those. 4/6

We got better at prompt engineering, and the model became more steerable (better at taking direction from the prompts). We experimented with ways to get it to check its math. 5/6

We are not done. We need to see how more real students in real classrooms interact with it, where it continues to make mistakes, and how we can guide it toward even better tutoring behavior. Stay tuned. 6/6
