🧹Tidier.jl 0.6.0 is available on the #JuliaLang registry.

What’s new?

- New logo!

- distinct()

- n(), row_number() work *everywhere*

- `!` for negative selection

- pivoting functions are better

- bug fixes to mutate() and slice()

Docs: kdpsingh.github.io/Tidier.jl/dev/

A short tour.

What’s new?

- New logo!

- distinct()

- n(), row_number() work *everywhere*

- `!` for negative selection

- pivoting functions are better

- bug fixes to mutate() and slice()

Docs: kdpsingh.github.io/Tidier.jl/dev/

A short tour.

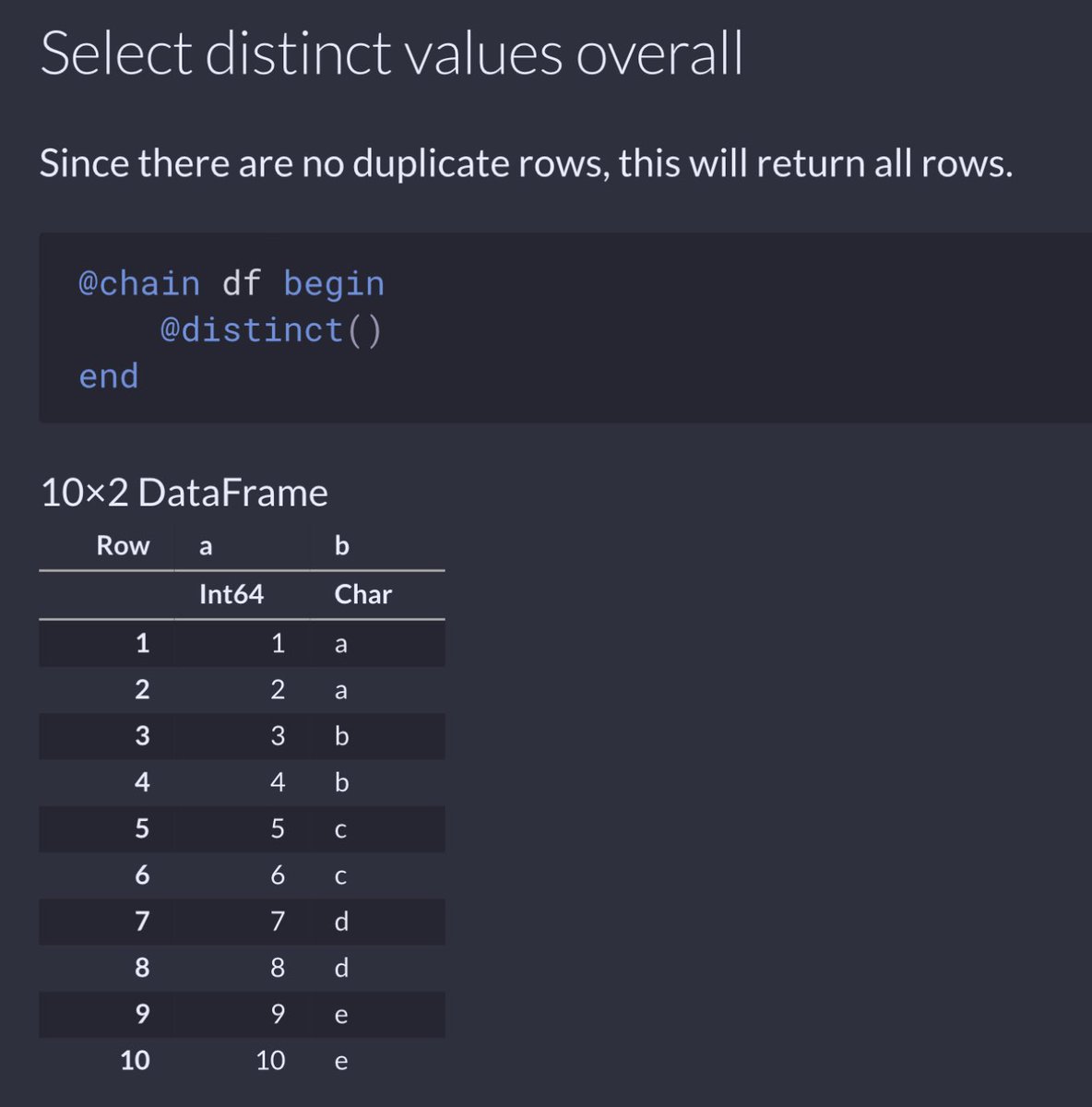

If you use distinct() without any arguments, it behaves just like the #rstats {tidyverse} distinct().

It checks if rows are unique, and returns all columns just as you would expect.

It checks if rows are unique, and returns all columns just as you would expect.

If you use distinct() with arguments as shown here, then it returns all columns for unique values of the supplied column.

This is slightly different behavior than {tidyverse} distinct(), but I kind of like it. Can easily pair this with select() to mimic dplyr behavior.

This is slightly different behavior than {tidyverse} distinct(), but I kind of like it. Can easily pair this with select() to mimic dplyr behavior.

Another thing that’s new is the helper function n().

While seemingly simple, implementing this was fairly difficult. When used inside of summarize/summarise, it behaves just like DataFrames.jl’s nrow() function.

So far, so good.

While seemingly simple, implementing this was fairly difficult. When used inside of summarize/summarise, it behaves just like DataFrames.jl’s nrow() function.

So far, so good.

However, n() — and its counterpart row_number() — also work inside of mutate(), where nrow() from DataFrames.jl isn’t as straightforward to use, particularly inside of an expression (e.g. n() + 1).

n() provides a standard interface from all functions.

n() provides a standard interface from all functions.

You can even use n() inside of slice().

To select the last 2 rows of a dataframe?

slice(n() - 1 : n()) — notice the order of operations is slightly different from R bc the `-` takes precedence over the `:` so no need for extra `()`.

Otherwise, this is exactly like R tidyverse.

To select the last 2 rows of a dataframe?

slice(n() - 1 : n()) — notice the order of operations is slightly different from R bc the `-` takes precedence over the `:` so no need for extra `()`.

Otherwise, this is exactly like R tidyverse.

And when the update says that n() and row_number() work *everywhere*, it’s really true.

You can even use row_number() inside of filter() to mimic the functionality provided by slice(), just like in R.

You can even use row_number() inside of filter() to mimic the functionality provided by slice(), just like in R.

A hearty thanks to @PVDimens @randyboyes @zzhaozhe @PietroMonticone @Gaspardelanoche @LazarusAlon for contributions to Tidier.jl, to many others in the #JuliaLang community for code, blogs, and inspiration, and to #rstats and @posit_pbc colleagues for the tidyverse ecosystem! 🙏

And finally, ICYMI, here’s the new logo thanks to @PVDimens!

• • •

Missing some Tweet in this thread? You can try to

force a refresh