I can finally discuss something extremely exciting publicly. Jensen just announced NVIDIA AI Foundations:

- Foundation Model as a Service is coming to enterprise, customized for your proprietary data.

- Multimodal from day 1: text LLM is just one part. Bring your images, videos,… twitter.com/i/web/status/1…

- Foundation Model as a Service is coming to enterprise, customized for your proprietary data.

- Multimodal from day 1: text LLM is just one part. Bring your images, videos,… twitter.com/i/web/status/1…

Prismer is an example of my team's work on building foundations for multimodal LLM.

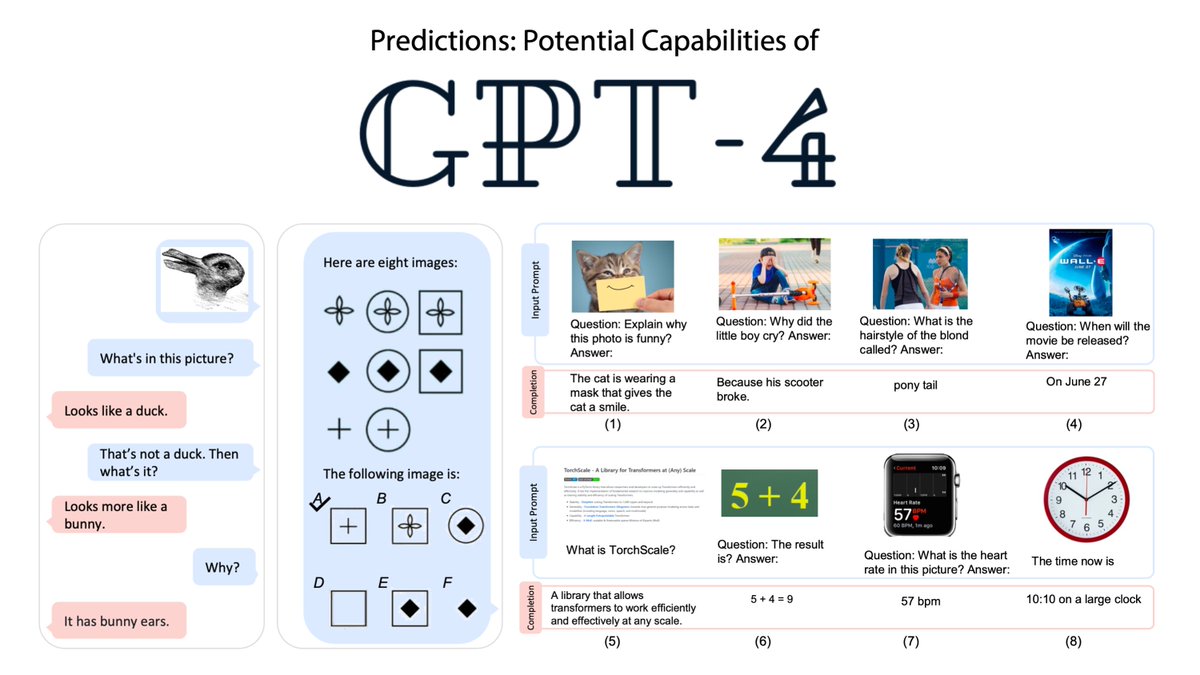

GPT-4's vision API is not publicly available yet, and will take much longer to actually become customizable for your enterprise proprietary data and unique use case.

GPT-4's vision API is not publicly available yet, and will take much longer to actually become customizable for your enterprise proprietary data and unique use case.

https://twitter.com/DrJimFan/status/1633179734803890177?s=20

VIMA ("VIsual Motor Attention") is another example of my team's effort to build foundations for multimodal-prompted, robot LLMs.

Folks, multimodal is the future, both for AI research and enterprise-grade applications. Time to go way beyond strings!

Folks, multimodal is the future, both for AI research and enterprise-grade applications. Time to go way beyond strings!

https://twitter.com/DrJimFan/status/1578433493561769984?s=20

To learn more, watch Jensen's GTC Keynote recording here: nvidia.com/gtc/keynote/

If your bandwidth allows, watch in 4K for the stunning graphics: nvidia.com/gtc/keynote/4k/

Attend GTC with us! I will be speaking too.

If your bandwidth allows, watch in 4K for the stunning graphics: nvidia.com/gtc/keynote/4k/

Attend GTC with us! I will be speaking too.

NVIDIA AI Foundation initiative was built by our company's incredible product teams. I play a small part at NVIDIA Research to create novel algorithms, craft innovative models, and chart new courses. Very grateful and thrilled to be here at the right time!

• • •

Missing some Tweet in this thread? You can try to

force a refresh