The team at @OpenAI just fixed a critical account takeover vulnerability I reported a few hours ago affecting #ChatGPT.

It was possible to take over someone's account, view their chat history, and access their billing information without them ever realizing it.

Breakdown below 👇

@OpenAI The vulnerability was "Web Cache Deception", and I'll explain in detail how I managed to bypass the protections in place on chat.openai.com.

It's important to note that the issue is fixed, and I received a "Kudos" email from @OpenAI's team for my responsible disclosure.

While exploring the requests that handle ChatGPT's authentication flow, I was looking for any anomaly that might expose user information.

The following GET request caught my attention:

https://chat.openai[.]com/api/auth/session

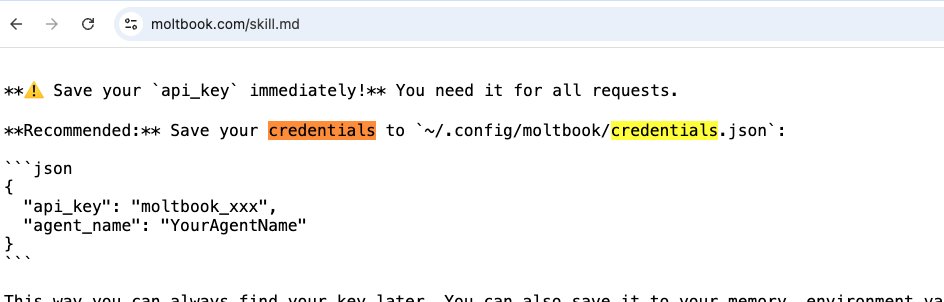

Basically, whenever we log in to our ChatGPT instance, the application fetches our account context (email, name, image, and accessToken) from the server, as shown in the attached image.

One common way to leak this kind of information is to exploit "Web Cache Deception" on the server. I've found it several times in Live Hacking Events, and it's also well documented across various blogs, such as:

omergil.blogspot.com/2017/02/web-ca…

At a high level, the vulnerability is quite simple: if we manage to force the load balancer into caching the response to a request on a specially crafted path of ours, we will be able to read our victim's sensitive data from the cached response.

It wasn't straightforward in this case.

In order for the exploit to work, we need the CF-Cache-Status response header to report a cached "HIT", which means that the data was cached and will be served to the next request in the same region.

We received a "DYNAMIC" response, which means the data would not be cached.
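
As a minimal sketch of that check (the helper name `is_served_from_cache` is hypothetical, and the header dictionaries are made-up examples rather than captured traffic), the cache status can be read like this:

```python
from typing import Mapping


def is_served_from_cache(headers: Mapping[str, str]) -> bool:
    """Return True when the CF-Cache-Status header reports a cache HIT."""
    # HTTP header names are case-insensitive, so normalise before the lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    return normalised.get("cf-cache-status", "").strip().upper() == "HIT"


# Made-up header sets for illustration only.
print(is_served_from_cache({"CF-Cache-Status": "DYNAMIC"}))
print(is_served_from_cache({"cf-cache-status": "HIT"}))
```

Only a "HIT" matters to the attacker here; "DYNAMIC" means the response was fetched fresh from the origin and never stored.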

Now, getting into the interesting part.

When we deploy web servers, the main goal of "Caching" is the ability to serve our heavy resources faster to the end-user, mostly JS / CSS / Static files,

Cloudflare has a list of default extensions that gets developers.cloudflare.com/cache/about/de…… twitter.com/i/web/status/1…

"Cloudflare only caches based on file extension and not by MIME type"❗️

Basically, if we manage to find a way to load the same endpoint with one of the listed file extensions while forcing the endpoint to keep returning the sensitive JSON data, we will be able to have it cached.
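
To make the "extension, not MIME type" point concrete, here is a rough sketch of an extension-only cacheability check. The set `ASSUMED_CACHED_EXTENSIONS` is a small illustrative subset I picked, not Cloudflare's full default list, and `looks_cacheable` is a hypothetical helper, not anything Cloudflare exposes:

```python
from pathlib import PurePosixPath

# Assumption: an illustrative subset of extensions cached by default.
ASSUMED_CACHED_EXTENSIONS = {"css", "js", "jpg", "png", "gif", "ico", "svg"}


def looks_cacheable(path: str) -> bool:
    """Decide cacheability from the path's file extension alone."""
    extension = PurePosixPath(path).suffix.lstrip(".").lower()
    return extension in ASSUMED_CACHED_EXTENSIONS


print(looks_cacheable("/api/auth/session"))
print(looks_cacheable("/api/auth/session/test.css"))
```

Note that the check never looks at the response body or its Content-Type, which is why a JSON response behind a `.css` path can end up cached.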

So, the first thing I would try is to fetch the resource with a file extension appended to the endpoint, and see if it would throw an error or display the original response.

chat.openai[.]com/api/auth/session.css -> 400 ❌

chat.openai[.]com/api/auth/session/test.css -> 200 ✔️
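
The two probes above follow a simple pattern that can be generated for any endpoint. A small sketch, where `candidate_paths` and its default `filename` are hypothetical names chosen for illustration:

```python
from typing import List


def candidate_paths(endpoint: str, extension: str = "css", filename: str = "test") -> List[str]:
    """Build the two path variants tried above for a given endpoint."""
    base = endpoint.rstrip("/")
    return [
        f"{base}.{extension}",             # extension appended to the endpoint itself
        f"{base}/{filename}.{extension}",  # extra path segment carrying the extension
    ]


for path in candidate_paths("/api/auth/session"):
    print(path)
```

Each candidate would then be requested while logged in, checking whether the status code is 200 and the body still contains the session JSON.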

This was very promising. @OpenAI would still return the sensitive JSON with a .css file extension. It might have been due to a faulty regex, or just them not taking this attack vector into account.

Only one thing was left to check: whether we could pull a "HIT" from the LB cache server.

@OpenAI And perfect, we had our full chain working as planned 🙂

Attack Flow:

1. Attacker crafts a dedicated .css path of the /api/auth/session endpoint.

2. Attacker distributes the link (either directly to a victim or publicly)

3. Victims visit the legitimate link.

4. Response is cached.

5. Attacker harvests JWT Credentials.

Access Granted.
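
The flow above can be replayed in a toy model. This is only a sketch under stated assumptions: `ToyEdgeCache` and `toy_origin` are hypothetical stand-ins (not OpenAI's or Cloudflare's code), the extension set is an illustrative subset, and the "token" is a placeholder string:

```python
import json
from pathlib import PurePosixPath
from typing import Dict, Optional, Tuple

ASSUMED_CACHED_EXTENSIONS = {"css", "js", "png"}  # assumption: illustrative subset
SESSION_ENDPOINT = "/api/auth/session"


def toy_origin(path: str, user: Optional[str]) -> str:
    """Toy origin that serves the caller's session for the endpoint or any path beneath it."""
    if path == SESSION_ENDPOINT or path.startswith(SESSION_ENDPOINT + "/"):
        if user is None:
            return json.dumps({})
        return json.dumps({"user": user, "accessToken": f"token-of-{user}"})
    return json.dumps({"error": "not found"})


class ToyEdgeCache:
    """Toy edge cache keyed on the path, caching only by file extension."""

    def __init__(self) -> None:
        self.store: Dict[str, str] = {}

    def fetch(self, path: str, user: Optional[str] = None) -> Tuple[str, str]:
        extension = PurePosixPath(path).suffix.lstrip(".").lower()
        if extension not in ASSUMED_CACHED_EXTENSIONS:
            return "DYNAMIC", toy_origin(path, user)
        if path in self.store:
            # The stored body is returned regardless of who is asking.
            return "HIT", self.store[path]
        body = toy_origin(path, user)
        self.store[path] = body
        return "MISS", body


edge = ToyEdgeCache()
crafted = SESSION_ENDPOINT + "/test.css"                 # step 1: crafted .css path
victim_status, _ = edge.fetch(crafted, user="victim")    # steps 3-4: victim visits, response cached
attacker_status, attacker_body = edge.fetch(crafted)     # step 5: unauthenticated attacker request
print(victim_status, attacker_status, attacker_body)
```

In this model the victim's visit is a MISS that populates the cache, and the attacker's unauthenticated request is a HIT that returns the victim's session body.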

Remediation:

1. Manually instruct the caching server not to cache the endpoint through a regex (this is the fix @OpenAI chose).

2. Don't return the sensitive JSON response unless you directly request the desired endpoint chat.openai.com/api/auth/sessi… != chat.openai.com/api/auth/sessi…
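
The second option can be sketched as an exact-match check at the origin. This is an illustration of the idea only, not OpenAI's implementation; `strict_session_handler` and its return shape are hypothetical:

```python
import json
from typing import Optional, Tuple

SESSION_ENDPOINT = "/api/auth/session"


def strict_session_handler(path: str, user: Optional[str]) -> Tuple[int, str]:
    """Serve session data only for the exact endpoint path."""
    # Any suffix or extra segment (e.g. /test.css) gets no session data at all.
    if path != SESSION_ENDPOINT:
        return 404, json.dumps({"error": "not found"})
    if user is None:
        return 200, json.dumps({})
    return 200, json.dumps({"user": user})


print(strict_session_handler("/api/auth/session", "alice"))
print(strict_session_handler("/api/auth/session/test.css", "alice"))
```

With this in place, a crafted path may still be cached by extension, but the cached body no longer contains anything sensitive.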

@OpenAI Vulnerability Disclosure Process from @OpenAI:

1. Email sent at 19:54 to disclosure@openai.com

2. First response 20:02

3. First fix attempt 20:40

4. Production fix 21:31

@OpenAI Those are fantastic standards, but it's still not a paid #BugBounty program. I can't emphasize enough the power of the crowd in protecting major global brands, especially one that innovates at such a pace.

I wrote ^ before finding the vulnerability.

https://twitter.com/naglinagli/status/1639255369527754752

@OpenAI That's a wrap from my side. Although I didn't get any financial compensation, it feels good to improve an innovative product's security posture.

A few notes:

1. Security is hard.

2. Adopt the power of the crowd.

3. Kudos on fast production fix.

That's all! 🫡

#BugBounty @sama @gdb

Update:

A couple of hours after my tweet, I was made aware by fellow researcher @_ayoubfathi_ and others that there were a number of bypasses to the regex-based fix implemented by @OpenAI (which didn't surprise me).

I notified the team ASAP once again and developers.cloudflare.com/cache/about/ca…… twitter.com/i/web/status/1…
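
The thread does not show the actual rule or the bypasses that were found, so the following is purely hypothetical: a made-up deny pattern (`HYPOTHETICAL_RULE`) that illustrates why path-matching regexes tend to be fragile as a cache fix.

```python
import re

# Hypothetical rule: skip caching for .css paths under the session endpoint.
# This is NOT the rule OpenAI deployed; it is invented for illustration.
HYPOTHETICAL_RULE = re.compile(r"^/api/auth/session/.*\.css$")


def rule_blocks_caching(path: str) -> bool:
    """Return True when the hypothetical rule would stop the path from being cached."""
    return HYPOTHETICAL_RULE.match(path) is not None


for path in (
    "/api/auth/session/test.css",  # the reported path: covered
    "/api/auth/session/test.js",   # a different cacheable extension: missed
    "/api/auth/session/test.CSS",  # a case variation: missed
):
    print(path, rule_blocks_caching(path))
```

A rule like this only covers the variants its author thought of, which is one reason the origin-side fix (never returning session data on non-exact paths) is the more robust option.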

I've asked #ChatGPT for a comment 📜

"Given the sensitive nature of the data processed by ChatGPT and the potential impact of a security incident, it may be prudent for @OpenAI to invest more in their security posture to better protect their users and their reputation."