Yann LeCun kicking off the debate with a bold prediction: nobody in their right mind will use autoregressive models 5 years from now #phildeeplearning

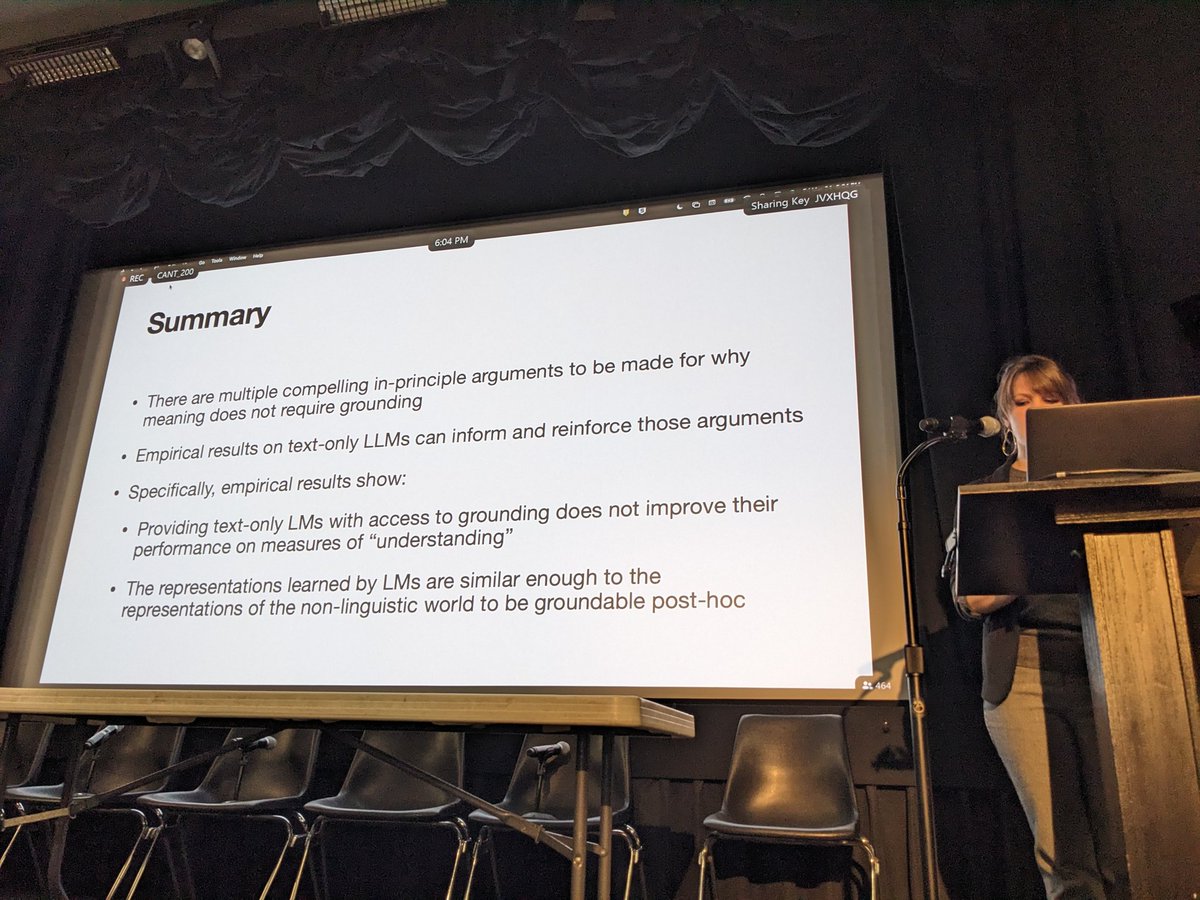

Ellie Pavlick @BrownCSDept leading the charge on the "No" side!

Ellie's conclusions #phildeeplearning

@LakeBrenden opts instead for a qualified "yes" on the general question, and a strong "yes" on the more specific question – Do LLMs need sensory grounding to understand words as people do?

Next up: @davidchalmers42 starting his talk on the "Yes" side with a bit of philosophical history about the grounding problem

Dave's answer: "No, but – it's complicated" #phildeeplearning

Our final speaker before the discussion: @glupyan starting with the results of his recent survey #phildeeplearning

Conclusion slide from @glupyan

And now for the discussion! Let's find out if out speakers can meet somewhere in the middle #phildeeplearning

Correction: that was of course the "No" side (as in: LLMs don't need sensory grounding for understanding)

• • •

Missing some Tweet in this thread? You can try to

force a refresh