Philosopher of Artificial Intelligence & Cog Science @Macquarie_Uni (incoming @UniofOxford)

@raphaelmilliere.com on 🦋

Blog: https://t.co/2hJjfSid4Z


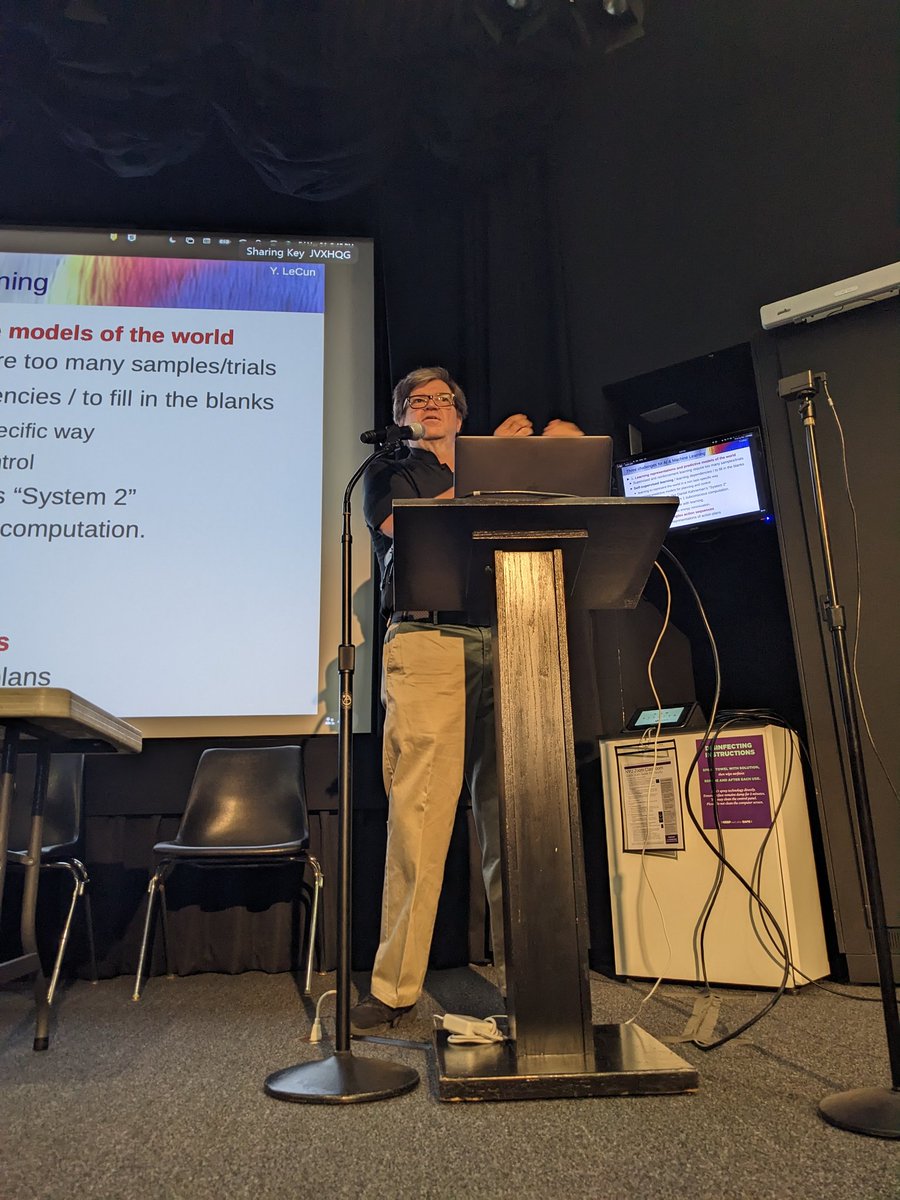

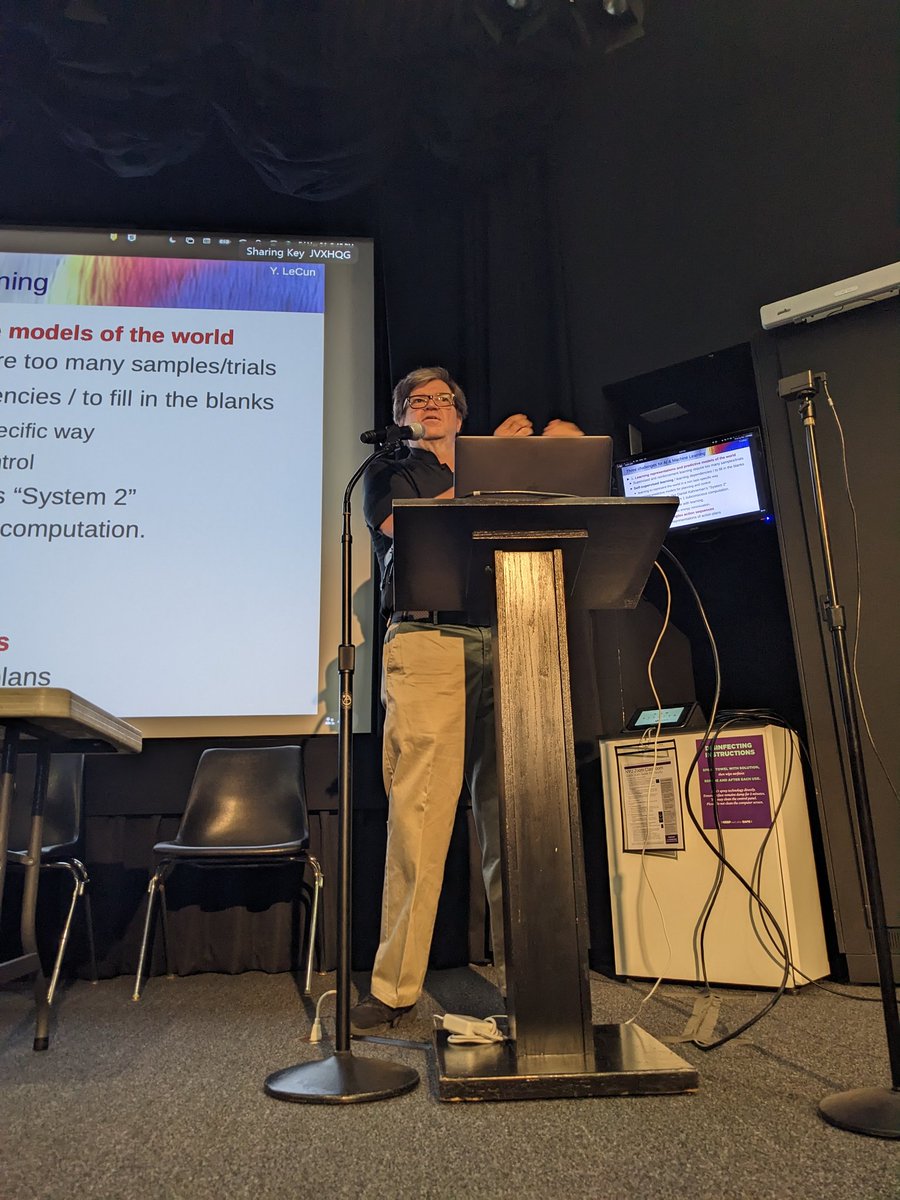

@ylecun closing his presentation with some conjectures #phildeeplearning


https://twitter.com/lmthang/status/1539664610596225024

@GoogleAI "A portrait photo of a kangaroo wearing an orange hoodie and blue sunglasses standing on the grass in front of the Sydney Opera House holding a sign on the chest that says Welcome Friends!"

https://twitter.com/Dr_Atoosa/status/1539136439979544577

As LLMs become easier and cheaper to use for companies and individuals, LLM-based (chat)bots will become commonplace online. I worry that this could eventually threaten to degrade human communication, by making people increasingly suspicious that they are talking to machines. 2/

https://twitter.com/giannis_daras/status/1531693093040230402

"Wa ch zod rea" yields specific dogs


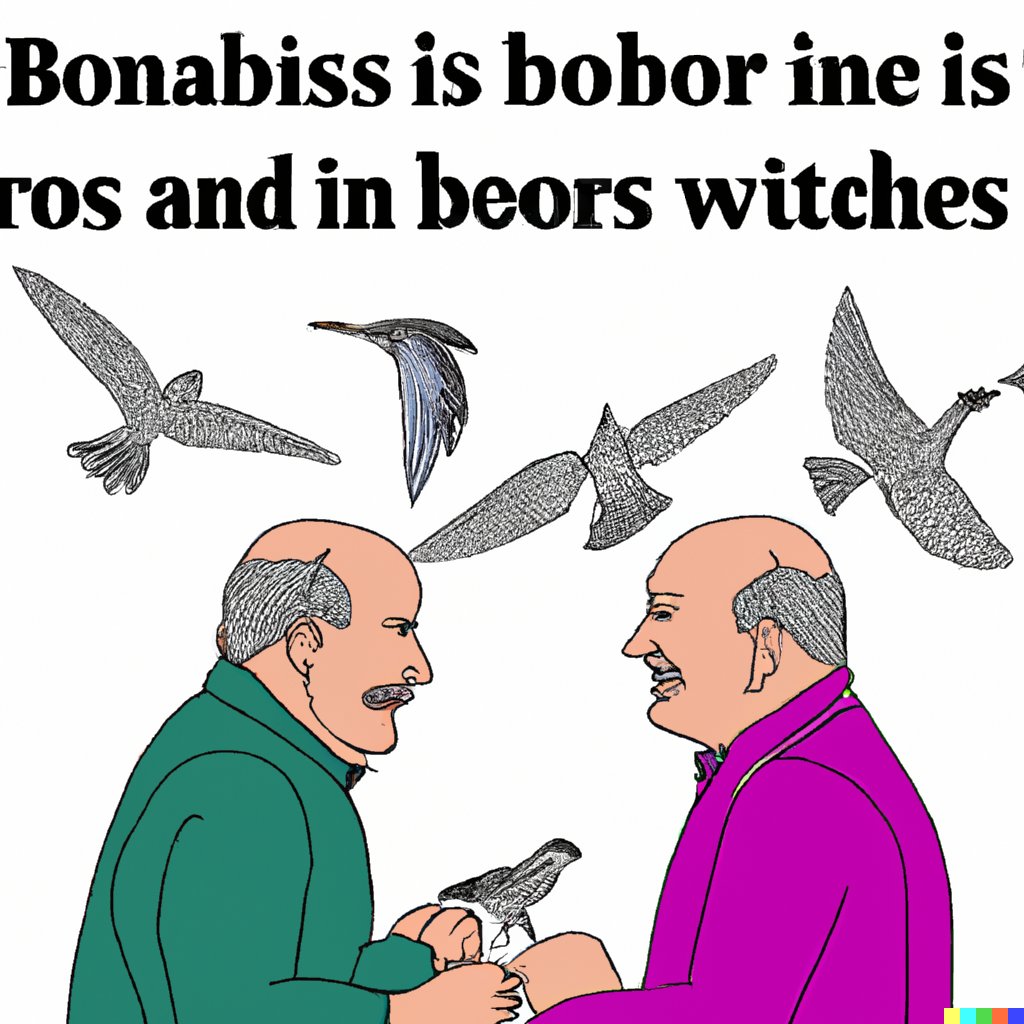

First, I prompted DALL-E with "Bonabiss is bobor ine is ros and in beors witches" a few times. Perplexing: something about bugs, fruits, and witches? The witches are hardly surprising given their presence in the prompt, but they seem out of place. 2/14


https://twitter.com/FelixHill84/status/1529361172922867713

I disagree that "it makes no sense to criticise DALL-E (or neural networks in general) for their poor composition", if that simply means pointing out current limitations. I also emphasized DALL-E's strengths, but it clearly struggles with some forms of compositionality. 2/6


A few weeks ago DALL-E 2 was unveiled. It exhibits both very impressive success cases and clear failure cases, especially when it comes to counting, relative position, and some forms of variable binding. Why?

https://twitter.com/raphaelmilliere/status/1514619721341145091

2/11

The prompt contained the essays themselves, plus a blurb explaining that GPT-3 had to respond to them. Full disclosure: I produced a few outputs and cherry-picked this one, although they were all interesting in their own way.
