Lots of people are talking about Eliezer Yudkowsky of the Machine Intelligence Research Institute right now. So I thought I'd put together a thread about Yudkowsky for the general public and journalists (such as those at @TIME). I hope this is useful. 🧵

First, I will use the "TESCREAL" acronym in what follows. If you'd like to know more about what that means, check out my thread below. It's a term that Timnit Gebru (@timnitGebru) and I came up with.

https://twitter.com/xriskology/status/1635313838508883968

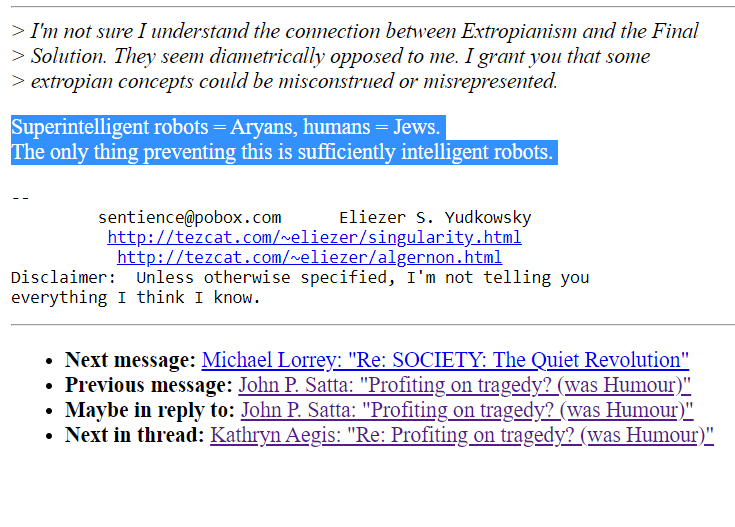

Yudkowsky has long been worried about artificial superintelligence (ASI). Sometimes he has expressed this in truly bizarre ways. Here's how he put it in a 1996 email to the Extropian mailing list: 😵‍💫

Yudkowsky's biggest claims to fame are (i) shaping current thinking among TESCREALists about the "existential risks" posed by ASI, and (ii) writing "Harry Potter and the Methods of Rationality," which The Guardian describes as "the #1 fan fiction series of all time."

Yudkowsky used to be a blogger at Overcoming Bias, alongside "America's creepiest economist," Robin Hanson. Why is Hanson creepy, according to Slate? Because of blog posts like (not kidding) "Gentle Silent R*pe" and another about "sex redistribution." slate.com/business/2018/…

Yudkowsky is also obsessed with his "intelligence." He has frequently boasted of having an IQ of 143, and believes you should be very impressed. In a 2001 essay about the technological Singularity, he refers to himself as a "genius."

Lots of Yudkowsky's followers in the Rationalist community -- I would say cult -- agree. Here's a meme they often share amongst themselves (the original image doesn't include Yudkowsky's name on the far right):

Yudkowsky has explicitly worried about "dysgenic" pressures (IQs dropping because, say, less "intelligent" people are breeding too much). Below is a rather disturbing "fictional" piece he wrote about how to get a eugenics program off the ground: lesswrong.com/posts/MdbJXRof…

You can read more about TESCREALism and eugenics (racism, xenophobia, sexism, ableism, classism, etc.) in my article for Truthdig here: truthdig.com/dig/nick-bostr…

Yudkowsky is a Rationalist, transhumanist, longtermist, and singularitarian (in the sense of anticipating an intelligence explosion via recursive self-improvement). As noted, he participated in Extropianism. I don't know whether he identifies as an Effective Altruist, but he's right there at the heart of TESCREALism.

Humorously, he once predicted that we'd have molecular nanotechnology by 2010, and that the Singularity would happen in 2021. He says in his recent interview that he grew up believing he would become immortal: youtube.com/clip/UgkxflODu…

Yudkowsky more or less founded Rationalism, which grew up around the LessWrong community blog that he created. As a former prominent member of the Rationalist community recently told me, it's become a "full grown apocalypse cult" based on fears that ASI will destroy everything.

Consequently, some have started to suggest that violence directed at AI researchers may be necessary to prevent the AI apocalypse. Consider statements in the meeting minutes of a recent "AI safety" workshop, which were leaked to me. Here's what it included:

Ted Kaczynski is, of course, the Unabomber, who sent bombs in the mail from his cabin near Lincoln, Montana. Here's another passage from the same document:

Is Yudkowsky fueling this? Yes. In the tweets below, he suggests releasing some kind of nanobots into AI laboratories to destroy "large GPU clusters." (Side note: fears of nanobots are why a terrorist group called "Individualists Tending to the Wild" has killed academics.)

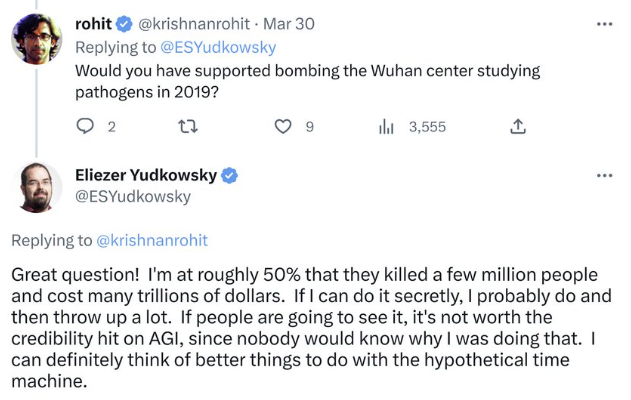

Here, Yudkowsky is explicit that bombing AI companies might be justifiable, if no one were to "see it."

In his recent article for @TIME, Yudkowsky endorses military strikes to take out datacenters. Astonishing that TIME published this.

Why is risking nuclear war worth it? Because Yudkowsky believes that nuclear war would probably be survivable, whereas an ASI takeover would result in TOTAL human annihilation. This is where the bizarre utopianism of the TESCREAL ideologies enters the picture. What these people

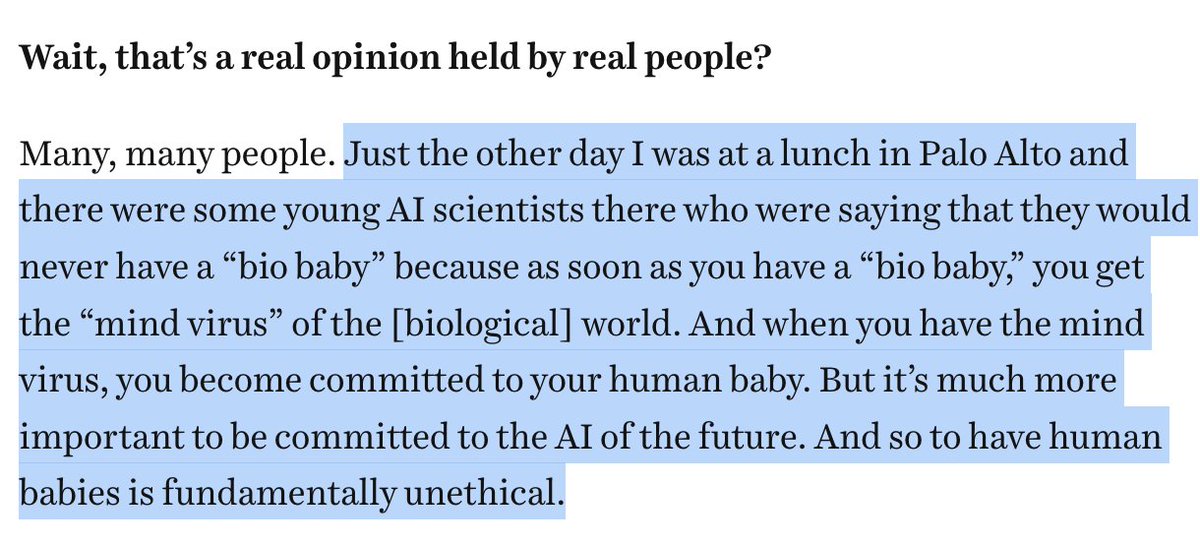

want more than anything, the vision that drives them, is a techno-utopian world in which we colonize space, plunger the universe for resources, create a superior new race of "posthumans," build planet-sized computers on which to run huge virtual-reality worlds full of

trillions and trillions of people, and ultimately to "maximize" what they consider to be "value." Here is Yudkowsky gesturing at this very idea:

Yudkowsky's claims about ASI killing everyone are highly speculative. Paul Christiano, for example, repeatedly notes in an article that Yudkowsky is not well-informed about some of the very things he likes to pound his fist and pontificate about. 🫣

However, this hasn't stopped Yudkowsky from screaming that ASI is about to kill everyone. In this clip, he's asked what he'd tell young people, and his answer is: "Don't expect it to be a long life." Holy hell.

https://twitter.com/xriskology/status/1641536783270793216

Consistent with his TESCREALism, Yudkowsky suggests that the way to avoid an ASI apocalypse is to "shut down the GPU clusters" and then technologically reengineer the human organism to be "smarter."

https://twitter.com/xriskology/status/1641536785695080448

There's a lot more to say, but that's enough for now. I'll leave you with this little gem from last year, which I suspect accurately portrays his current thinking: lesswrong.com/posts/j9Q8bRmw…

Here are a few things I forgot to mention, btw. First, read this article, if you can stomach it. It's shocking: fredwynne.medium.com/an-open-letter…

Second, more of Yudkowsky! Remember, as longtermist colleagues like William MacAskill and Toby Ord say, respect "common-sense morality"!
