I used @predictionguard (paired with @LangChainAI)to evaluate 27 Large Language Models (LLMs) for text generation and automatically select a best/fallback model for use in some generative #AI applications.

A thread 🧵

A thread 🧵

Some general thoughts:

-> No surprise in the top performer (@OpenAI GPT-3.5), but...

-> 3 of @CohereAI models show up, including the runner up.

-> Surprised to see XLNet, a model that I can pull down from @huggingface, performs better than some of the popular #LLMs

-> No surprise in the top performer (@OpenAI GPT-3.5), but...

-> 3 of @CohereAI models show up, including the runner up.

-> Surprised to see XLNet, a model that I can pull down from @huggingface, performs better than some of the popular #LLMs

Regarding the evaluation, the process works as follows:

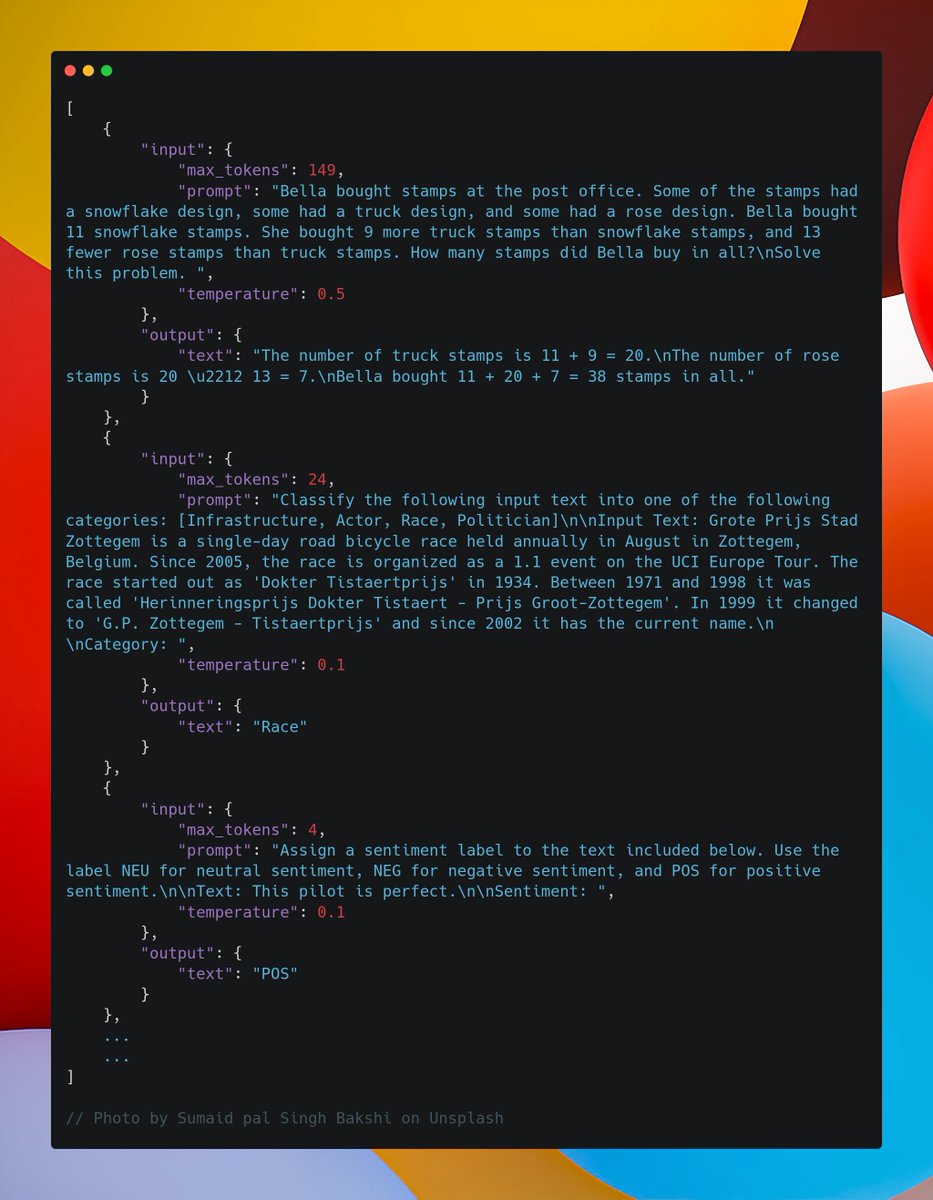

1. You upload some examples of the model input/ output behavior that you expect.

2. @predictionguard concurrently runs these examples through a bunch of SOTA models on the backend

1. You upload some examples of the model input/ output behavior that you expect.

2. @predictionguard concurrently runs these examples through a bunch of SOTA models on the backend

3. Results are compared to the expected output, and the system determines "failures" (in this case, if the semantic similarity, as measured via sentence transformer embeddings is less than a threshold)

4. The best models are made available via a serverless endpoints

4. The best models are made available via a serverless endpoints

For this test, I created 75 example prompts. I leveraged @LangChainAI for prompt templates covering few shot cls, zero shot cls, instructions, math, and chat. @predictionguard ran these against 27 SOTA LLMs in less than 5 minutes.

More invites to the @predictionguard will go out this week. Sign up for the wait-list here: predictionguard.com

(Note, I only evaluated reasonably accessible models. That is, models that are available without a special request, so no GPT-4 or LLaMA for now, or available to be run on reasonable hardware in the cloud)

• • •

Missing some Tweet in this thread? You can try to

force a refresh

Read on Twitter

Read on Twitter