On this week’s episode of “WTF is TESCREAL?,” I’d like to tell you a story with a rather poetic narrative arc. It begins and ends with Ted Kaczynski—yes, THAT GUY, the Unabomber!—but its main characters are Eliezer Yudkowsky and the “AI safety” community.

A long but fun🧵.

In 1995, Ted Kaczynski published the “Unabomber manifesto” in WaPo, which ultimately led to his arrest. In it, he argued that advanced technologies are profoundly compromising that most cherished of human values: our freedom. washingtonpost.com/wp-srv/nationa…

(He also whined A LOT about “political correctness,” which dovetails in an amusing way with Elon Musk’s claim that what’s needed to counter some of the negative effects of AI is “anti-woke” AI. Many lolz.)

Now, someone named Bill Joy read Kaczynski's article and

was moved by its neo-Luddite arguments. As a 2000 WaPo article noted, “Joy says he finds himself essentially agreeing, to his horror, with a core argument of the Unabomber—that advanced technology poses a threat to the human species.”

This is noteworthy because

Joy wasn’t some anti-technology anarcho-primitivist “Back to the Pleistocene” type. He cofounded Sun Microsystems and is a tech billionaire! He just became super-worried that “GNR” (genetics, nanotech, & robotics, including AI) technologies could pose unprecedented threats to our survival.

Indeed, not long after, Joy also perused a pre-publication draft of Ray Kurzweil’s “The Age of Spiritual Machines” (1999). Kurzweil is a TESCREAList who popularized singularitarianism—the “S” in “TESCREAL”—and envisions a future in which accelerating technological development

will enable us to merge with machines and become superintelligent immortals: the Singularity.

To simplify a lot: Kurzweil argued that the development of world-changing advanced tech is *inevitable*, and that the outcome would either be ANNIHILATION or UTOPIA. In contrast,

Kaczynski argued that the creation of advanced tech would result in either ANNIHILATION or DYSTOPIA, and that its development is *not* inevitable, because people could choose to revolt against the megatechnics of industrial society instead.

On April 1, 2000, in Wired magazine (of all dates and places!), Bill Joy published a hugely influential article titled “Why the Future Doesn’t Need Us.” Freaked out by Kurzweil’s accelerationism, and inspired by Kaczynski’s warnings that advanced tech = doom, Joy called for

a complete and indefinite halt to entire fields of emerging science/technology. There is simply no safe way forward, and hence we must *ban* research on whole domains of genetic engineering, nanotechnology, and artificial intelligence.

Transhumanists, Extropians, and longtermists (although the last term didn’t exist at the time) more or less mocked this proposal. “It is totally impracticable—a complete nonstarter!” they exclaimed, based on a techno-deterministic worldview according to which the freight train

of “progress” simply cannot be stopped. At best, they argued, it could be *slowed down* a little bit here and there, an idea that Nick Bostrom popularized under the heading of “differential technological development.”

en.wikipedia.org/wiki/Different…

*Far more importantly*, TESCREALists argued that technoscientific “progress” SHOULD NOT be stopped. Why? Because GNR tech has salvific powers! It is our vehicle from the misery of the human condition today to a techno-utopian paradise of immortality, superintelligence, and

“surpassing bliss and delight,” to quote Bostrom’s “Letter from Utopia.” It would be an absolute moral catastrophe to relinquish, as Joy proposes, entire fields of advanced science and technology. Joy is, essentially, arguing that we should forever remain imprisoned

in the meat-suits of biology. He is denying us the opportunity to “transcend” our human limitations. How dare he!

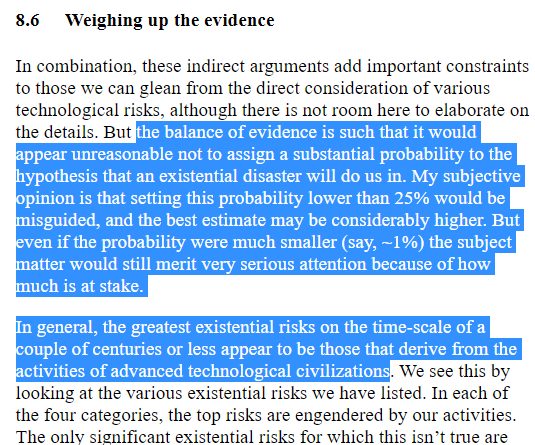

But here’s the catch: these early TESCREALists almost unanimously *agreed* with Joy about the profound dangers of GNR technologies. Indeed, Bostrom argued that the probability of an existential catastrophe (likely from a GNR disaster) is *at least* 25%,

while Kurzweil wrote that “a planet approaching its pivotal century of computational growth—as the Earth is today—has a better than even chance of making it through. But then I have always been accused of being an optimist.”

So, everyone was on the same page about the twenty-first century being the most dangerous in humanity’s history *because of these GNR technologies*.

The key difference between Joy and the early TESCREALists was how they *responded* to this predicament. Joy opted for broad relinquishment: “BAN THESE TECHNOLOGIES, because they’re too dangerous.” TESCREALists said:

“NO NO NO, we NEED these technologies to create Utopia!! Rather, what we should do is found an entirely NEW FIELD of empirical and philosophical study to (a) understand the attendant risks of GNR tech, and (b) devise strategies to neutralize these risks.

That way we can keep our technological cake and eat it, too!!”

This is how the field of Existential Risk Studies was born: it was the TESCREALists’ response to the unprecedented perils of GNR tech outlined most eloquently by Bill Joy in 2000.

And so we arrive at the present. OpenAI has initiated an “AI race” among Big Tech companies, and the capability gains between, say, GPT-2 and GPT-4 have really freaked out a whole bunch of TESCREALists. Suddenly, they're starting to worry that the technological cake might turn

around and eat THEM!

The result has been a full-circle loop all the way back to Joy’s position in 2000: “Stop! Too dangerous!! Hit the emergency brakes!!” A weak version of this is expressed in the recent “open letter” from the Future of Life Institute, which called for

“all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

futureoflife.org/open-letter/pa…

This is “weak” because, although the phrase “all AI labs” is very strong indeed, the “pause” is not indefinite—only 6 months. After all, we should WANT advanced AI systems—AGI—to be developed eventually because they’re our ticket to paradise! “Slow down but don’t stop,” is what

FLI is saying.

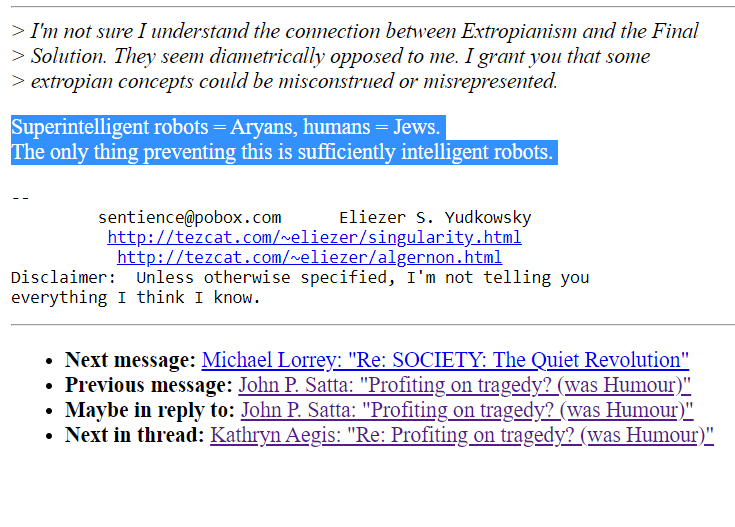

However, there’s a growing contingent of TESCREAL “doomers,” led by Yudkowsky (who participated in the TESCREAL movement of the 1990s and early 2000s), that has come *completely* full circle, back to the position

advocated by Joy and inspired by Kaczynski's neo-Luddite arguments. For example, in a TIME magazine op-ed, Yudkowsky writes that *everything* needs to be “shut down” immediately, and he endorses military strikes to take out datacenters!

time.com/6266923/ai-eli…

Yudkowsky even suggests that we should risk thermonuclear war to prevent advanced AI from being created!!

On Twitter, he’s advocated for the destruction of AI labs (targeting property, not people), and claims that nuclear war would be acceptable to avoid an AI apocalypse so long as there were enough survivors to repopulate the planet.

This is very, very reminiscent of Kaczynski's views (!), although *so far* no one in the TESCREAL doomer camp has ACTUALLY sent bombs in the mail or tried to assassinate AI researchers. Buuuuuuut ...

don’t be too comforted by that fact: in meeting minutes from an “AI safety” workshop in Berkeley—where Yudkowsky’s “Machine Intelligence Research Institute” is based—some participants literally suggested that one way to prevent doom-from-AI is to (see below)

“start building bombs from your cabin in Montana and mail them to DeepMind and OpenAI.” Montana, of course, is where Kaczynski lived during his campaign of domestic terrorism. Another bullet-point simply reads: “Solution: be ted kaczynski.”

So there you have it. The story began with Kaczynski in the mid-1990s, and has come full-circle back to Bill Joy’s Kaczynski-inspired proposal for how best to RESPOND to the dangers of advanced tech like artificial intelligence. Existential Risk Studies? That's NOT ENOUGH. We

need to shut everything down now. There's even some chatter that perhaps Kaczynski’s tactics might be necessary to avoid DOOM.

It’s poetic because TESCREALists laughed at Joy and denigrated his idea, yet some prominent TESCREALists are now advocating for the very same idea. 😬