Large Language Models (LLMs) often struggle with tasks that require multi-step reasoning. However, we can drastically improve their reasoning performance using simple techniques that require no fine-tuning or task-specific verifiers. Here’s how…🧵[1/7]

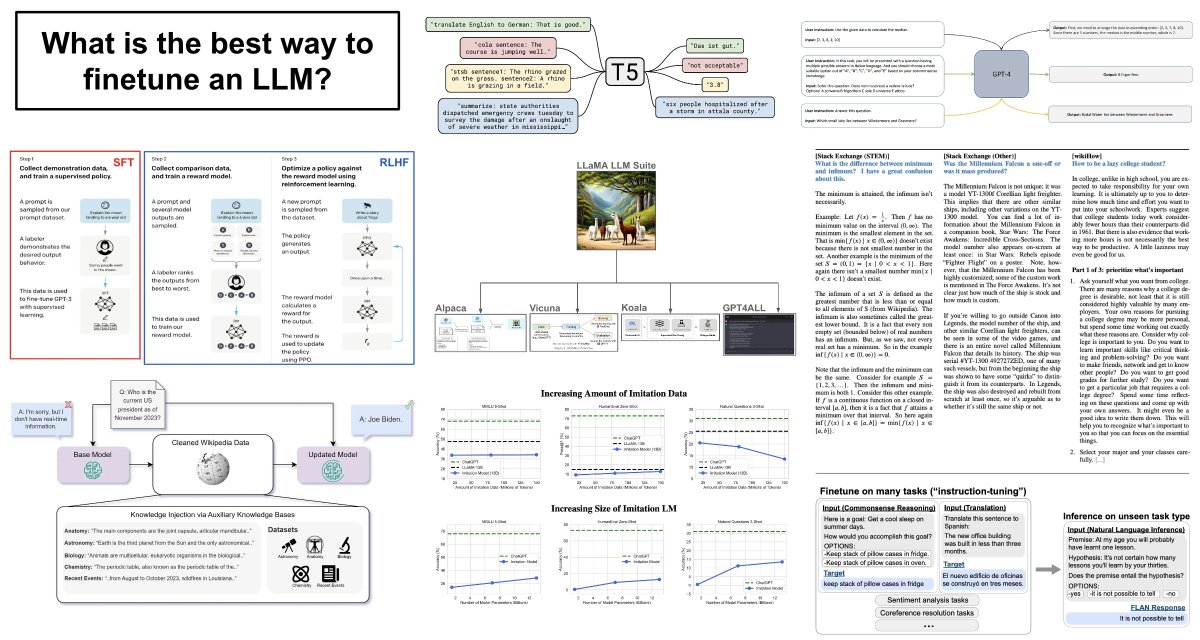

The technique is called chain-of-thought (CoT) prompting. It improves the reasoning abilities of LLMs using few-shot learning. In particular, CoT prompting inserts several examples of “chains of thought” for solving a reasoning problem into the LLM’s prompt. [2/7]

Here, a chain of thought is defined as “a coherent series of intermediate reasoning steps that lead to the final answer for a problem”. A CoT mimics how humans solve reasoning problems: by breaking the problem down into intermediate steps that are easier to solve. [3/7]
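
To make the definition concrete, here is a minimal sketch of one chain of thought written out as data. The word problem and the variable names are illustrative assumptions, not something taken from the thread.

```python
# Illustrative word problem (an assumption for this sketch, not from the thread).
question = (
    "Roger has 5 tennis balls. He buys 2 cans of tennis balls. "
    "Each can has 3 balls. How many tennis balls does he have now?"
)

# Each intermediate step pairs a sentence of reasoning with the value it produces,
# so every step is small enough to check on its own.
steps = [
    ("Roger starts with 5 balls.", 5),
    ("2 cans of 3 balls each is 2 * 3 = 6 balls.", 2 * 3),
    ("5 + 6 = 11 balls in total.", 5 + 2 * 3),
]

# The chain of thought is the steps read in order; the answer is the last value.
chain_of_thought = " ".join(text for text, _ in steps)
final_answer = steps[-1][1]

print(chain_of_thought)
print("Final answer:", final_answer)
```

The point of the sketch is only that the final answer falls out of several easy steps rather than one hard jump.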

Prior techniques teach LLMs how to generate coherent chains of thought via fine-tuning. Although this improves reasoning performance, such an approach requires an annotated dataset of reasoning problems with an associated CoT, which is burdensome and expensive to create. [4/7]

CoT prompting combines the idea of using chains of thought to improve reasoning performance with the few-shot learning abilities of LLMs. We can teach LLMs to generate a coherent CoT with their solution by just providing exemplars as part of their prompt. [5/7]
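
As a rough sketch of what "providing exemplars as part of the prompt" can look like, the snippet below assembles a few-shot CoT prompt as plain text. The `Exemplar` class, the `build_cot_prompt` helper, the `Q:`/`A:` layout, and the example questions are all hypothetical choices made for illustration; the resulting string would be passed to whichever LLM you are using.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One solved problem: question, written-out reasoning, final answer."""
    question: str
    chain_of_thought: str
    answer: str


def build_cot_prompt(exemplars, new_question):
    """Concatenate solved exemplars, then append the unsolved question.

    The "Q:/A:" layout is an assumed format, not a required one.
    """
    blocks = []
    for ex in exemplars:
        # Each exemplar shows the reasoning *before* the final answer.
        blocks.append(
            f"Q: {ex.question}\n"
            f"A: {ex.chain_of_thought} The answer is {ex.answer}."
        )
    # Leave the last answer open so the model continues with its own reasoning.
    blocks.append(f"Q: {new_question}\nA:")
    return "\n\n".join(blocks)


# A single illustrative exemplar; in practice a handful are used.
exemplars = [
    Exemplar(
        question="Roger has 5 tennis balls. He buys 2 cans of 3 balls each. "
                 "How many tennis balls does he have now?",
        chain_of_thought="Roger starts with 5 balls. 2 cans of 3 balls is 6 balls. "
                         "5 + 6 = 11.",
        answer="11",
    )
]

prompt = build_cot_prompt(
    exemplars,
    "A cafeteria had 23 apples. It used 20 and bought 6 more. "
    "How many apples does it have?",
)
print(prompt)
```

No model weights are touched here: the only thing that changes is the text placed in front of the new question.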

Such an approach massively improves LLM performance on tasks like arithmetic, commonsense and symbolic reasoning. Plus, it requires minimal data to be curated (i.e., just a few examples for the prompt) and performs no fine-tuning on the LLM. [6/7]

In short, CoT prompting is a simple technique that can be applied to any pre-trained LLM checkpoint to improve reasoning performance. See the overview below for more details.

🔗: bit.ly/42067HU

[7/7]
