🤯 Palantir demo’ed a battlefield assistant calling in drones and quarterbacking a battle in Eastern Europe.

I’m sure @ESYudkowsky is going to be pleased at how fast this is moving.

🧵 👇

1) it’s a chatbot!

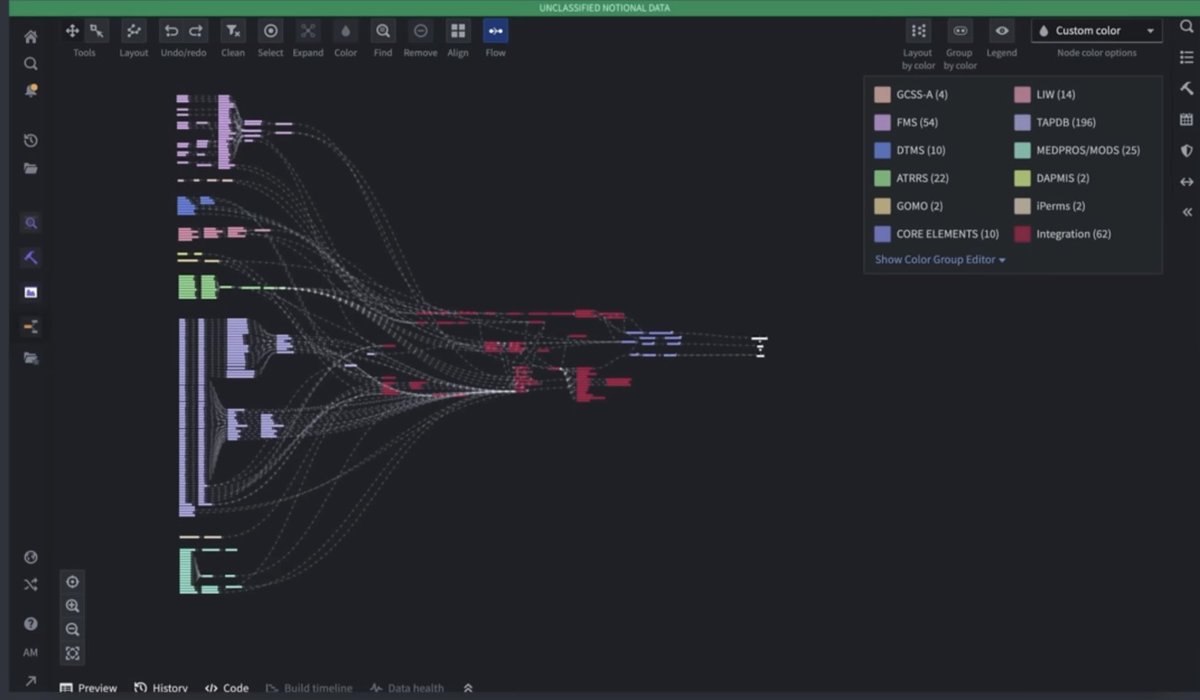

But with federated data retrieval based on intelligence classification access rules

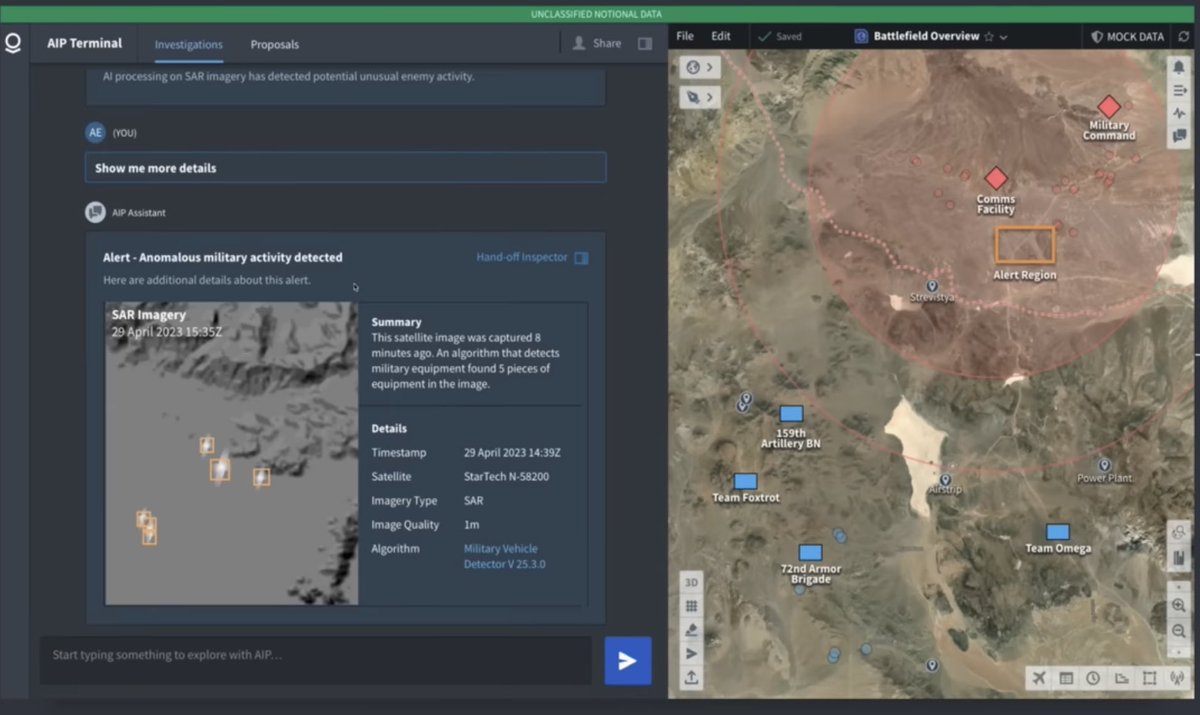

2) notifications 📣 come in to the chatbot (this is the 🤖 bot prompting the human). First time I’ve seen this in any demo

Reminds me of Command and Conquer: “Alert: Anomalous military activity detected”

3) Dynamic content UI - maps, graphics, form fields... the chatbot generates whatever it needs in the chat window

“Show me more”

11) amazing stuff. Palantir really providing value to the DoD.

The model they used was a fine-tuned GPT-NeoX-20B, though they also have Flan-T5 XL and Dolly-v2-12b.

I’m sure @AiEleuther will be thrilled 😁

Best integrated AI I’ve seen. 2 years ahead.
