The curse of dimensionality refers to the phenomenon where the performance of ML algorithms deteriorates as the number of dimensions (features) of the input data increases.

This happens because the volume of the space grows exponentially with the number of dimensions, so a fixed amount of data becomes sparse & the distances between data points increase.
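To make the sparsity argument concrete, here is a small pure-Python sketch. It assumes points drawn uniformly from the unit hypercube [0, 1]^d, and the function names (`cells_needed`, `mean_pairwise_distance`, `relative_contrast`) are illustrative, not from the thread. It counts how many grid cells are needed to cover the space at a fixed resolution, then measures how the average pairwise distance grows and how the gap between a point's nearest and farthest neighbour shrinks (relative to the nearest distance) as d increases.

```python
import math
import random


def cells_needed(dim: int, bins_per_axis: int = 10) -> int:
    """Grid cells needed to cover [0, 1]^dim with bins_per_axis bins per axis.

    Keeping even one sample per cell therefore needs exponentially many samples.
    """
    return bins_per_axis ** dim


def sample_points(dim: int, n_points: int, rng: random.Random) -> list[list[float]]:
    """Draw n_points uniformly from the unit hypercube [0, 1]^dim (an assumption)."""
    return [[rng.random() for _ in range(dim)] for _ in range(n_points)]


def mean_pairwise_distance(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Average Euclidean distance over all pairs of sampled points."""
    pts = sample_points(dim, n_points, random.Random(seed))
    dists = [math.dist(pts[i], pts[j])
             for i in range(n_points) for j in range(i + 1, n_points)]
    return sum(dists) / len(dists)


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """(farthest - nearest) / nearest distance from one query point to the others."""
    rng = random.Random(seed)
    query = sample_points(dim, 1, rng)[0]
    dists = [math.dist(query, p) for p in sample_points(dim, n_points, rng)]
    return (max(dists) - min(dists)) / min(dists)


if __name__ == "__main__":
    for dim in (2, 10, 100, 1000):
        # log10 of the cell count, since 10**1000 is too large to format as a float
        print(f"d={dim:>4}  log10(cells)={math.log10(cells_needed(dim)):.0f}  "
              f"mean_dist={mean_pairwise_distance(dim):.2f}  "
              f"contrast={relative_contrast(dim):.2f}")
```

Under this uniform-data assumption the mean distance grows roughly like sqrt(d/6), while the relative contrast falls toward zero: in high dimensions every point looks about equally far away, which is one reason distance-based methods such as k-NN degrade.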

Many ML algorithms struggle to find meaningful patterns & relationships in high-dimensional data and may suffer from overfitting or poor generalization. This can lead to longer training times, increased memory requirements, and reduced prediction accuracy & efficiency.

Why is Dimensionality Reduction necessary?

1⃣ Helps avoid overfitting

2⃣ Easier, faster computation

3⃣ Improved model performance

4⃣ Lower-dimensional data requires less storage space.

5⃣ Lower-dimensional data can be used with algorithms that are unsuitable for high-dimensional data.

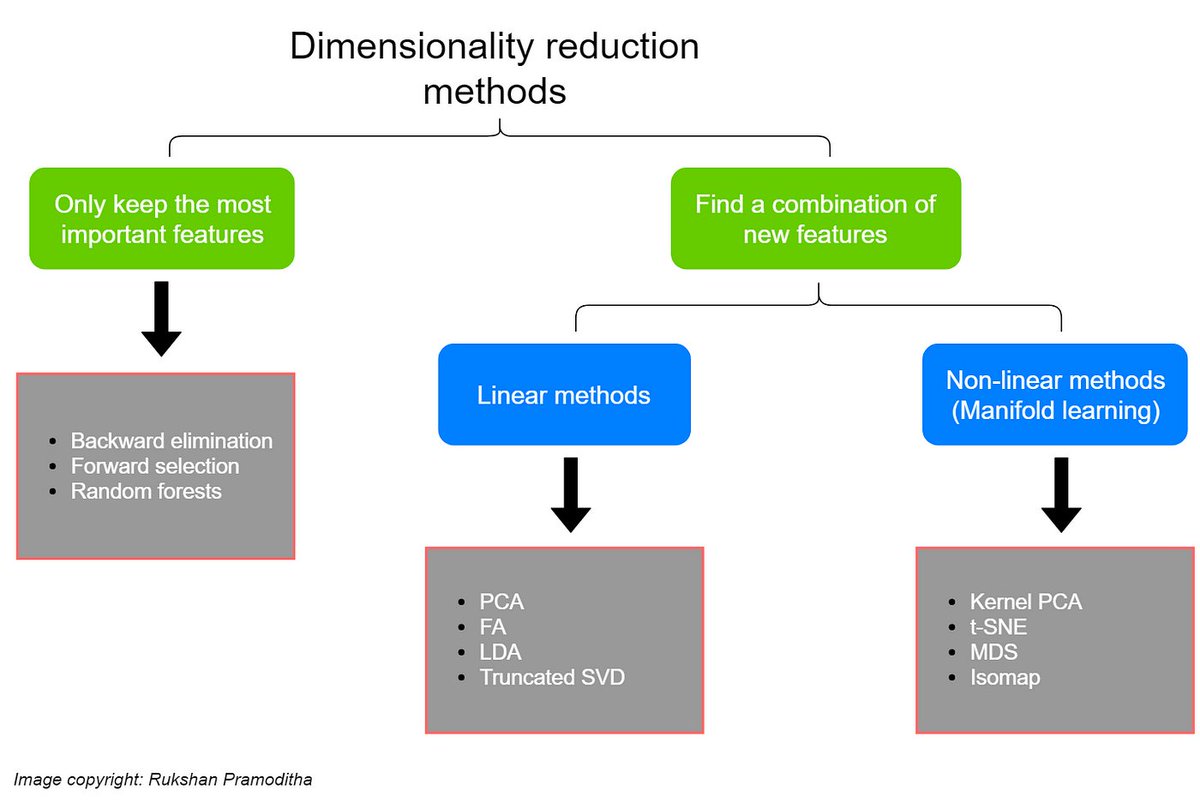

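To mitigate the curse of dimensionality, techniques such as feature selection, dimensionality reduction & regularization can be used. Feature selection and dimensionality reduction reduce the number of features (dimensions) in the data, while regularization penalizes model complexity so the model is less likely to overfit the many available features.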
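As one concrete instance of the dimensionality-reduction route, here is a minimal principal component analysis (PCA) sketch. The thread does not name a specific technique; PCA is simply a common choice. It is written in pure Python (no NumPy) so it runs anywhere, finds only the first principal component via power iteration on the covariance matrix, and uses hypothetical helper names (`pca_first_component`, `project`) and made-up example data.

```python
import math


def pca_first_component(rows: list[list[float]],
                        n_iter: int = 100) -> tuple[list[float], list[float]]:
    """Return (mean, direction): the data mean and the unit-length first principal axis.

    Power iteration on the sample covariance matrix converges to the eigenvector
    with the largest eigenvalue, i.e. the direction of maximum variance.
    """
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    # Sample covariance matrix, d x d.
    cov = [[sum(c[i] * c[k] for c in centered) / (n - 1) for k in range(d)]
           for i in range(d)]
    # Unit-length starting guess, assumed not orthogonal to the top eigenvector.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(n_iter):
        w = [sum(cov[i][k] * v[k] for k in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # zero-variance data: no direction to refine
            break
        v = [x / norm for x in w]
    return mean, v


def project(rows: list[list[float]], mean: list[float],
            direction: list[float]) -> list[float]:
    """Reduce each d-dimensional row to one coordinate along `direction`."""
    return [sum((r[j] - mean[j]) * direction[j] for j in range(len(r))) for r in rows]


if __name__ == "__main__":
    # Hypothetical 2-D data lying close to the line y = 2x.
    data = [[float(x), 2.0 * x + (0.1 if x % 2 else -0.1)] for x in range(10)]
    mean, axis = pca_first_component(data)
    print("first principal axis:", [round(a, 3) for a in axis])
    print("1-D projections:", [round(p, 2) for p in project(data, mean, axis)])
```

Here the recovered axis should come out close to (1, 2)/√5, so two features collapse into one while keeping almost all of the variance. For real workloads, scikit-learn's `sklearn.decomposition.PCA` (e.g. `PCA(n_components=k).fit_transform(X)`) handles multiple components and the numerical details; this version only illustrates the idea.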

shiksha.com/online-courses…

🙏 If this thread was helpful to you:

1⃣ Follow me @Sachintukumar for daily content

2⃣ Connect with me on LinkedIn

linkedin.com/in/sachintukum…

3⃣ RT the tweet below to share it with your friends
