It is widely thought that neural networks generalize because of the implicit regularization of gradient descent. Today at #ICLR2023 we show new evidence to the contrary: we train with gradient-free optimizers and observe generalization competitive with SGD.

openreview.net/forum?id=QC10R…

An alternative theory of generalization is the "volume hypothesis": good minima are flat and occupy more volume than bad minima. For this reason, optimizers are more likely to land in the large, wide basins around good minima and less likely to land in small, sharp bad minima.

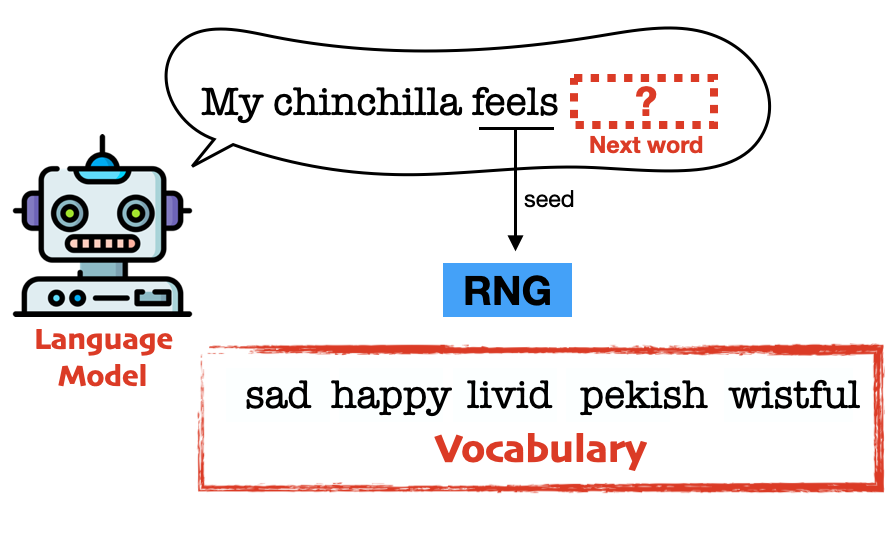

One of the optimizers we test is a “guess and check” (GAC) optimizer that samples parameters uniformly from a hypercube and checks whether the loss is low. If so, optimization terminates. If not, it throws away the parameters and samples again until it finds parameters with low loss.
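To make the loop concrete, here is a minimal Python sketch of guess and check. It assumes a toy, linearly separable 2-D dataset, a linear classifier, and a 0-1 training loss. The names `guess_and_check`, `zero_one_loss`, `DATA`, and the `max_tries` cap are hypothetical illustrations, not the paper's code or experimental setup. The cap only keeps the sketch from looping forever; the optimizer described above simply samples until it succeeds.

```python
import random

def guess_and_check(loss_fn, dim, threshold, bound=1.0, max_tries=100_000, seed=0):
    """Sample parameters uniformly from the hypercube [-bound, bound]^dim until
    loss_fn(params) <= threshold. Returns (params, tries), or (None, max_tries)
    if no acceptable sample was found (the cap is an assumption of this sketch)."""
    rng = random.Random(seed)
    for tries in range(1, max_tries + 1):
        params = [rng.uniform(-bound, bound) for _ in range(dim)]
        if loss_fn(params) <= threshold:
            return params, tries  # low loss: stop optimizing
        # otherwise discard these parameters and sample again
    return None, max_tries

# Hypothetical toy dataset: label is +1 when x0 + x1 > 0, else -1.
DATA = [((1.0, 0.5), 1), ((0.3, 0.9), 1), ((-0.8, -0.2), -1), ((-0.4, -0.9), -1)]

def zero_one_loss(params):
    """Fraction of DATA misclassified by the linear rule sign(w0*x0 + w1*x1 + b)."""
    w0, w1, b = params
    errors = sum(1 for (x0, x1), y in DATA if y * (w0 * x0 + w1 * x1 + b) <= 0)
    return errors / len(DATA)

params, tries = guess_and_check(zero_one_loss, dim=3, threshold=0.0)
print(params, tries)
```

No gradient is ever computed. The only feedback the optimizer uses is whether a freshly drawn sample clears the loss threshold.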

The GAC optimizer is more likely to land in a big basin than in a small basin. In fact, the probability of landing in a region is *exactly* proportional to its volume. The success of GAC shows that volume alone can explain generalization without appealing to optimizer dynamics.
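The volume argument can be checked numerically in a toy setting. GAC accepts the first uniform sample that lands in the low-loss set, so the accepted point is uniform over that set. The chance it falls in a given basin is therefore that basin's share of the low-loss volume. The sketch below assumes a hypothetical 1-D landscape on [-1, 1] with a wide zero-loss basin of width 0.6 and a narrow one of width 0.05. The basins, loss, and helper names are illustrative assumptions, not the paper's experiments.

```python
import random

# Hypothetical 1-D landscape on [-1, 1] with two zero-loss basins.
WIDE = (0.2, 0.8)        # width 0.6
NARROW = (-0.55, -0.50)  # width 0.05

def loss(theta):
    """0 inside either basin, 1 elsewhere (a deliberately crude landscape)."""
    in_basin = WIDE[0] <= theta <= WIDE[1] or NARROW[0] <= theta <= NARROW[1]
    return 0.0 if in_basin else 1.0

def gac_1d(rng, threshold=0.0):
    """Guess and check: resample uniformly from [-1, 1] until the loss is low."""
    while True:
        theta = rng.uniform(-1.0, 1.0)
        if loss(theta) <= threshold:
            return theta

def wide_basin_frequency(runs=20_000, seed=0):
    """Fraction of independent GAC runs that terminate in the wide basin."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(runs) if WIDE[0] <= gac_1d(rng) <= WIDE[1])
    return hits / runs

# Volume share of the wide basin within the whole low-loss set.
width_wide = WIDE[1] - WIDE[0]
width_narrow = NARROW[1] - NARROW[0]
expected = width_wide / (width_wide + width_narrow)
print(round(expected, 3), round(wide_basin_frequency(), 3))
```

The empirical frequency should track the volume ratio (about 0.92 here) up to Monte Carlo noise. No optimizer dynamics are involved beyond uniform sampling.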

One weakness of our experiment is that these zeroth-order optimizers only work with small datasets. Still, I hope this work furthers the notion that loss-landscape geometry plays a role in generalization.

Come see our poster (#87) Tuesday at 11:30am, or our talk (track 4, 10:50am).

PS: Other evidence that SGD regularization is not needed includes the observation that generalization happens with non-stochastic gradient descent (openreview.net/forum?id=ZBESe…), and that bad minima have very small volumes (arxiv.org/abs/1906.03291).

Thanks to @PingyehChiang, @NiRenkun, David Miller, @arpitbansal297, @jonasgeiping, and @micahgoldblum.