Professor at UMD. AI security & privacy, algorithmic bias, foundations of ML.

Follow me for commentary on state-of-the-art AI.

Huginn was built for reasoning from the ground up, not just fine-tuned on CoT.

Let’s start with a toy example of why we need generator prompts. Suppose I want a list of different colors. So I feed this prompt to Gemini 1000 times. This does poorly: I get only 33 unique outputs from 1000 runs. I need more randomness.

Ok, here's the trick: during instruction finetuning, we add uniform random noise to the word embeddings.
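
As a rough illustration of the idea, here is a minimal sketch in plain Python. The function name `add_uniform_noise`, the `alpha` knob, and the `alpha / sqrt(L * d)` scaling are assumptions made for this example; the text above only says that uniform noise is added to the embeddings.

```python
import math
import random


def add_uniform_noise(embeddings, alpha=5.0, rng=None):
    """Return a noisy copy of a sequence of token embeddings.

    embeddings: list of L vectors, each a list of d floats.
    alpha: hypothetical noise-scale knob (an assumption for this sketch).
    """
    rng = rng or random.Random()
    seq_len = len(embeddings)
    dim = len(embeddings[0])
    # Assumed scaling: shrink the noise as the sequence gets longer or wider.
    scale = alpha / math.sqrt(seq_len * dim)
    return [
        [x + scale * rng.uniform(-1.0, 1.0) for x in vec]
        for vec in embeddings
    ]


# Toy input: 3 tokens with 4-dimensional embeddings.
clean = [[0.0] * 4 for _ in range(3)]
noisy = add_uniform_noise(clean, alpha=5.0, rng=random.Random(0))
```

In this sketch the noise would be applied only in the finetuning forward pass; the original embeddings are left untouched, so they can be used as-is afterwards.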

Llama1: Small models (7B & 13B) were trained on 1 trillion tokens. Large models saw 1.4T tokens.

Let’s focus in on the machine learning GPUs. You can see the value drop over time, until the H100 created an uptick. Note: I’m using today’s price for each card, but a similar downward trend also holds for the release prices.

First, if you don’t remember how watermarks work, you might revisit my original post on this issue: https://twitter.com/tomgoldsteincs/status/1618287665006403585?s=20

A typical human hears 20K words per day. By age five, a typical child should have heard 37 million words. A 50 year old should have heard 370M words.
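
The arithmetic behind those numbers is easy to check. The sketch below assumes a constant 20K words per day from birth and a 365-day year; both are simplifications made for the example.

```python
WORDS_PER_DAY = 20_000  # figure quoted in the text
DAYS_PER_YEAR = 365     # assumption: ignore leap days


def words_heard(age_years):
    """Total words heard by a given age at a constant daily rate."""
    return WORDS_PER_DAY * DAYS_PER_YEAR * age_years


for age in (5, 50):
    print(f"age {age}: {words_heard(age) / 1e6:.1f}M words")
# age 5: 36.5M words
# age 50: 365.0M words
```

Rounded to two significant figures, these are the 37 million and 370M quoted above.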

Prompts made easy (PEZ) is a gradient optimizer for text. It can convert images into prompts for Stable Diffusion, or it can learn a hard prompt for an LLM task. The method uses ideas from the binary neural nets literature that mash up continuous and discrete optimization.

This article, and a blog post by Scott Aaronson, suggest that OpenAI will deploy something similar to what I describe. The watermark below can be detected using an open source algorithm with no access to the language model or its API.

Here's an algorithmic reasoning problem where standard nets fail. We train resnet18 to solve little 13x13 mazes. It accepts a 2D image of a maze and spits out a 2D image of the solution. Resnet18 gets 100% test acc on unseen mazes of the same size. But something is wrong…

Prompts are fed to Stable Diffusion as binary code, with each letter/symbol represented as several bytes. Then a "tokenizer" looks for commonly occurring spans of adjacent bytes and groups them into a single known "word". Stable Diffusion only knows 49408 words.
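
To make the grouping step concrete, here is a toy byte-pair merge in plain Python. It is a sketch of the general idea only, not the actual Stable Diffusion tokenizer; the helper names `most_common_pair` and `merge_pair` and the sample string are made up for the example.

```python
from collections import Counter


def most_common_pair(tokens):
    """Return the most frequent pair of adjacent tokens, or None."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(tokens, pair):
    """Replace each non-overlapping occurrence of `pair` with one merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


# Start from single bytes and apply two merges.
tokens = [bytes([b]) for b in "low lower lowest".encode("utf-8")]
for _ in range(2):
    tokens = merge_pair(tokens, most_common_pair(tokens))
```

After two merges, each of the three occurrences of `low` has become a single token. A real tokenizer learns its merges once from a large corpus and stops when the vocabulary reaches a fixed size.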

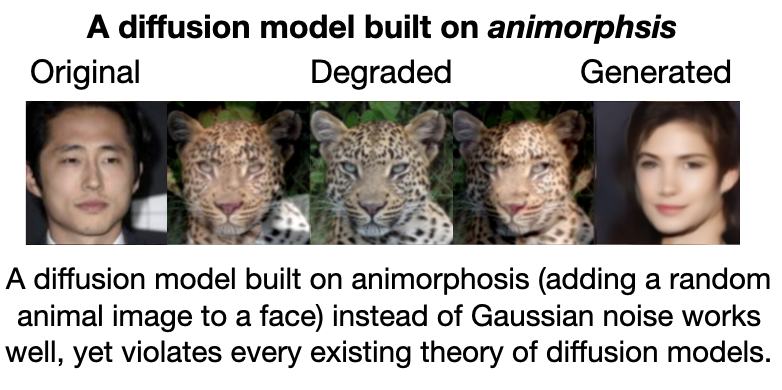

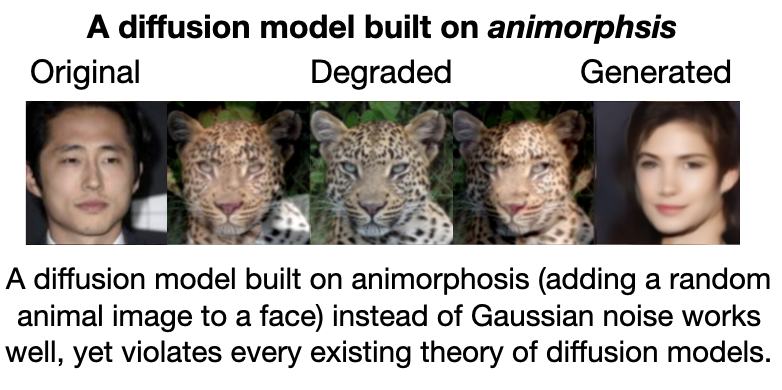

Diffusion models are powerful image generators, but they are built on two simple components: a function that degrades images by adding Gaussian noise, and a simple image restoration network for removing this noise.
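
The first component, the degradation function, fits in a few lines. This is a minimal sketch in plain Python; the name `add_gaussian_noise`, the `signal_level` parameter, and the square-root mixing weights are assumptions chosen for the example, and the restoration network is not shown.

```python
import math
import random


def add_gaussian_noise(pixels, signal_level, rng=None):
    """Degrade a flat list of pixel values with Gaussian noise.

    signal_level in [0, 1]: 1.0 keeps the image intact, 0.0 gives pure noise.
    (Hypothetical parameter; an assumed variance-preserving mix.)
    """
    rng = rng or random.Random()
    keep = math.sqrt(signal_level)
    noise = math.sqrt(1.0 - signal_level)
    return [keep * p + noise * rng.gauss(0.0, 1.0) for p in pixels]


image = [0.2, 0.5, 0.9, 0.1]  # toy 4-pixel "image"
slightly_noisy = add_gaussian_noise(image, signal_level=0.9, rng=random.Random(0))
untouched = add_gaussian_noise(image, signal_level=1.0, rng=random.Random(0))
```

The second component would be a network trained to map outputs like `slightly_noisy` back toward `image`.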
