Woah!! 🤯

I got it working!!! 😮

🎉 Build an AI shopping assistant for your online store with #nocode🚀

In this demo, I make an AI chatbot from a @Flipkart store spreadsheet export in seconds.

The future of ecommerce? 🛍️🛒

How it works 👀⬇️🧵

@Flipkart chatshape.com lets you make a custom AI chatbot for your website, but some users wanted this:

Upload CSV 👉 Get AI chatbot trained on your store's items!

We can do that! Now we need filters, but why add buttons when we have #LLM?

Chat UX > Traditional UI @dharmesh 😉

@Flipkart @dharmesh I used the @LangChainAI self-query retriever. Basically, we chain LLMs together.

One LLM is responsible for constructing filters on the metadata (like cost) from the natural language query:

"Get me🥻dress types under $400"

The filtered results are passed to your main LLM
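
Here's a rough sketch of that two-step flow in plain Python. It is not the chatshape.com code and not LangChain's API: the catalog rows, `build_filter`, `apply_filter`, and `build_prompt` are all hypothetical names, and a regex stands in for the filter-building LLM so the example runs without any API key.

```python
import re
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    category: str
    price: float  # metadata field the filter targets


# Hypothetical catalog rows, standing in for a store's CSV export.
CATALOG = [
    Product("Silk saree", "dress", 350.0),
    Product("Evening gown", "dress", 520.0),
    Product("Running shoes", "footwear", 120.0),
]


def build_filter(query: str) -> dict:
    """Stand-in for the first LLM: natural language in, structured filter out.

    A real setup would prompt an LLM here; a regex keeps the sketch runnable.
    """
    structured = {}
    match = re.search(r"under \$?(\d+(?:\.\d+)?)", query)
    if match:
        structured["max_price"] = float(match.group(1))
    for category in {p.category for p in CATALOG}:
        if category in query.lower():
            structured["category"] = category
    return structured


def apply_filter(products, structured):
    """Keep only the products whose metadata satisfies the structured filter."""
    results = []
    for p in products:
        if "max_price" in structured and p.price >= structured["max_price"]:
            continue
        if "category" in structured and p.category != structured["category"]:
            continue
        results.append(p)
    return results


def build_prompt(query, products):
    """Format the filtered results as context for the main LLM (not called here)."""
    lines = [f"- {p.name} ({p.category}, ${p.price:.2f})" for p in products]
    return (
        "Answer using only these products:\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {query}"
    )


query = "Get me dress types under $400"
structured = build_filter(query)
matches = apply_filter(CATALOG, structured)
prompt = build_prompt(query, matches)
print(prompt)
```

The main LLM only ever sees the rows that survived the filter, so it can't recommend the $520 gown for an "under $400" request.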

@Flipkart @dharmesh @LangChainAI I think this is SUPER powerful because it combines the power of semantic search with more traditional filters

@gpt_index @jerryjliu0 talks about "Hybrid Search" here. My gut feeling is that this will be the ultimate combo used in leading AI products:

https://twitter.com/jerryjliu0/status/1652349432707575808?s=20
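
To make the combo concrete, here's a toy sketch of "traditional filter first, semantic ranking second". Everything in it is an assumption for illustration: the `ITEMS` rows are made up, `hybrid_search` is a hypothetical helper, and word-count vectors stand in for real embeddings (a production setup would use a learned embedding model and a vector store). "Hybrid search" can also mean other mixes, such as keyword plus vector search; this follows the filter-plus-semantic reading used in this thread.

```python
import math
from collections import Counter

# Made-up catalog rows: free text for semantic matching, price as metadata.
ITEMS = [
    {"text": "red silk saree for weddings", "price": 350.0},
    {"text": "red evening gown for weddings", "price": 520.0},
    {"text": "blue cotton kurta for daily wear", "price": 40.0},
]


def embed(text):
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_search(query, max_price, k=2):
    """Filter on metadata first, then rank the survivors by similarity."""
    candidates = [item for item in ITEMS if item["price"] < max_price]
    q = embed(query)
    ranked = sorted(
        candidates,
        key=lambda item: cosine(q, embed(item["text"])),
        reverse=True,
    )
    return ranked[:k]


results = hybrid_search("red dress for weddings", max_price=400)
for item in results:
    print(item["text"], item["price"])
```

The hard price filter guarantees nothing over budget shows up, while the similarity ranking handles the fuzzy part of the request.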

@Flipkart @dharmesh @LangChainAI @gpt_index @jerryjliu0 The only con I can see is that using multiple LLM calls instead of one does slow down the overall chat experience

Will be experimenting with solutions for this soon.

@Flipkart @dharmesh @LangChainAI @gpt_index @jerryjliu0 The CSV import and image display feature for chatshape.com is in beta testing now!

Want this AI for your store? DM me!

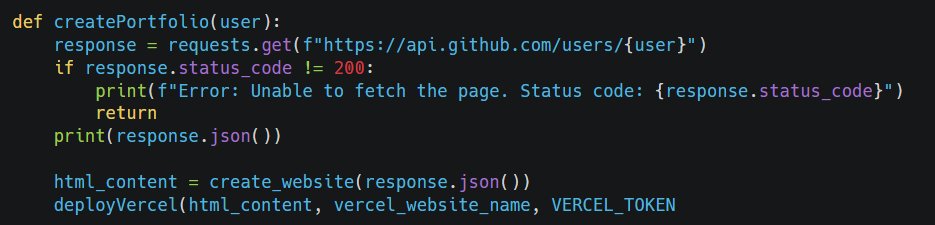

Liked this breakdown? Stay tuned for the code in a few days👀👀

Also, please share your feedback/ideas!

@stephsmithio @KennethCassel
