🦓 Self-supervised multimodal ML promises the next AI breakthrough. In our new work published in @Nature, we debut @cebraAI for self-supervised hypothesis- and discovery-driven science.

📝 doi.org/10.1038/s41586…

💻github.com/AdaptiveMotorC…

🦓 cebra.ai

🧵⬇️

First, this is a story about people 🥳. @stes_io & @jinnnnnlee are co-first authors, and it was an absolute pleasure to work with them & see the 🦓 CEBRA magic come to life. A few happy/fun bits are in the @Nature research briefing!

🔗nature.com/articles/d4158…

The work: we focused on 4 open-access datasets (synthetic, hippocampus, sensorimotor, and vision) spanning species & recording methods, to show the general performance and many features of #CEBRA

cc @AllenInstitute @sejdevries @PresNCM & more (🙏)

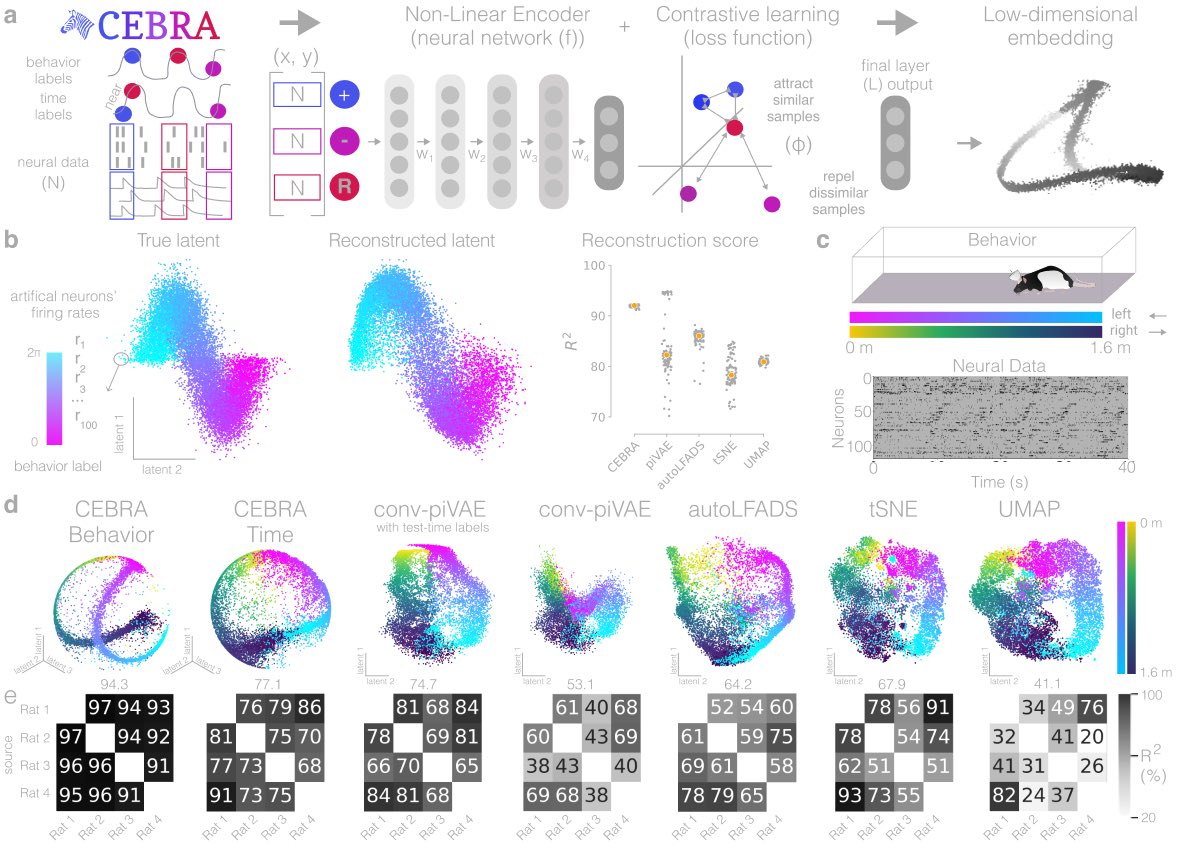

CEBRA's strength is its flexibility & performance: no generative model = no restrictive assumptions about the data, & it can be used without labels (CEBRA-Time) or with labels (CEBRA-Behavior).

Here, we demo CEBRA-Time on hippocampus data and then use hypothesis testing for a quantitative readout of model fit!
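The Time vs. Behavior split comes down to how positive pairs are chosen for a contrastive loss. Here is a minimal NumPy sketch of that idea, not the CEBRA library's implementation: it assumes an InfoNCE-style loss on unit-norm embeddings with a temperature, and time-contrastive sampling where the positive is the sample a fixed number of steps later. `infonce_loss` and `sample_time_pairs` are hypothetical helpers, and the embeddings are fixed synthetic points rather than the output of a trained encoder.

```python
import numpy as np

def infonce_loss(anchor, positive, negatives, temperature=1.0):
    """Mean InfoNCE loss for unit-norm embeddings (hypothetical helper).

    anchor, positive: (batch, dim); negatives: (batch, n_neg, dim).
    Dot products of unit vectors are cosine similarities.
    """
    pos_sim = np.sum(anchor * positive, axis=1) / temperature           # (batch,)
    neg_sim = np.einsum("bd,bnd->bn", anchor, negatives) / temperature  # (batch, n_neg)
    logits = np.concatenate([pos_sim[:, None], neg_sim], axis=1)        # positive sits in column 0
    # Numerically stable log-sum-exp over the positive and all negatives.
    max_logit = logits.max(axis=1, keepdims=True)
    log_norm = max_logit[:, 0] + np.log(np.exp(logits - max_logit).sum(axis=1))
    # -log softmax(positive) = log_norm - pos_sim, averaged over the batch.
    return float(np.mean(log_norm - pos_sim))

def sample_time_pairs(embeddings, batch_size, time_offset, n_neg, rng):
    """Time-contrastive sampling (assumption): the positive is the sample
    `time_offset` steps later; negatives are drawn uniformly from the recording."""
    n = embeddings.shape[0]
    idx = rng.integers(0, n - time_offset, size=batch_size)
    neg_idx = rng.integers(0, n, size=(batch_size, n_neg))
    return embeddings[idx], embeddings[idx + time_offset], embeddings[neg_idx]

rng = np.random.default_rng(0)
t = np.arange(1000) * 0.01
smooth = np.stack([np.cos(t), np.sin(t)], axis=1)  # unit vectors drifting slowly over time
shuffled = smooth[rng.permutation(len(smooth))]    # same points, temporal order destroyed

smooth_loss = infonce_loss(*sample_time_pairs(smooth, 256, 1, 32, rng), temperature=0.1)
shuffled_loss = infonce_loss(*sample_time_pairs(shuffled, 256, 1, 32, rng), temperature=0.1)
print(f"smooth: {smooth_loss:.2f}  shuffled: {shuffled_loss:.2f}")
```

Temporally smooth embeddings score a lower loss than the same points shuffled in time, which is the pressure a trained encoder would feel. For a CEBRA-Behavior-style setup, positives would instead be picked by similarity in the behavior labels. Comparing loss values across candidate labels or against shuffled controls is one way to read "quantitative readout of model fit"; the paper describes the actual procedure.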

One big #CEBRA moment for us was seeing the impressive video decoding results:

🐭🧠+🎥->🦓=

- high performance: >95% accuracy at frame prediction 🔥

- highly similar latents across Neuropixels & 2-photon data ✅

- differences in performance across the visual system of 🐭
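Frame prediction can be framed as classification on the embedding. A minimal sketch, assuming a k-nearest-neighbour readout (a common simple choice; this does not reproduce the paper's pipeline or its >95% figure) and synthetic points standing in for real Neuropixels or 2-photon embeddings. `knn_decode` is a hypothetical helper.

```python
import numpy as np

def knn_decode(train_emb, train_labels, test_emb, k=5):
    """Predict each test label by majority vote over its k most cosine-similar training points."""
    train = train_emb / np.linalg.norm(train_emb, axis=1, keepdims=True)
    test = test_emb / np.linalg.norm(test_emb, axis=1, keepdims=True)
    sims = test @ train.T                       # (n_test, n_train) cosine similarities
    nearest = np.argsort(-sims, axis=1)[:, :k]  # indices of the k most similar training points
    votes = train_labels[nearest]               # (n_test, k) candidate frame labels
    return np.array([np.bincount(row).argmax() for row in votes])

# Synthetic stand-in for an embedding: 30 movie frames, each shown in 10 repeats.
# Every frame maps to a fixed point on a circle (third dim = 0), plus small noise.
rng = np.random.default_rng(1)
n_frames, n_repeats = 30, 10
angles = 2 * np.pi * np.arange(n_frames) / n_frames
centers = np.stack([np.cos(angles), np.sin(angles), np.zeros(n_frames)], axis=1)
emb = centers[None, :, :] + 0.01 * rng.normal(size=(n_repeats, n_frames, 3))
labels = np.tile(np.arange(n_frames), (n_repeats, 1))

# Train on the first 9 repeats, test on the held-out last repeat.
train_emb, train_lab = emb[:-1].reshape(-1, 3), labels[:-1].reshape(-1)
test_emb, test_lab = emb[-1], labels[-1]

accuracy = np.mean(knn_decode(train_emb, train_lab, test_emb) == test_lab)
shuffled_acc = np.mean(knn_decode(train_emb, rng.permutation(train_lab), test_emb) == test_lab)
print(f"accuracy: {accuracy:.2f}  shuffled-label control: {shuffled_acc:.2f}")
```

The shuffled-label control keeps a decoding number honest: if it is not near chance (here 1/30), something is leaking between train and test.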

To be continued...

We won't tweet all the science today, so stay tuned for more highlights later 🖤🦓🍾

✨🦓🖤 Our Research Briefing can now be found at rdcu.be/dbhwe

- the problem, our solution, and future directions … 🚨including how CEBRA is not limited to neural data🚨: if you use t-SNE or UMAP, consider using CEBRA for more consistent, higher-accuracy results
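"More consistent" can be made concrete by asking how well an embedding from one run (or animal) maps linearly onto another. A minimal sketch, assuming consistency is scored as the R² of an affine least-squares fit between two embeddings of the same samples; `linear_consistency_r2` is a hypothetical helper, not the CEBRA library's API, and the data are synthetic.

```python
import numpy as np

def linear_consistency_r2(emb_a, emb_b):
    """R^2 of the best affine map from emb_a to emb_b (rows must be the same samples)."""
    X = np.hstack([emb_a, np.ones((emb_a.shape[0], 1))])  # append an intercept column
    W, *_ = np.linalg.lstsq(X, emb_b, rcond=None)          # least squares: emb_b ≈ X @ W
    ss_res = np.sum((emb_b - X @ W) ** 2)
    ss_tot = np.sum((emb_b - emb_b.mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot

rng = np.random.default_rng(2)
emb_run1 = rng.normal(size=(500, 3))
rotation, _ = np.linalg.qr(rng.normal(size=(3, 3)))  # random orthogonal matrix
emb_run2 = emb_run1 @ rotation + 2.0                 # same geometry, rotated and shifted
emb_unrelated = rng.normal(size=(500, 3))            # no shared structure

r2_same = linear_consistency_r2(emb_run1, emb_run2)
r2_unrelated = linear_consistency_r2(emb_run1, emb_unrelated)
print(f"same geometry: {r2_same:.3f}  unrelated: {r2_unrelated:.3f}")
```

A score near 1 means two runs agree up to a linear transform; computing it between repeated t-SNE/UMAP fits and repeated CEBRA fits on the same data is one way to check the consistency claim yourself.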
