In today's #vatniksoup, I'll be talking about the Russian style of online propaganda and disinformation, "Firehose of Falsehood". It's a commonly used Kremlin strategy for Russian information operations, which often prioritizes quantity over quality.

1/23

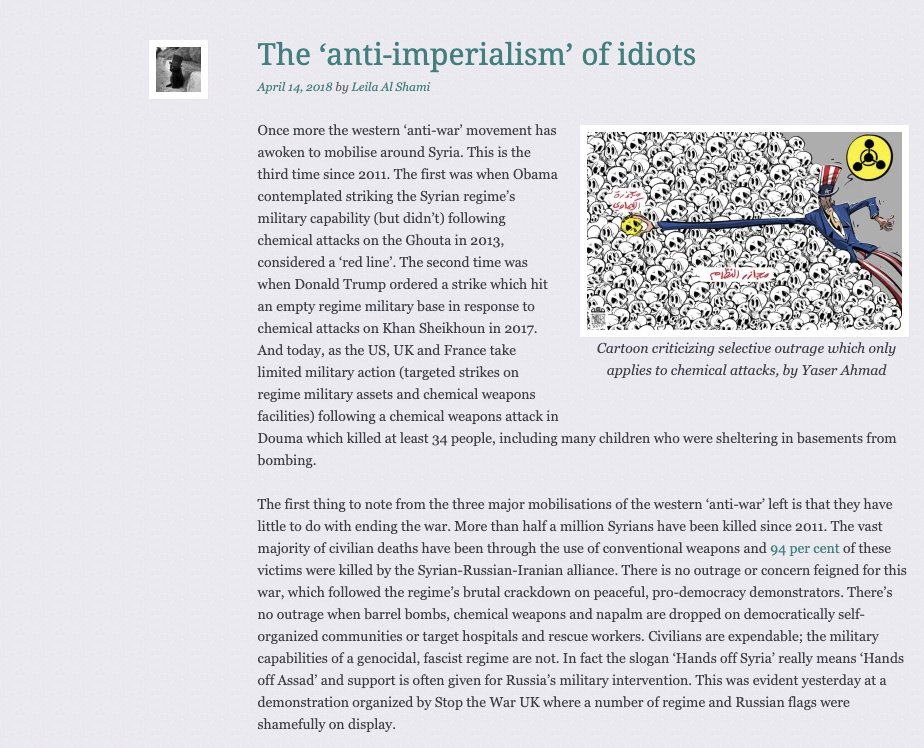

I have mentioned this particular strategy in many of my previous soups, but have never discussed it in more detail, so here goes. The term was originally coined by Paul & Matthews in their 2016 paper, The Russian "Firehose of Falsehood" Propaganda Model.

2/23

They based this name on two distinctive features: 1) a high-volume, multi-channel approach, and 2) a shameless willingness to spread disinformation.

Academic Giorgio Bertolin described Russian disinformation as entertaining, confusing and overwhelming.

3/23

The high volume, multi-channel approach means that these operatives attempt to control the narrative on each major social media platform. Russia has conducted, and is conducting, these operations on Facebook, Twitter, TikTok, Telegram, VKontakte, YouTube, and even on Tinder.

4/23

The volume of these operations shouldn't be underestimated: already back in 2015, more than 1000 paid trolls worked at Yevgeny Prigozhin's Internet Research Agency (IRA), the most well-known troll farm in Russia, and each commentator had a daily quota of 100 comments.

5/23

These numbers have probably gone up a LOT since then, and many more countries are using troll farms to conduct political campaigns or to spread propaganda.

One of the most famous cases of social media manipulation was the influence campaign around the Khashoggi murder.

6/23

These trolls work in shifts, and the work goes on around the clock, every day. A better description of these sweatshops would be troll factories, since they have turned trolling into an assembly line of propaganda and disinformation.

7/23

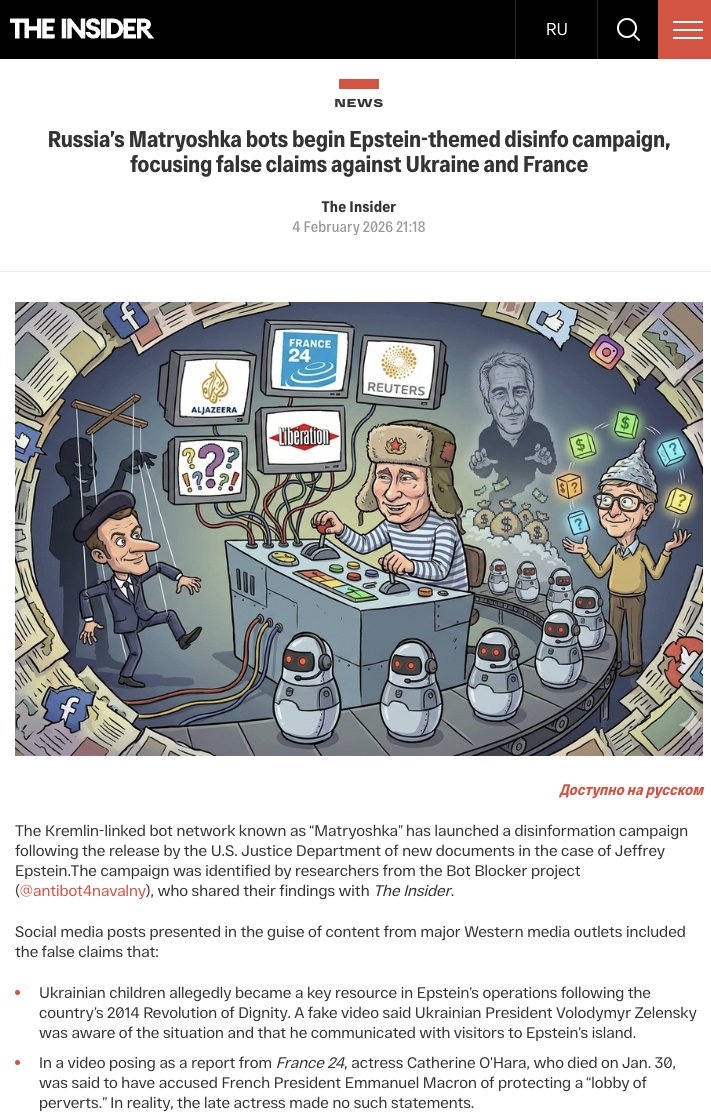

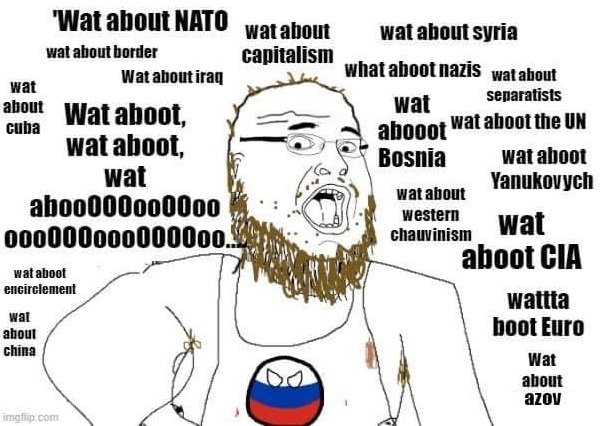

The high volume is accompanied by a willingness to spread disinformation. Russia often utilizes the "throwing shit at the wall to see what sticks" strategy, pushing out hundreds of contradictory and false narratives just to see if any of them start gaining traction.

8/23

Some examples of forgotten narratives include Zelenskyy leaving Kyiv after the invasion started, a secret NATO base in Mariupol, Poles trying to blow up a chlorine tank, birds as bioweapons, combat mosquitos, the use of a dirty bomb, and Ukrainian Satan worshipping.

9/23

Troll farms also often "borrow" ideas and narratives from conspiracy theorists. One example of this was the "bioweapons lab" theory started by a QAnon follower, Jacob Creech. Besides the Kremlin, the narrative was spread by people like Tucker Carlson and Steve Bannon.

10/23

There is also no commitment to any kind of consistency and these narratives can naturally be contradictory - as I mentioned, the goal is not to persuade but to confuse and overwhelm.

11/23

A lot of the "argumentation" from these trolls focuses on anecdotal evidence or faked sources. Good examples of this are the "Ukrainian Nazis" replies that flood the discussion with anecdotal image collages of Ukrainians waving Nazi flags or having Nazi tattoos.

12/23

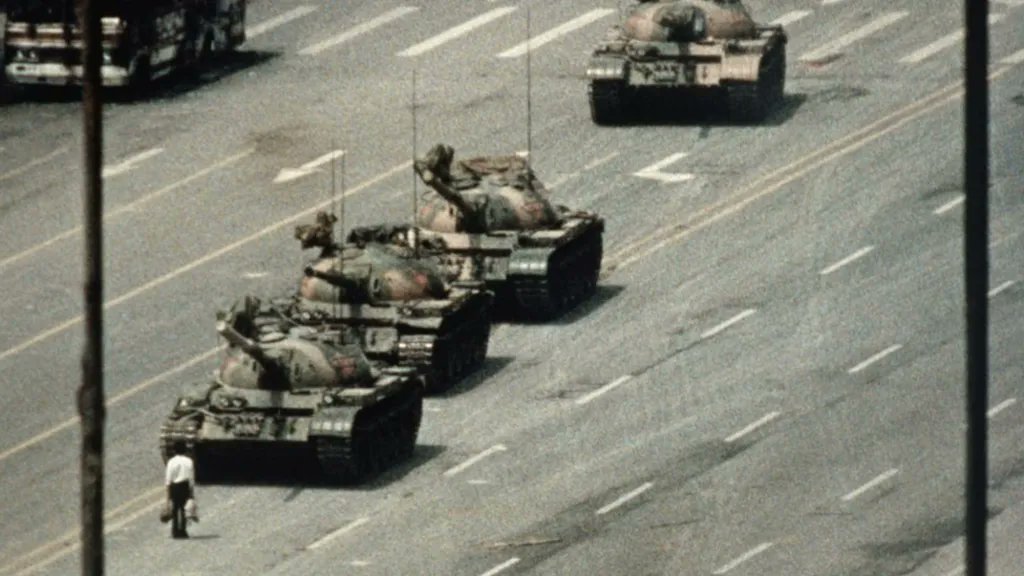

The firehose also often utilizes unsourced and out-of-context materials. Using (fake) imagery is an effective way to provoke strong reactions and emotions. Sometimes Russia produces false flag videos, but it has done so less often after various videos were geolocated to Russia.

13/23

This strategy works extremely well in so-called low trust environments, meaning countries or societies where trust in politicians, journalists and authorities is relatively low. Naturally, the effective use of this method degrades this trust even further.

14/23

The sheer number of messages and comments drowns out any competing arguments or viewpoints, and this also often makes any kind of fact-checking obsolete - by the time the information has been debunked, the topic has already changed many, many times.

15/23

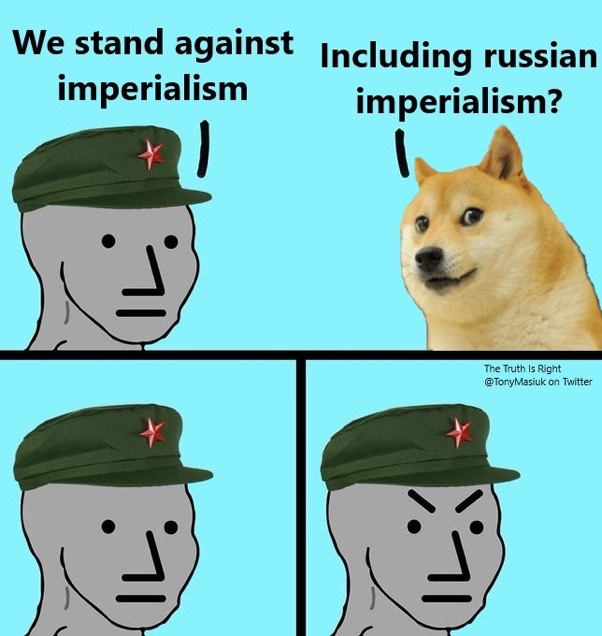

And this is exactly why #NAFO has been so efficient against this particular strategy: it counters the strategy with similar measures. High volume, nonsensical replies from braindead cartoon dogs...

16/23

...shut down the firehose of falsehood extremely well, and as a bonus ridicule the main sources of pro-Russian narratives, including the country's ex-president, the embassy and diplomat accounts.

17/23

As with most production, propaganda has been outsourced to cheaper sources. These days many of these troll farms have been moved from places like Russia and Macedonia to various African countries, including Nigeria and Ghana.

18/23

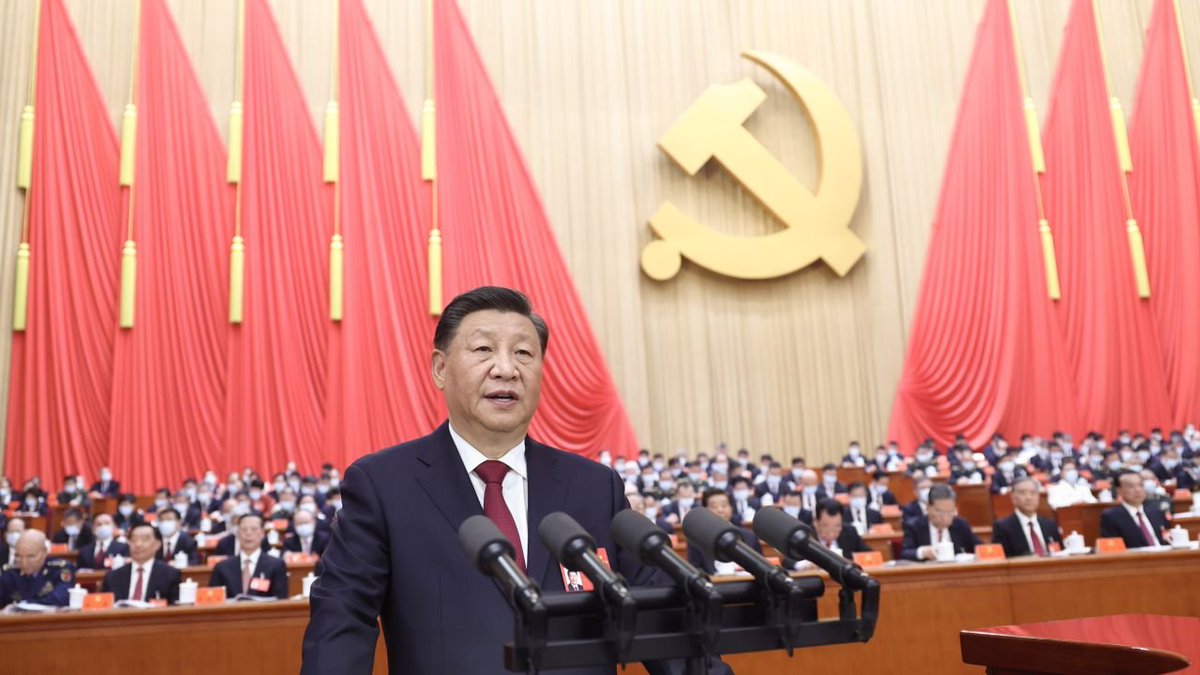

China has utilized the firehose in their own propaganda, and their most famous troll farm is the 50 Cent Army. The biggest difference between Russian and Chinese operations was that the Chinese focused on national networks, mostly neglecting the online world outside of China.

19/23

Russia also focuses more on bashing and blaming others, whereas China focuses on praising the CCP. After realizing the success of Russia in their info ops, though, China has also started using more aggressive strategies against their rivals, especially against the US.

20/23

According to the BBC, Russian and Chinese propaganda accounts are "thriving" on Twitter after @elonmusk sacked the team that was countering them. Allegedly the current system relies fully on automated detection systems.

21/23

@DarrenLinvill, an associate professor at Clemson University, said that one of these networks appears to originate from the IRA. They have also identified a troll farm from the opposite camp, with tweets supporting Ukraine and Alexei Navalny.

22/23

Before Musk took over the site, Twitter was relatively effective in removing troll farm accounts, but one can only assume that this is not the case anymore.

As is tradition, social media giants prioritize profits over safety.

23/23

Support my work: buymeacoffee.com/PKallioniemi

Past soups: vatniksoup.com

Related soups:

Russian propaganda themes:

https://twitter.com/P_Kallioniemi/status/1611810791744995330

Russian narratives:

https://twitter.com/P_Kallioniemi/status/1634168118992949251

Social media manipulation:

https://twitter.com/P_Kallioniemi/status/1605106747575771138

Troll farms:

https://twitter.com/P_Kallioniemi/status/1601115023589658624
