🔹 If an ML model is not accurate, it makes prediction errors; the two main sources of these errors are Bias & Variance

🔹 In ML these errors will always be present, as there is always a slight difference between the model's predictions & the actual values

🔹 The main aim of ML/data science analysts is to reduce these errors in order to get more accurate results

🔹 In ML, an error is a measure of how accurately an algorithm can make predictions on a previously unseen dataset
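
A minimal sketch of measuring error on unseen data, assuming NumPy and a made-up linear dataset. The model is fitted on one part of the data, and its mean squared error (MSE) is then checked on a held-out part it never saw. The data, the split sizes and the choice of MSE are illustrative assumptions, not something the thread specifies.

```python
import numpy as np

# Hypothetical toy data: y = 2x + 1 plus Gaussian noise with standard deviation 1
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=200)
y = 2 * x + 1 + rng.normal(0, 1.0, size=200)

# Keep the last 50 points aside as "previously unseen" data
x_train, y_train = x[:150], y[:150]
x_test, y_test = x[150:], y[150:]

# Fit a straight line using the training part only
coeffs = np.polyfit(x_train, y_train, deg=1)

def mse(x_part, y_part):
    """Mean squared error of the fitted line on the given data."""
    return float(np.mean((np.polyval(coeffs, x_part) - y_part) ** 2))

train_error = mse(x_train, y_train)
test_error = mse(x_test, y_test)
print(f"train MSE = {train_error:.3f}, test MSE = {test_error:.3f}")
```

The noise here has variance 1, so both errors should land near 1. The held-out number is the one that reflects how the model does on new data.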

1⃣ Bias

Bias is the systematic difference between the model's average prediction & the actual/expected values. This difference is known as bias error, or error due to bias
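
Here is a rough way to see bias numerically, under assumed conditions. Pretend the true relationship is sin(2πx), draw many small noisy training sets, and fit a straight line to each one. Then compare the average prediction at a single point with the true value there. The true function, noise level and sample sizes are all hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(42)

def true_function(x):
    # Assumed "true" relationship for this toy example
    return np.sin(2 * np.pi * x)

def sample_training_set(n=30, noise=0.2):
    # A fresh noisy training set drawn from the assumed true function
    x = rng.uniform(0, 1, size=n)
    y = true_function(x) + rng.normal(0, noise, size=n)
    return x, y

x0 = 0.25          # point at which the error is measured
n_repeats = 500    # number of independent training sets

predictions = []
for _ in range(n_repeats):
    x, y = sample_training_set()
    coeffs = np.polyfit(x, y, deg=1)   # straight-line model
    predictions.append(np.polyval(coeffs, x0))

average_prediction = float(np.mean(predictions))
bias = average_prediction - float(true_function(x0))
print(f"average prediction = {average_prediction:.3f}, "
      f"true value = {float(true_function(x0)):.3f}, bias = {bias:.3f}")
```

A straight line cannot follow the sine curve. So the average prediction at x0 = 0.25 stays well below the true value of 1, however many training sets are averaged, and that persistent gap is the bias.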

🔹 It can be seen as the inability of an ML algorithm, such as Linear Regression, to capture the true relationship between the inputs & the target
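
Suppose Linear Regression is fitted to data that actually follows y = x². In this sketch a degree-1 `np.polyfit` stands in for Linear Regression, and the noise-free quadratic data is a made-up example. The fit leaves a systematic pattern in the errors: the line sits too high in the middle and too low at both ends.

```python
import numpy as np

# Made-up data whose true relationship is quadratic (no noise)
x = np.linspace(-3, 3, 61)
y = x ** 2

# Straight-line fit: coefficients are [slope, intercept]
line = np.polyfit(x, y, deg=1)
residuals = y - np.polyval(line, x)   # actual minus predicted

print("slope, intercept:", np.round(line, 3))
print("residuals at x = -3, 0, 3:", np.round(residuals[[0, 30, 60]], 3))
print("training MSE:", round(float(np.mean(residuals ** 2)), 3))
```

No choice of slope and intercept removes this pattern. The error comes from the model's straight-line assumption, not from noise.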

🔹 Every algorithm starts with some amount of bias, because bias comes from the simplifying assumptions a model makes to make the target function easier to learn

🔹 A high-bias model underfits: it misses the true pattern even in the training data, so it also cannot perform well on new data

🔹 A low-bias model makes fewer assumptions about the form of the target function

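🔹 The simpler the algorithm, the higher the bias: linear algorithms such as Linear Regression often have high bias

🔹 A nonlinear algorithm, such as a decision tree, on the other hand often has low bias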
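
Here is a small sketch of "simpler algorithm, higher bias". It uses polynomial degree as a stand-in for model complexity, which is an assumption rather than something the thread uses: degree 1 is a linear model, and higher degrees are more flexible nonlinear fits. Training error drops as flexibility grows, because the simpler model's assumptions stop it from bending to the curve. The data is synthetic.

```python
import numpy as np

# Synthetic data: an assumed sine-shaped true function plus a little noise
rng = np.random.default_rng(1)
x = rng.uniform(0, 1, size=40)
y = np.sin(2 * np.pi * x) + rng.normal(0, 0.1, size=40)

def training_mse(degree):
    """Mean squared error on the same data the polynomial was fitted to."""
    coeffs = np.polyfit(x, y, deg=degree)
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

for degree in (1, 3, 7):
    print(f"degree {degree}: training MSE = {training_mse(degree):.4f}")
```

A low training error alone does not mean the model will do well on new data. That is where variance comes in.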

2⃣ Variance

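🔹 Variance tells how much a random variable differs from its expected value, on average. In ML, it measures how much a model's predictions would change if it were trained on a different training dataset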
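
The definition Var(X) = E[(X − E[X])²] can be checked on a small hypothetical example: a fair six-sided die, where every outcome is equally likely. Python's standard `statistics.pvariance` computes the same population variance.

```python
import statistics

# Hypothetical random variable: outcome of a fair six-sided die
outcomes = [1, 2, 3, 4, 5, 6]

# E[X]: average of equally likely outcomes
expected_value = sum(outcomes) / len(outcomes)

# Var(X) = E[(X - E[X])^2]: average squared distance from the expected value
variance = sum((x - expected_value) ** 2 for x in outcomes) / len(outcomes)

print("E[X] =", expected_value)
print("Var(X) =", variance)
print("statistics.pvariance:", statistics.pvariance(outcomes))
```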

Low variance: there is only a small variation in the model's predictions of the target function when the training dataset changes

High variance: there is a large variation in the model's predictions of the target function when the training dataset changes
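
To see low vs high variance, retrain the same kind of model on many freshly drawn training sets and measure how much its prediction at a single point jumps around. This sketch assumes NumPy, a sine-shaped true function, and polynomial degree 1 vs degree 9 as the "simple" and "complex" models. All of these are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(7)

def sample_training_set(n=20, noise=0.3):
    # A fresh noisy training set from an assumed sine-shaped true function
    x = rng.uniform(0, 1, size=n)
    y = np.sin(2 * np.pi * x) + rng.normal(0, noise, size=n)
    return x, y

def prediction_variance(degree, x0=0.9, n_repeats=300):
    """Variance of the prediction at x0 across independently drawn training sets."""
    preds = []
    for _ in range(n_repeats):
        x, y = sample_training_set()
        coeffs = np.polyfit(x, y, deg=degree)
        preds.append(np.polyval(coeffs, x0))
    return float(np.var(preds))

low = prediction_variance(degree=1)
high = prediction_variance(degree=9)
print(f"degree 1 variance: {low:.4f}, degree 9 variance: {high:.4f}")
```

Near the edge of the data (x0 = 0.9), the degree-9 fit swings widely from one training set to the next. Its prediction variance therefore comes out much larger than the straight line's.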

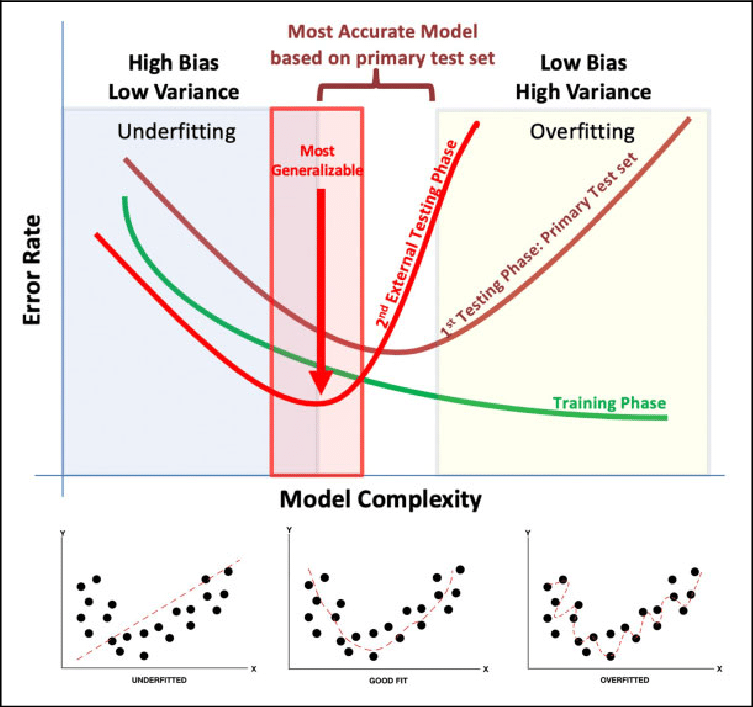

3⃣ Bias-Variance Trade-Off

So, we need to balance bias & variance errors: making a model more complex usually lowers its bias but raises its variance, and vice versa. This balance between bias error & variance error is known as the Bias-Variance trade-off
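
Under squared-error loss, this balance can be written down exactly. Assume the data follows y = f(x) + ε, where the noise ε has zero mean and variance σ², and write \hat f for the model learned from a random training set. The expected error at a point x then splits into three parts:

```latex
\mathbb{E}\big[(y - \hat f(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{Bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat f(x) - \mathbb{E}[\hat f(x)])^2\big]}_{\text{Variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

No model can remove the σ² term, which is why some error is always present. Only the bias and variance parts can be traded against each other.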

Bias-Variance trade-off is a central issue in supervised learning. Ideally, we need a model that accurately captures the regularities in the training data & simultaneously generalizes well to unseen data @pagejavatpoint

javatpoint.com/bias-and-varia…
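
Here is a sketch of the trade-off in action on a toy setup. It assumes NumPy, a sine-shaped true function, and polynomial degree as the measure of model complexity, none of which come from the thread. For each degree, it estimates bias² and variance on a grid of test points by refitting on many random training sets.

```python
import numpy as np

rng = np.random.default_rng(3)
x_test = np.linspace(0.1, 0.9, 17)        # fixed evaluation points
f_test = np.sin(2 * np.pi * x_test)       # assumed true function values there

def bias_variance(degree, n_train=25, noise=0.3, n_repeats=200):
    """Estimate average bias^2 and variance of a polynomial fit over x_test."""
    preds = np.empty((n_repeats, x_test.size))
    for i in range(n_repeats):
        # Draw a new noisy training set and refit the model
        x = rng.uniform(0, 1, size=n_train)
        y = np.sin(2 * np.pi * x) + rng.normal(0, noise, size=n_train)
        preds[i] = np.polyval(np.polyfit(x, y, deg=degree), x_test)
    mean_pred = preds.mean(axis=0)                          # E[f_hat(x)] estimate
    bias_sq = float(np.mean((mean_pred - f_test) ** 2))     # averaged over x_test
    variance = float(np.mean(preds.var(axis=0)))            # averaged over x_test
    return bias_sq, variance

results = {d: bias_variance(d) for d in (1, 3, 9)}
for d, (b2, var) in results.items():
    print(f"degree {d}: bias^2 = {b2:.4f}, variance = {var:.4f}, sum = {b2 + var:.4f}")
```

In a typical run, bias² falls as the degree grows while variance rises, and the sum tends to be smallest at an intermediate degree. That middle ground is the balance the trade-off refers to.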

🔹 If this thread was helpful to you:

1. Follow me @Sachintukumar for daily content

2. Connect with me on Linkedin: linkedin.com/in/sachintukum…

3. RT the tweet below to share it with your friends
