🧨 diffusers 0.17.0 is out and comes with new pipelines, improved LoRA support, `torch.compile()` speedups, and more ⏰

🪄 UniDiffuser

🦄 DiffEdit

⚡️ IF DreamBooth

💡 Support for A1111 LoRA

and more ...

Release notes 📝

github.com/huggingface/di…

1/🧶

🪄 UniDiffuser

🦄 DiffEdit

⚡️ IF DreamBooth

💡 Support for A1111 LoRA

and more ...

Release notes 📝

github.com/huggingface/di…

1/🧶

First, we have another cool pipeline, namely UniDiffuser, capable of performing **SIX different tasks** 🤯

It's the first multimodal pipeline in 🧨 diffusers.

Thanks to `dg845` for contributing this!

Docs ⬇️

huggingface.co/docs/diffusers…

2/🧶

It's the first multimodal pipeline in 🧨 diffusers.

Thanks to `dg845` for contributing this!

Docs ⬇️

huggingface.co/docs/diffusers…

2/🧶

Image editing pipelines are taking off pretty fast and DiffEdit joins that train!

With DiffEdit, you can perform zero-shot inpainting 🎨

Thanks to `clarencechen` for contributing this!

Docs ⬇️

huggingface.co/docs/diffusers…

3/🧶

With DiffEdit, you can perform zero-shot inpainting 🎨

Thanks to `clarencechen` for contributing this!

Docs ⬇️

huggingface.co/docs/diffusers…

3/🧶

With Stable Diffusion DreamBooth, it's very difficult to get good results on faces.

To this end, @williamLberman added support for performing DreamBooth training with "IF" and the results are remarkably better!

Learn more here ⬇️

huggingface.co/docs/diffusers…

4/🧶

To this end, @williamLberman added support for performing DreamBooth training with "IF" and the results are remarkably better!

Learn more here ⬇️

huggingface.co/docs/diffusers…

4/🧶

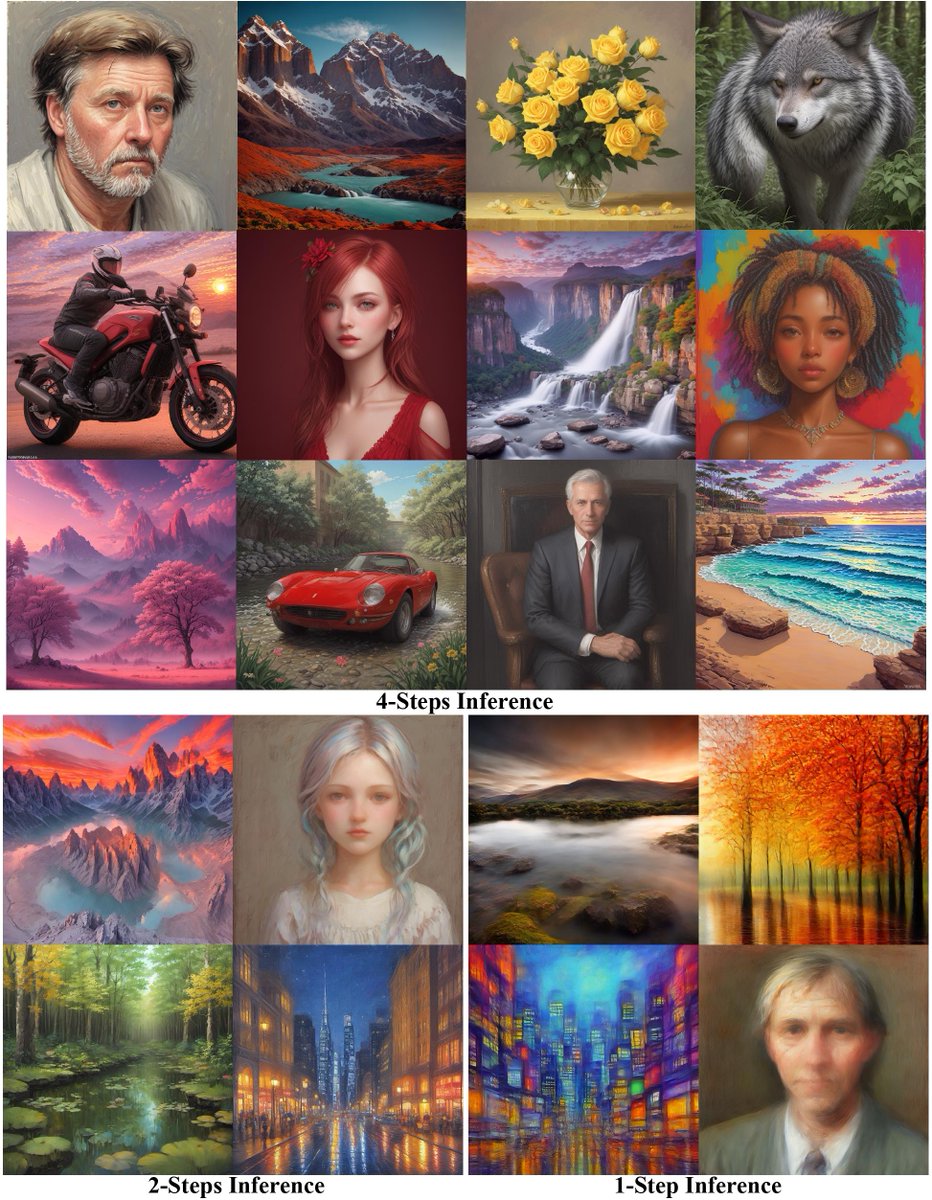

We now support A1111 formatted LoRA checkpoints directly from 🧨 diffusers 🥳

Thanks to `takuma104` for contributing this feature!

Check out the docs to learn more ⬇️

huggingface.co/docs/diffusers…

5/🧶

Thanks to `takuma104` for contributing this feature!

Check out the docs to learn more ⬇️

huggingface.co/docs/diffusers…

5/🧶

🧨 diffusers has supported LoRA adapter training & inference for a while now. We've made multiple QoL improvements to our LoRA API. So, training LoRAs and performing inference with them should now be much more robust.

Check out the updated docs ⬇️

huggingface.co/docs/diffusers…

6/🧶

Check out the updated docs ⬇️

huggingface.co/docs/diffusers…

6/🧶

All these updates -- wouldn't have been possible without our dear community, and we're thankful to them 🤗

Be sure to check out the full release notes 📝

github.com/huggingface/di…

7/🧶

Be sure to check out the full release notes 📝

github.com/huggingface/di…

7/🧶

https://twitter.com/RisingSayak/status/1666871818035277825

• • •

Missing some Tweet in this thread? You can try to

force a refresh