ChatGPT is great for creating plans.

But it can't use YouTube videos as a knowledge base.

With @LangChainAI, you can!

I used @thedankoe's YouTube video on '4-hour workdays' and had AI create a detailed plan.

Let me show you how you can do it too, in just 8 steps.

#AI

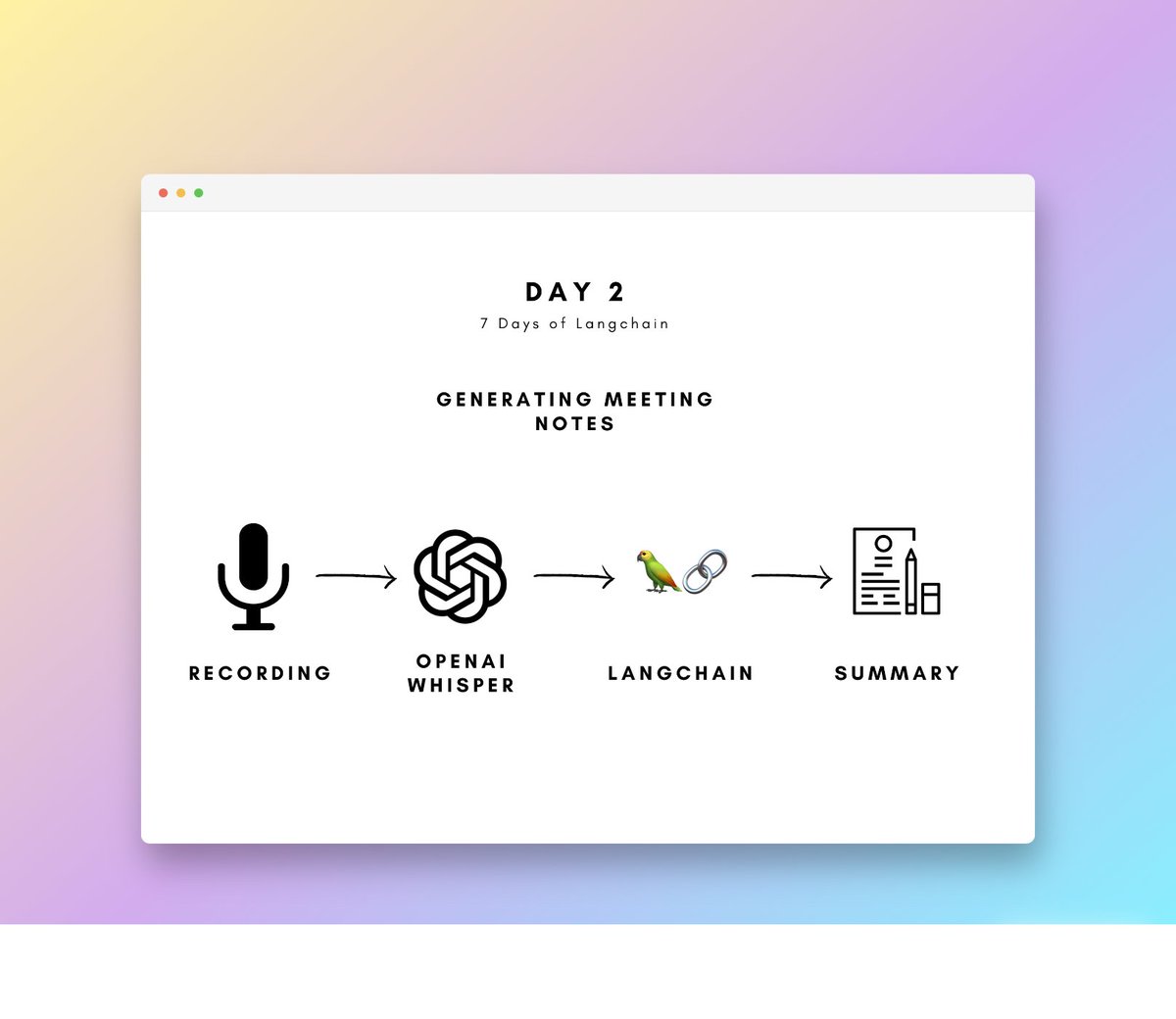

Before we dive in, this is day 1 of my '7 days of LangChain'.

Every day, I'll introduce you to a simple project that will guide you through the basics of LangChain.

Follow @JorisTechTalk to stay up-to-date.

If there's anything you'd like to see, let me know!

Let's dive in:

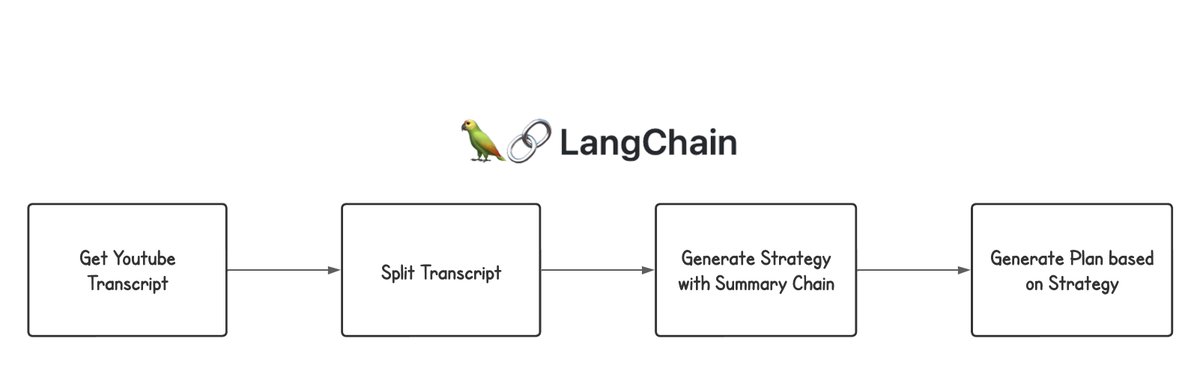

A high-level overview:

1️⃣ Load the YouTube transcript

2️⃣ Split the transcript into chunks

3️⃣ Use a summarization chain to create a strategy based on the content of the video

4️⃣ Use a simple LLM Chain to create a detailed plan based on the strategy.

And now for the code ⬇️

1. Loading the transcript.

LangChain's large library of document loaders makes this easy. Just use the YouTube loader to fetch the transcript.

You can choose any video you like. I chose Dan Koe's 'The 4-Hour Workday'.
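Before any YouTube loader can fetch a transcript, it has to pull the video ID out of the URL. A minimal standard-library sketch of that first step; `extract_video_id` is a hypothetical helper (not LangChain's API) that only handles the two common URL shapes, and the ID in the demo is a placeholder. In the 2023-era LangChain API, you'd simply pass the full URL to `YoutubeLoader.from_youtube_url` and it handles this for you.

```python
from urllib.parse import urlparse, parse_qs


def extract_video_id(url: str) -> str:
    """Return the video ID from a youtube.com/watch or youtu.be URL.

    Hypothetical helper for illustration; other URL shapes raise ValueError.
    """
    parsed = urlparse(url)
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    if host == "youtu.be":
        # Short links carry the ID as the path: https://youtu.be/<id>
        return parsed.path.lstrip("/")
    if host in ("youtube.com", "m.youtube.com") and parsed.path == "/watch":
        # Long links carry the ID in the "v" query parameter
        ids = parse_qs(parsed.query).get("v")
        if ids:
            return ids[0]
    raise ValueError(f"Unrecognized YouTube URL: {url}")


# Placeholder ID, not a real video
print(extract_video_id("https://www.youtube.com/watch?v=abc123XYZ_-&t=42s"))
print(extract_video_id("https://youtu.be/abc123XYZ_-"))
```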

2. Splitting the transcript into smaller chunks.

With the new 16K-context model, you may be able to skip this step, as long as the whole transcript fits in the context window. Still, it's good to understand how splitting works.

Use larger chunks so each one carries more context.

Add some overlap so that ideas spanning a chunk boundary aren't lost.
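To see what chunk size and overlap actually do, here is a simplified splitter that cuts purely by character count. It's a hypothetical stand-in, not LangChain's implementation: `RecursiveCharacterTextSplitter(chunk_size=..., chunk_overlap=...)` also tries to break on paragraph, sentence and word boundaries.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into chunks of chunk_size characters; neighbours share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each new chunk's start moves forward
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


transcript = "abcdefghijklmnopqrstuvwxyz"  # toy stand-in for a real transcript
for chunk in split_with_overlap(transcript, chunk_size=10, chunk_overlap=3):
    print(chunk)
```

Each chunk starts with the last 3 characters of the previous one, so a sentence cut at a boundary still appears whole in at least one chunk when the overlap is large enough.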

3. Create the prompt templates

Prompting is key!

Creating great prompts is both an art and a science. I'll dive deeper into this in a later thread.

One general tip: Be clear about what you want the model to do. Don't assume it 'knows' what you want.

Prompts: ⬇️

Since we'll be using a 'refine' summary chain, we'll need two prompts:

1️⃣ For the initial strategy based on the first chunk.

2️⃣ For refining the created strategy based on the subsequent chunks.

Play around with this. Include as much info as you like.
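Both refine prompts are plain templates with placeholders. In LangChain's refine summarize chain, the initial prompt receives a chunk as `{text}`, and the refine prompt receives the current answer as `{existing_answer}` plus the next chunk as `{text}`. Those are the chain's default variable names in the 2023-era API; treat them as an assumption if your version differs. The template wording below is a hypothetical example, not the author's prompts, and plain `str.format` stands in for `PromptTemplate`.

```python
# Hypothetical wording; only the {text} / {existing_answer} placeholders matter here.
INITIAL_TEMPLATE = (
    "You are a productivity coach. Based on this part of a video transcript, "
    "write a strategy for working 4 hours a day:\n\n{text}\n\nSTRATEGY:"
)

REFINE_TEMPLATE = (
    "Here is the strategy so far:\n{existing_answer}\n\n"
    "Refine it using this additional part of the transcript. "
    "If the new text adds nothing useful, return the strategy unchanged.\n\n"
    "{text}\n\nREFINED STRATEGY:"
)

# Fill the placeholders the way the chain would for chunk 1 and chunk 2
first_prompt = INITIAL_TEMPLATE.format(text="<first transcript chunk>")
refine_prompt = REFINE_TEMPLATE.format(
    existing_answer="<strategy from the previous step>",
    text="<next transcript chunk>",
)
print(first_prompt)
print(refine_prompt)
```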

4. Initialize the large language model.

Here, you can use any model you prefer. I use OpenAI's GPT-3.5 Turbo 16K model for its speed and larger context window.

Try out different temperatures.

Higher temperature ➡️ higher randomness ➡️ more 'creativity'
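Why does temperature change randomness? Conceptually, the model's raw scores for candidate next tokens are divided by the temperature before a softmax turns them into probabilities: below 1 sharpens the distribution toward the top token, above 1 flattens it, so less likely tokens get picked more often. A toy illustration with made-up scores for three tokens (real models do this over their whole vocabulary, and providers may add other sampling steps); this sketch requires temperature > 0.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate next tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperature almost all probability lands on the first token; at high temperature the three options move closer together.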

5. Initialize and run the chain

We're using a summarization chain.

Because we're using custom prompts, it doesn't actually summarize the video; it builds the strategy from the video's content.

With 'verbose' set to True, the chain prints each prompt it sends to the model, so you can follow its 'thought process'.
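A 'refine' chain drafts an answer from the first chunk, then makes one more model call per remaining chunk, each time passing in the current answer and the next chunk. A minimal sketch of that control flow, using hypothetical names (`refine_chain`, `fake_llm`) and a stub model instead of OpenAI so it runs offline. In LangChain, this is what the refine summarize chain does for you (in the 2023-era API, `load_summarize_chain(llm, chain_type="refine", question_prompt=..., refine_prompt=...)`).

```python
from typing import Callable


def refine_chain(chunks: list[str], llm: Callable[[str], str],
                 initial_template: str, refine_template: str,
                 verbose: bool = False) -> str:
    """Draft an answer from the first chunk, then refine it once per remaining chunk."""
    if not chunks:
        raise ValueError("need at least one chunk")
    answer = ""
    for i, chunk in enumerate(chunks):
        if i == 0:
            prompt = initial_template.format(text=chunk)
        else:
            # Each refine step sees the running answer plus the next chunk
            prompt = refine_template.format(existing_answer=answer, text=chunk)
        if verbose:
            print(f"--- prompt {i + 1} ---\n{prompt}")  # similar in spirit to verbose=True
        answer = llm(prompt)
    return answer


def fake_llm(prompt: str) -> str:
    # Stub model for an offline demo: it just upper-cases whatever it is sent
    return prompt.upper()


result = refine_chain(
    ["chunk one", "chunk two", "chunk three"],
    fake_llm,
    initial_template="{text}",
    refine_template="{existing_answer} | {text}",
    verbose=True,
)
print(result)
```

With N chunks this makes exactly N model calls, which is why refine chains are slower than a single call on a long-context model but can handle transcripts of any length.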

Optional step:

You can save the strategy to a file for later use with the following code.

Handy if you want to revisit it later or edit the strategy yourself.
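A minimal sketch of saving and reloading the strategy with Python's standard library. `save_text` and `load_text` are hypothetical helper names, and the demo writes into a temporary folder so it leaves nothing behind; in practice you'd pass a path such as strategy.txt. The same helpers work for saving the final plan in step 8.

```python
import tempfile
from pathlib import Path
from typing import Union


def save_text(text: str, path: Union[str, Path]) -> Path:
    """Write text to a UTF-8 file, creating parent folders if needed."""
    target = Path(path)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(text, encoding="utf-8")
    return target


def load_text(path: Union[str, Path]) -> str:
    """Read saved text back, e.g. to review or edit it before the next step."""
    return Path(path).read_text(encoding="utf-8")


strategy = "<strategy returned by the refine chain>"
with tempfile.TemporaryDirectory() as folder:
    saved = save_text(strategy, Path(folder) / "output" / "strategy.txt")
    print(load_text(saved) == strategy)
```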

6. Create the prompt template for writing a detailed plan based on the strategy.

We'll feed the output of the first chain (the strategy) into this prompt to create a detailed plan.

Again, be as specific as possible and play around with this.

7. Initialize and run the simple LLM Chain

For this step, we don't need anything fancy: just a simple LLM chain with a custom prompt.
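Steps 6 and 7 boil down to one template and one model call. A minimal sketch with a stub model so it runs offline; `PLAN_TEMPLATE`, `run_simple_chain` and `stub_llm` are hypothetical names, and the template wording is illustrative, not the author's. In the 2023-era LangChain API, the equivalent would be an `LLMChain(llm=llm, prompt=PromptTemplate(...))` run with the strategy as its input.

```python
from typing import Callable

# Hypothetical template; {strategy} receives the output of the first chain.
PLAN_TEMPLATE = (
    "Using the strategy below, write a detailed week-by-week plan "
    "with concrete daily actions.\n\nSTRATEGY:\n{strategy}\n\nDETAILED PLAN:"
)


def run_simple_chain(template: str, llm: Callable[[str], str], **inputs: str) -> str:
    """One prompt, one model call: fill the template, send it, return the reply."""
    prompt = template.format(**inputs)
    return llm(prompt)


sent_prompts = []


def stub_llm(prompt: str) -> str:
    # Stub model for an offline demo: records the prompt and returns a fixed reply
    sent_prompts.append(prompt)
    return "stub plan"


plan = run_simple_chain(PLAN_TEMPLATE, stub_llm, strategy="<saved strategy>")
print(plan)
```

Swapping `stub_llm` for a real model call is the only change needed to produce an actual plan.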

8. Save your plan to a text file and go execute.

Your detailed plan on how to reach a 4-hour workday is done!

But how much did this cost you? ⬇️

Bonus: Tracking your costs.

My cost for running this:

GPT-3.5 Turbo 16K: $0.03.

GPT-4: $0.37.

It's always good to keep track of what you're spending.

LangChain offers an easy solution for this. Just wrap your code in its OpenAI callback (get_openai_callback) and it will track the cost for you.
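LangChain's `get_openai_callback` does this bookkeeping for you: used as `with get_openai_callback() as cb:` around your chain calls, it reads token usage from each OpenAI response and exposes totals such as `cb.total_tokens` and `cb.total_cost`. To show the idea without an API key, here is a hand-rolled stand-in with the same `with ... as cb:` shape. `track_cost`, `CostTracker` and `record` are hypothetical names, and the prices and token counts are placeholders, not OpenAI's actual rates or this thread's usage.

```python
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class CostTracker:
    """Accumulates token usage and cost across model calls (hypothetical, for illustration)."""
    prompt_price_per_1k: float      # USD per 1,000 prompt tokens (placeholder rate)
    completion_price_per_1k: float  # USD per 1,000 completion tokens (placeholder rate)
    prompt_tokens: int = 0
    completion_tokens: int = 0

    def record(self, prompt_tokens: int, completion_tokens: int) -> None:
        self.prompt_tokens += prompt_tokens
        self.completion_tokens += completion_tokens

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def total_cost(self) -> float:
        return (self.prompt_tokens * self.prompt_price_per_1k
                + self.completion_tokens * self.completion_price_per_1k) / 1000


@contextmanager
def track_cost(prompt_price_per_1k: float, completion_price_per_1k: float):
    tracker = CostTracker(prompt_price_per_1k, completion_price_per_1k)
    yield tracker
    # Runs when the with-block finishes normally
    print(f"Tokens: {tracker.total_tokens}, cost: ${tracker.total_cost:.4f}")


# Placeholder prices and token counts, not real figures
with track_cost(prompt_price_per_1k=0.001, completion_price_per_1k=0.002) as cb:
    cb.record(prompt_tokens=3000, completion_tokens=500)  # e.g. after the strategy chain
    cb.record(prompt_tokens=1200, completion_tokens=800)  # e.g. after the plan chain
```

Prompt and completion tokens are priced separately because OpenAI bills them at different rates, which is also why the same pipeline can cost roughly ten times more on a larger model.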

Tweak the prompts for your particular use case and let me know what you'll be building.

Thanks to @hwchase17, @LangChainAI and @thedankoe for today.

See you tomorrow!

Day 1 of '7 days of @LangChainAI' ✅

Looking forward to tomorrow!

What do you want to see?

https://twitter.com/JorisTechTalk/status/1671157923677077506