Did you know you can use ChatGPT to summarize your (online) meetings?

With @LangChainAI and a couple of lines of code, you can!

Let me show you how in 6 simple steps 🧵

#AI

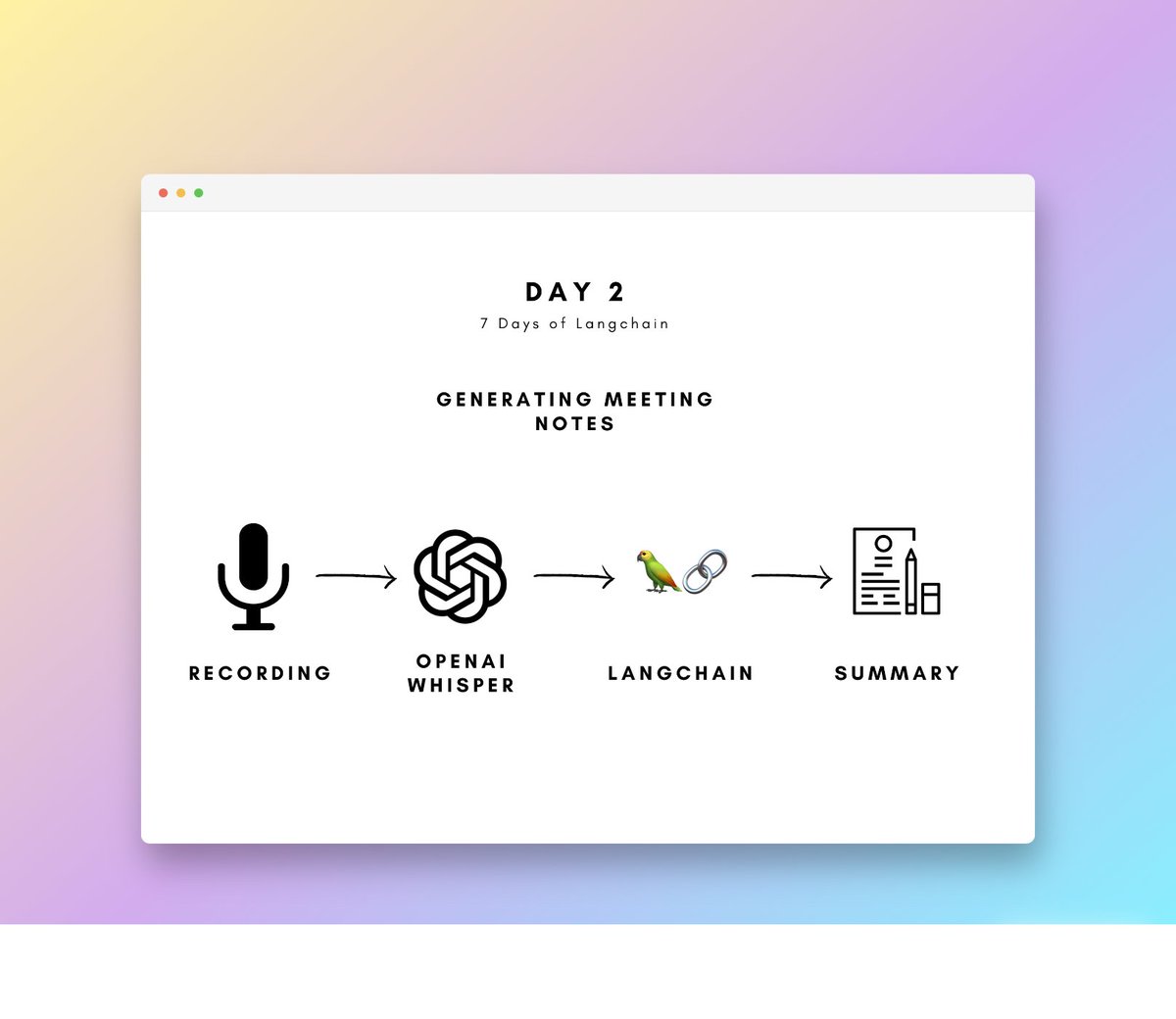

Before we dive in, this is day 2 of my '7 days of LangChain'.

Every day, I'll introduce you to a simple project that will guide you through the basics of LangChain.

Follow @JorisTechTalk to stay up-to-date.

If there's anything you'd like to see, let me know!

Let's dive in:

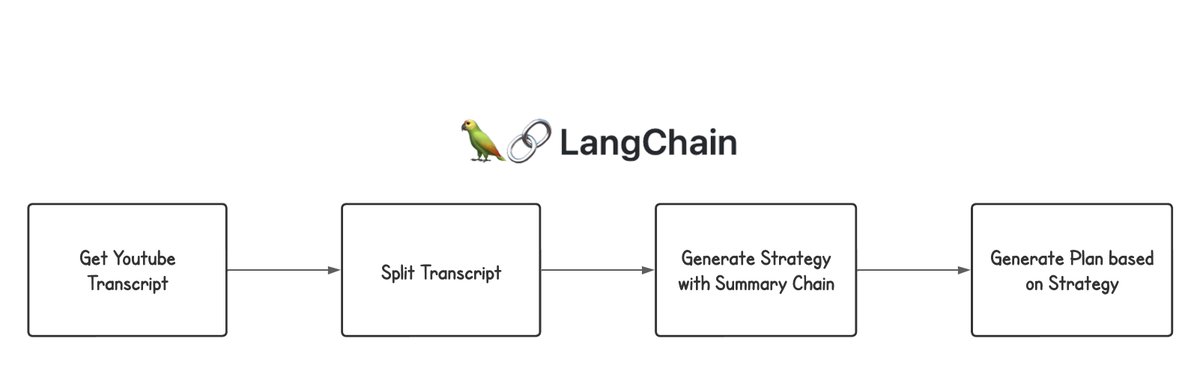

High-level overview of what's happening:

1️⃣ Load your audio file

2️⃣ Speech-to-text with Whisper

3️⃣ Split the transcript into chunks

4️⃣ Summarize the transcript

Let's dive into the code ⬇️

1. Open your audio file.

The Whisper API accepts files up to 25 MB; depending on the format and bitrate, that is roughly 20 minutes of audio.

Longer file?

Split it into smaller chunks (for example with an audio library such as pydub) and transcribe each chunk separately.
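
A minimal stdlib sketch of the splitting step, assuming uncompressed WAV input (Python's `wave` module can't read MP3 or M4A, which is where a library like pydub comes in). `split_wav` and `max_seconds` are hypothetical names, not from the thread. For scale: 10 minutes of 16 kHz, mono, 16-bit WAV is about 19 MB.

```python
import wave
from pathlib import Path


def split_wav(path: str, out_dir: str, max_seconds: int = 600) -> list[Path]:
    """Split an uncompressed WAV file into consecutive chunks of at most max_seconds."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    chunk_paths: list[Path] = []
    with wave.open(path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = src.getframerate() * max_seconds
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:
                break  # reached the end of the recording
            chunk_path = out / f"chunk_{index:03d}.wav"
            with wave.open(str(chunk_path), "wb") as dst:
                dst.setparams(params)  # same channels, sample width and rate as the source
                dst.writeframes(frames)  # the frame count in the header is patched automatically
            chunk_paths.append(chunk_path)
            index += 1
    return chunk_paths
```

Each chunk can then be sent to Whisper on its own and the transcripts concatenated in order.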

2. Call the Whisper API

Of course, you can use any speech-to-text API you prefer. I like Whisper for its accuracy and ease of use.

Any open-source alternatives with quality output?
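
A stdlib-only sketch of the Whisper call, assuming OpenAI's `POST /v1/audio/transcriptions` endpoint with `model=whisper-1` and an API key you supply. The official `openai` package wraps the same request; this version just makes the multipart upload explicit. `build_transcription_request` and `transcribe` are hypothetical helper names.

```python
import json
import urllib.request
import uuid
from pathlib import Path

TRANSCRIPTIONS_URL = "https://api.openai.com/v1/audio/transcriptions"


def build_transcription_request(audio_path: str, api_key: str) -> urllib.request.Request:
    """Build a multipart/form-data POST for OpenAI's speech-to-text endpoint."""
    boundary = uuid.uuid4().hex
    audio = Path(audio_path)
    parts = [
        # Form field selecting the Whisper model.
        f'--{boundary}\r\nContent-Disposition: form-data; name="model"\r\n\r\nwhisper-1\r\n'.encode(),
        # Form field carrying the audio file itself.
        (f'--{boundary}\r\nContent-Disposition: form-data; name="file"; filename="{audio.name}"\r\n'
         "Content-Type: application/octet-stream\r\n\r\n").encode(),
        audio.read_bytes(),
        f"\r\n--{boundary}--\r\n".encode(),
    ]
    return urllib.request.Request(
        TRANSCRIPTIONS_URL,
        data=b"".join(parts),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
        method="POST",
    )


def transcribe(audio_path: str, api_key: str) -> str:
    """Send the request and return the transcript (needs network access and a valid key)."""
    request = build_transcription_request(audio_path, api_key)
    with urllib.request.urlopen(request) as response:
        # The default JSON response carries the transcript in its "text" field.
        return json.loads(response.read())["text"]
```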

3. Splitting the transcript into chunks

With OpenAI's new 16k-context model (gpt-3.5-turbo-16k), you can fit a large amount of text into a single chunk. This helps the model 'understand' the full context and make connections across the whole conversation.

Use some overlap between chunks so that context isn't lost at the chunk boundaries.
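
A simplified sketch of chunking with overlap. This is a plain character-based splitter, not LangChain's `RecursiveCharacterTextSplitter` (which takes `chunk_size` and `chunk_overlap` too, but prefers to break on paragraph and line boundaries). `split_with_overlap` is a hypothetical name.

```python
def split_with_overlap(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunks of at most chunk_size characters; consecutive chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap  # how far each new chunk starts after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks
```

For example, `split_with_overlap("abcdefghij", chunk_size=4, overlap=1)` gives `["abcd", "defg", "ghij"]`. Note that sizes here are in characters, while the model's context limit is in tokens, so leave headroom for the prompt and the summary itself.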

4. Prompting

Prompting is key. It determines your output more than anything else.

Be as concise as you can. Tell the model exactly what the output should look like: bullet points, main takeaways, follow-up actions and much more.
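
Illustrative prompt templates; the wording is an example, not the author's prompt. The `{text}` and `{existing_answer}` placeholders follow the variable names LangChain's refine chain fills in by default, so these strings could be wrapped with `PromptTemplate.from_template(...)`.

```python
# Prompt for the first chunk: {text} is replaced with the transcript chunk.
INITIAL_PROMPT = """Write a summary of the following meeting transcript.

Format the output as:
- Main takeaways (bullet points)
- Decisions made
- Follow-up actions, with an owner if one is mentioned

TRANSCRIPT:
{text}

SUMMARY:"""

# Prompt for every later chunk: refine the running summary with new material.
REFINE_PROMPT = """Here is the summary of a meeting so far:
{existing_answer}

Refine it using the next part of the transcript below. Keep the same format and
only change the summary where the new material adds or corrects something.

TRANSCRIPT (continued):
{text}

REFINED SUMMARY:"""
```

Spelling out the output format in both prompts keeps the structure stable as the summary is refined chunk by chunk.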

5. Initialize and run the summary chain

I'm using the refine summarization chain. This is great when you're working with large files.

It generates an initial summary based on the first chunk and updates it with the subsequent chunks.
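
In LangChain this is `load_summarize_chain(llm, chain_type="refine")`. The stdlib sketch below shows only the loop a refine chain runs, with `llm` assumed to be any function mapping a prompt string to a completion string (a stand-in, not a LangChain API). The short templates are placeholders.

```python
from typing import Callable

INITIAL = "Summarize this meeting transcript:\n{text}"
REFINE = "Existing summary:\n{existing_answer}\n\nRefine it with this new part:\n{text}"


def refine_summarize(chunks: list[str], llm: Callable[[str], str]) -> str:
    """Summarize the first chunk, then fold each later chunk into the running summary."""
    if not chunks:
        return ""
    summary = llm(INITIAL.format(text=chunks[0]))
    for chunk in chunks[1:]:
        # Each call sees the summary so far plus one new chunk of transcript.
        summary = llm(REFINE.format(existing_answer=summary, text=chunk))
    return summary
```

The trade-off: n chunks cost n sequential LLM calls that can't run in parallel, unlike the `map_reduce` chain type, but every step sees the accumulated summary.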

6. Export the summary to a text file

Your meeting is summarized and you're ready to take action!

Once again, experiment with your prompts: they have a big impact on the resulting summary.
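
A small sketch of the export step; the dated file name is an arbitrary convention and `export_summary` is a hypothetical helper.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def export_summary(summary: str, out_dir: str = ".", meeting_date: Optional[date] = None) -> Path:
    """Write the summary to a dated UTF-8 text file and return the file's path."""
    meeting_date = meeting_date or date.today()
    path = Path(out_dir) / f"meeting_summary_{meeting_date.isoformat()}.txt"
    path.write_text(summary, encoding="utf-8")
    return path
```

Calling `export_summary(summary)` writes to the current directory using today's date.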

7. Possible future implementations

You can go wild with this. For example, you could use a Zapier integration to email the summarized meeting, create appointments in your calendar and much more.

Let me know what you're going to add.
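
A sketch of the Zapier idea, assuming a Zap that starts with the "Webhooks by Zapier" Catch Hook trigger: you POST JSON to the hook URL, and later steps in the Zap (such as Gmail or Google Calendar) can use the fields. The URL below is a placeholder, the `title`/`summary` field names are arbitrary, and `build_zapier_request` and `send_to_zapier` are hypothetical helpers.

```python
import json
import urllib.request

# Hypothetical placeholder: paste the URL Zapier shows for your own Catch Hook trigger.
ZAPIER_HOOK_URL = "https://hooks.zapier.com/hooks/catch/YOUR_ID/YOUR_HOOK/"


def build_zapier_request(summary: str, title: str, url: str = ZAPIER_HOOK_URL) -> urllib.request.Request:
    """Build a JSON POST that a Zapier webhook trigger can pass on to later Zap steps."""
    payload = {"title": title, "summary": summary}  # field names are our own choice
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_to_zapier(summary: str, title: str) -> int:
    """Send the summary to the webhook and return the HTTP status code."""
    with urllib.request.urlopen(build_zapier_request(summary, title)) as response:
        return response.status
```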

That concludes day 2 of '7 days of @LangChainAI'

Tomorrow's project: Creating mindmaps with @LangChainAI and @XmindHQ.

Follow @JorisTechTalk to stay up-to-date.

What else would you like to see?

Day 2 of '7 days of @LangChainAI' ✅

Tomorrow's project will be exciting: generating mindmaps for studying!

What else do you want to see?

https://twitter.com/733672774531723265/status/1671496626790096901
