I wonder if Dan's been losing any sleep? Maybe that's why he hasn't come forward with an explanation.

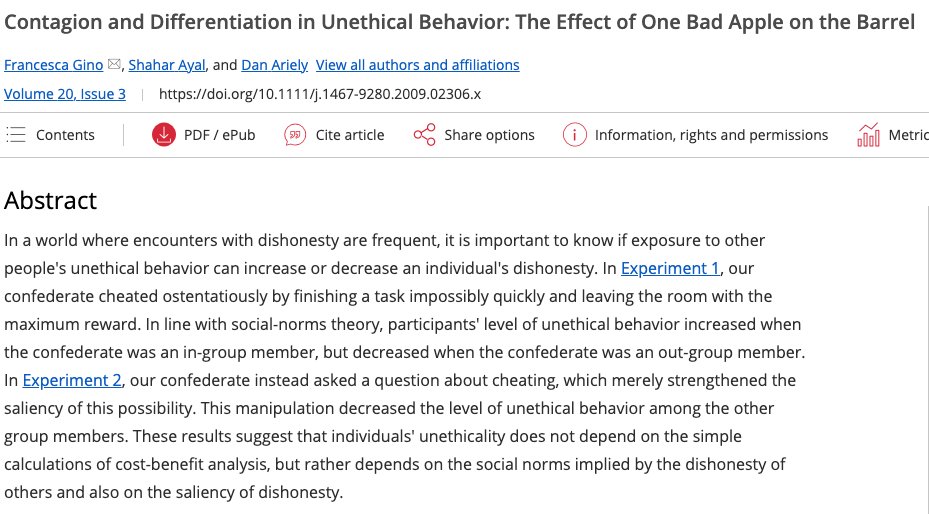

These are all studies that Dan co-authored with disgraced HBS prof. Francesca Gino. All I did to make this thread was go to Google Scholar & search "Gino Ariely". These are from page 1, which also includes this famous study, which both Gino and Ariely are confirmed to have faked.
