🔴 PERFUSION: a generative AI model from NVIDIA that fits on a floppy disk 💾

It takes up just 100KB. Yes, you heard it right, much less than any picture you take with your mobile phone! Why is this revolutionary, and why could it change everything?

I'll tell you 🧵👇

Perfusion is a really lightweight "text-to-image" personalization method: the trained model takes up just 100KB and trains in about 4 minutes.

🔗 Link: https://t.co/502nEzWL2e (research.nvidia.com/labs/par/Perfu…)

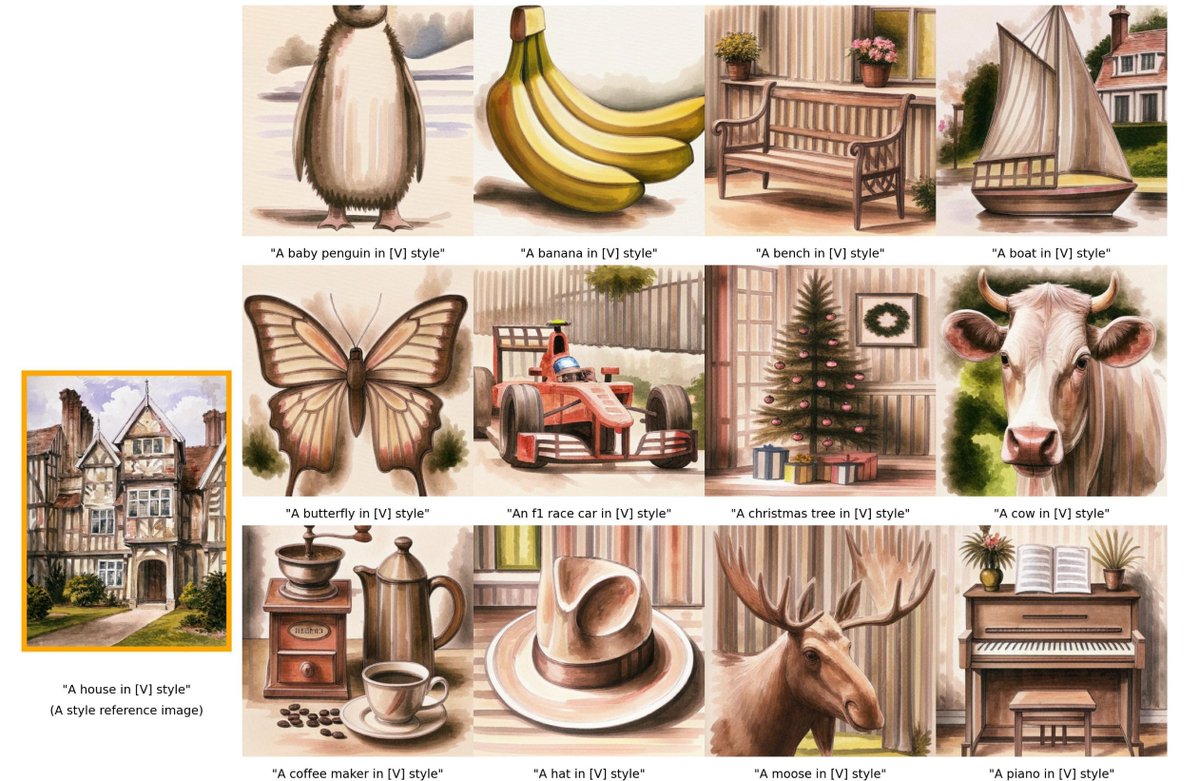

It lets you portray objects and characters creatively while preserving their identity, using a novel mechanism the authors call "Key-Locking."

Moreover, it lets you control the balance between visual fidelity and alignment with the text prompt at inference time, covering the entire Pareto front with just a single trained model.
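If "Pareto front" sounds abstract, here is a tiny Python sketch of the idea. The scores below are hypothetical numbers I made up for illustration (they are NOT from the paper), and `pareto_front` is just a helper name I chose: each pair is (visual fidelity, text alignment), and the front is the set of settings that no other setting beats on both axes at once.

```python
def pareto_front(points):
    """Return the points that no other point dominates (higher is better on both axes)."""
    front = []
    for p in points:
        # p is dominated if some other point is at least as good on both axes
        dominated = any(
            q != p and q[0] >= p[0] and q[1] >= p[1]
            for q in points
        )
        if not dominated:
            front.append(p)
    return sorted(front)


# Hypothetical (visual_fidelity, text_alignment) scores, one per inference setting.
# These values are invented for illustration only.
scores = [(0.9, 0.2), (0.7, 0.5), (0.6, 0.4), (0.4, 0.8), (0.3, 0.6), (0.2, 0.9)]

front = pareto_front(scores)
print(front)
```

The two settings that drop out are worse than some other setting on both axes. The claim in the thread is that a single trained model can reach every point along that front just by changing a setting at inference time, instead of training one model per trade-off.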

1️⃣ Such great optimization means that we will soon have truly powerful AI models integrated into our mobile phones, computers, etc. Much lighter, faster to train, and consuming less computing power.

2️⃣ The costs of training models will be drastically reduced in the future with optimizations like this and new techniques that allow everything to be streamlined.

3️⃣ If, in just 100KB, a new technique (key-locking) has achieved such a large increase in the coherence of objects/characters between generations, as in this example, it means that we have only SCRATCHED THE SURFACE of what future generative AI will be able to do.

In short, this is massive news, and I don't understand why it's going so unnoticed. Don't be fooled by "the low quality" of the images. The potential it has is truly MASSIVE.

If you liked this and would like me to continue writing similar threads, an RT on the first tweet of the thread will encourage me to keep doing so. Thanks! 😉👇

https://twitter.com/javilopen/status/1687795349719547905

More info here: decrypt.co/150861/nvidia-…

Watch out for this important detail 👇

https://twitter.com/mindplaydk/status/1686820514730221569?s=20

More info: fagenwasanni.com/news/nvidia-in…

Mentally, I'm already calling it: 'the miniLoras'
