Scraped data such as from Wikipedia is vital for NLP, but how reliable is it in low-resource settings?

🚀Happy to present our work "NusaWrites: Constructing High-Quality Corpora for Underrepresented and Extremely Low-Resource Languages" @AACL 2023🇮🇩

arxiv.org/abs/2309.10661

🚀Happy to present our work "NusaWrites: Constructing High-Quality Corpora for Underrepresented and Extremely Low-Resource Languages" @AACL 2023🇮🇩

arxiv.org/abs/2309.10661

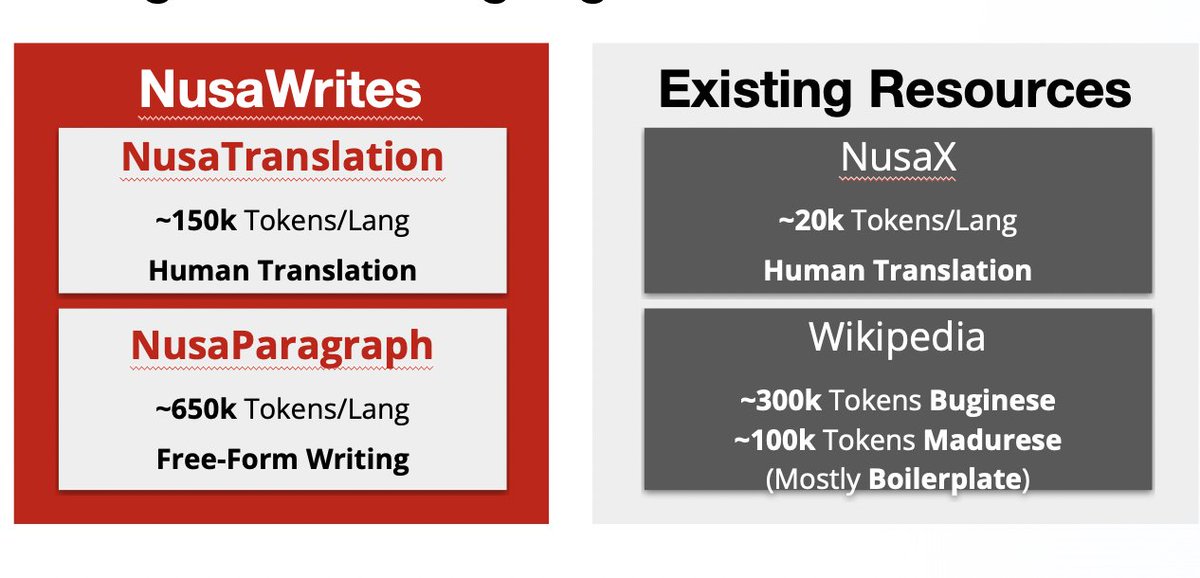

We explore 2 methods of building a corpus for 12 underrepresented Indonesian languages: by human translation, and by doing free-form paragraph writing given a theme.

We then compare their quality vs Wikipedia text.

We then compare their quality vs Wikipedia text.

When we compare Wikipedia data, both Nusa Translation (NusaT) and Nusa Paragraph (NusaP) are generally more lexically diverse and use fewer loan words.

We also realize that apparently some of the Wikipedia pages for low-resource languages are mostly boilerplate.

We also realize that apparently some of the Wikipedia pages for low-resource languages are mostly boilerplate.

Language Models trained with NusaP or NusaT also achieve lower perplexity on unseen test data in corresponding local languages, written by native speakers.

Due to how NusaT and NusaP were made, we can also convert these datasets into a benchmark dataset!

So we additionally perform the evaluation of various models on this benchmark.

So we additionally perform the evaluation of various models on this benchmark.

To conclude:

- We release NusaT and NusaP, high-quality corpus for 12 underrepresented languages

- Underrepresented languages corpus from Wikipedia does not represent the true language distribution

- We suggest alternative methods through Translation and free-text writing.

- We release NusaT and NusaP, high-quality corpus for 12 underrepresented languages

- Underrepresented languages corpus from Wikipedia does not represent the true language distribution

- We suggest alternative methods through Translation and free-text writing.

Thanks to the amazing work of all the authors:

@SCahyawijaya

@HolyLovenia

@FajriKoto

@DeaAdhista

@emmanuel_davee

@sarahoktaviani

@SabilMAk

@JhonsonLee

@Shadieqq

@wawancenggoro

@hanungwahyuning

@BryanWilie

@galihpradipta

@gentaiscool

@DavidMoeljadi

@AyuPurwarianti

@pascalefung

@SCahyawijaya

@HolyLovenia

@FajriKoto

@DeaAdhista

@emmanuel_davee

@sarahoktaviani

@SabilMAk

@JhonsonLee

@Shadieqq

@wawancenggoro

@hanungwahyuning

@BryanWilie

@galihpradipta

@gentaiscool

@DavidMoeljadi

@AyuPurwarianti

@pascalefung

• • •

Missing some Tweet in this thread? You can try to

force a refresh