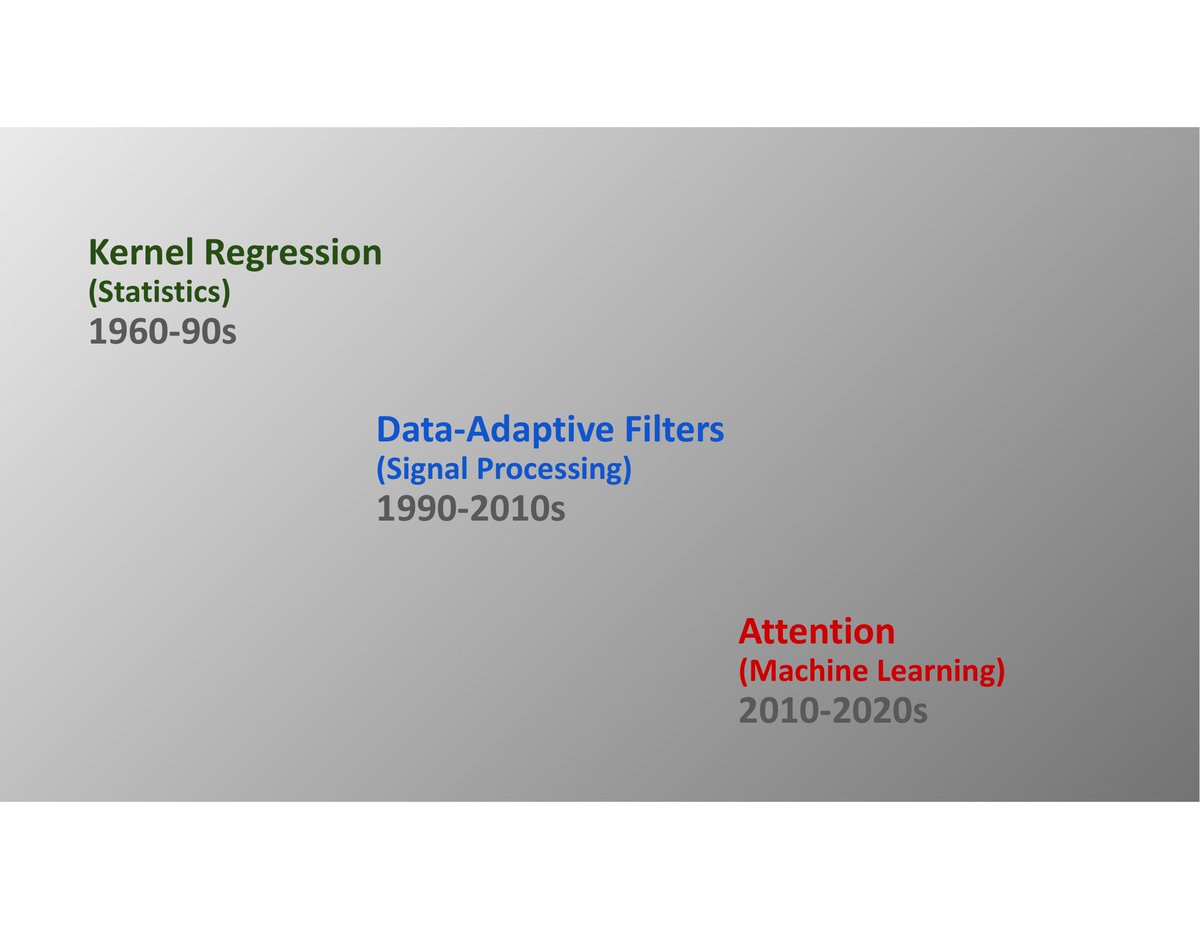

The Kalman Filter was once a core topic in EECS curricula. Given it's relevance to ML, RL, Ctrl/Robotics, I'm surprised that most researchers don't know much about it, and many papers just rediscover it. KF seems messy & complicated, but the intuition behind it is invaluable

1/4

1/4

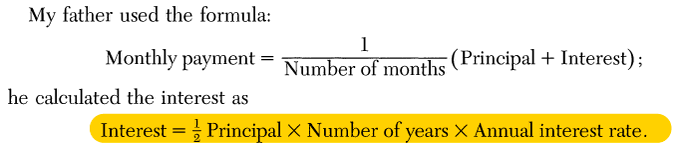

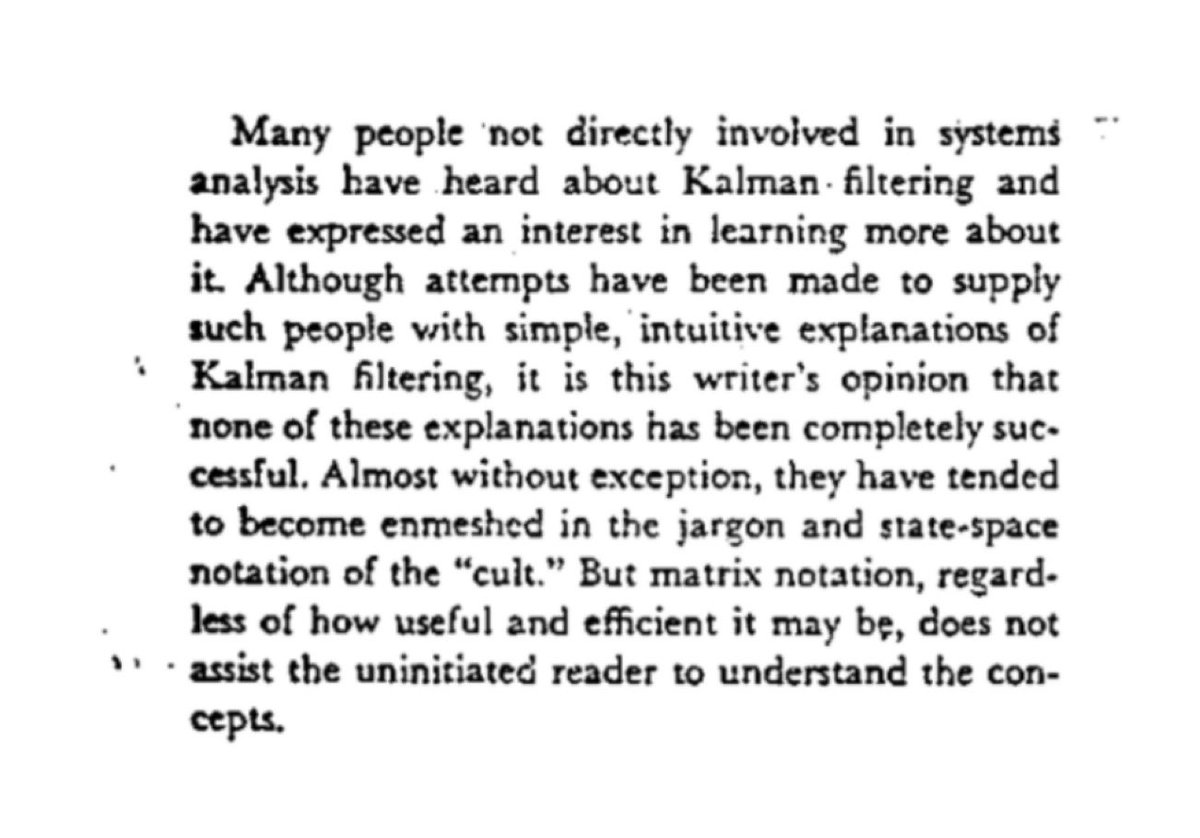

I once had to explain the Kalman Filter in layperson terms in a legal matter (no maths!). No problem, I thought. Yet despite being taught the subject by one of the greats (A.S. Willsky) & having taught the subject myself, I found this startlingly difficult to do.

2/4

2/4

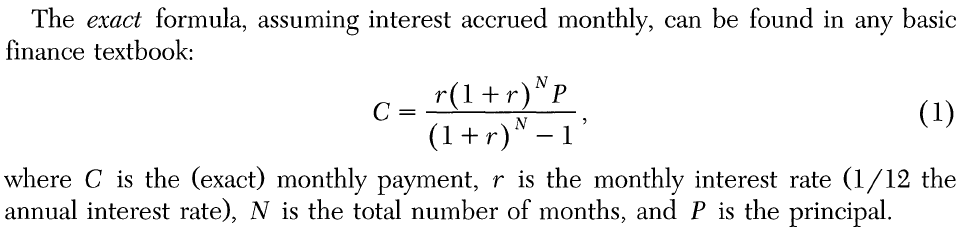

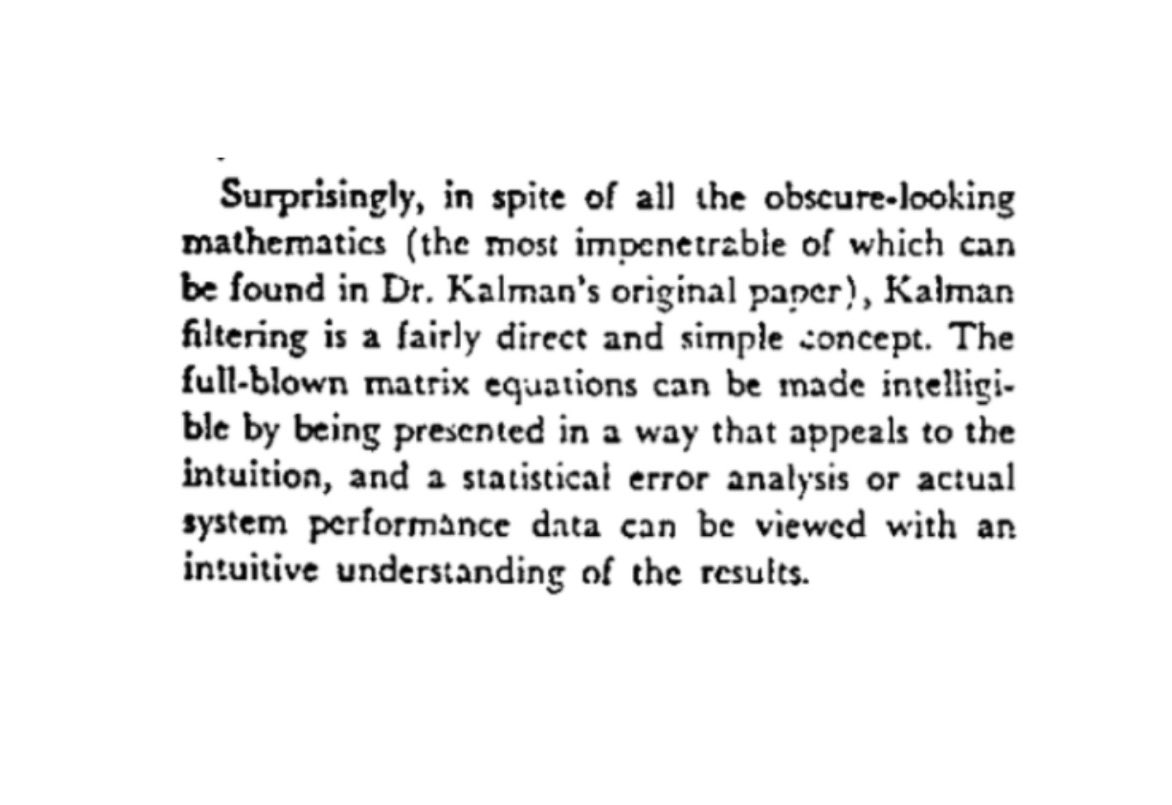

I was glad to find this little gem. It’s a 24-page writeup that is a great teaching tool, especially in introductory classes, and particularly at the undergraduate level.

The writeup seems to be out of print, but still available (albeit at a rather outrageous price)

3/4

The writeup seems to be out of print, but still available (albeit at a rather outrageous price)

3/4

One of the very first applications of the Kalman filter was in aerospace, namely NASA’s early space missions. There’s a wonderful historical account of how the Kalman Filter went from theory to practical tool for both NASA and the aerospace industry.

4/4 ntrs.nasa.gov/api/citations/…

4/4 ntrs.nasa.gov/api/citations/…

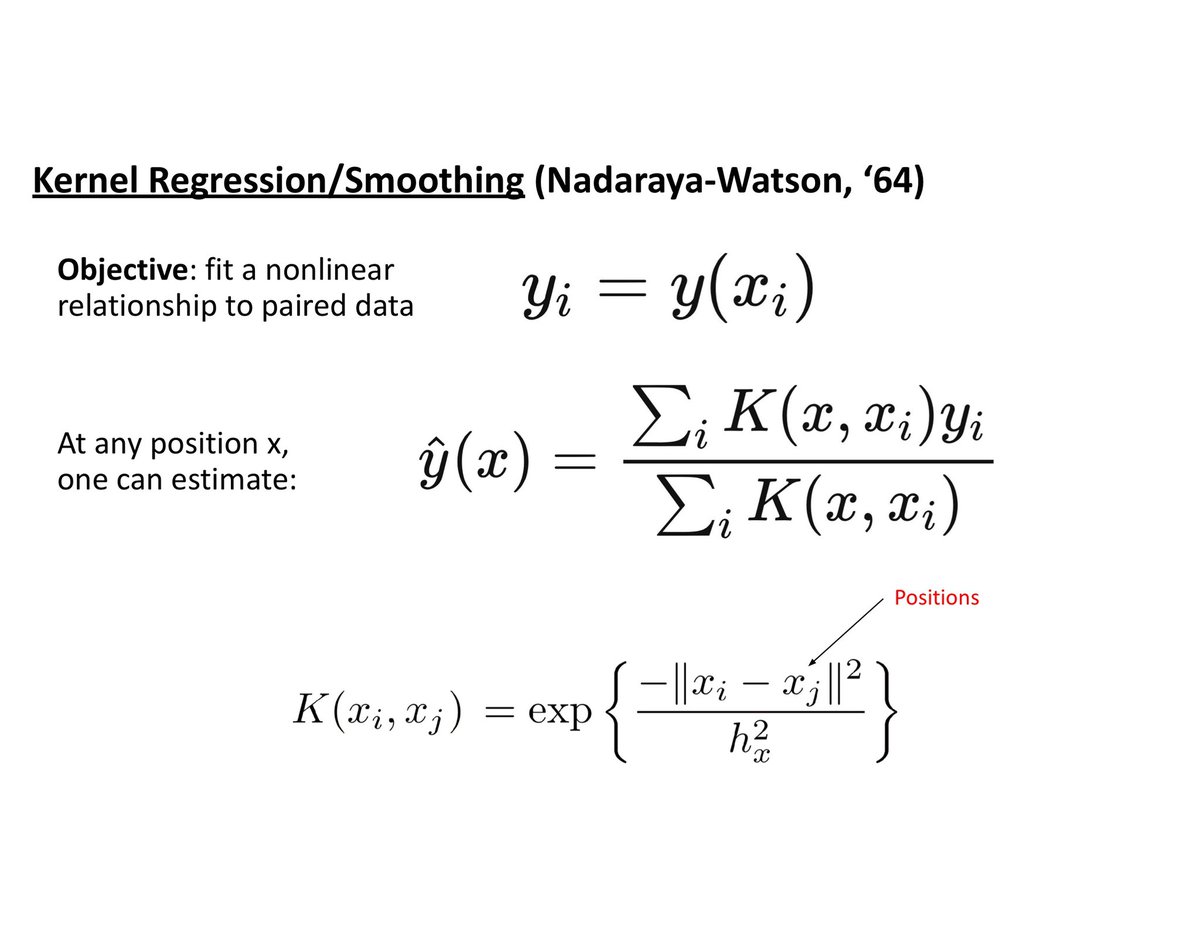

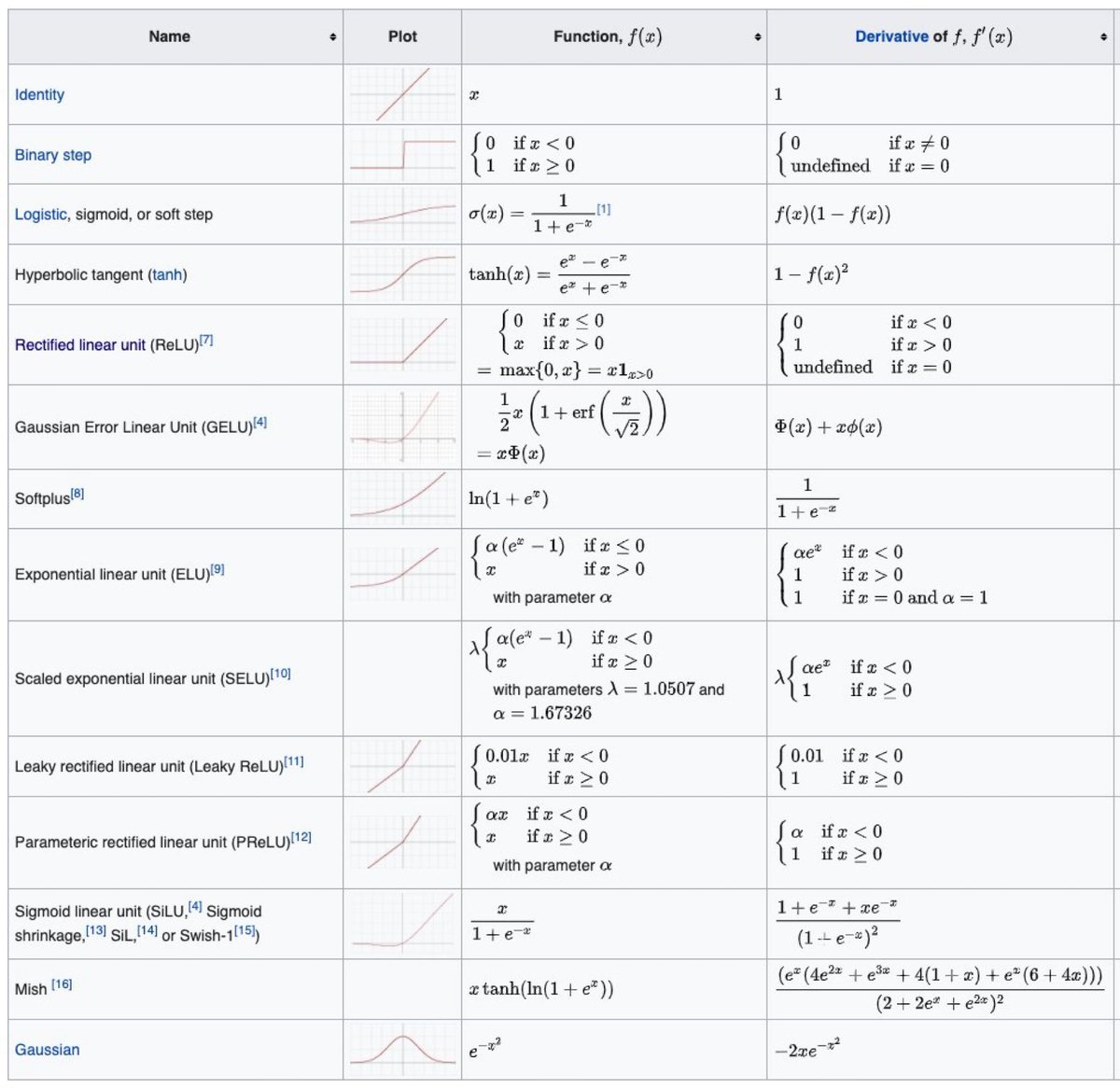

I wrote a thread on sequential estimation (of a constant A, in this toy example) to illustrates the idea. Of course the KF is far more general - it tracks *dynamic* systems where the internal state is itself evolving & subject to uncertainties of its own

https://x.com/docmilanfar/status/1503206828716400641

• • •

Missing some Tweet in this thread? You can try to

force a refresh